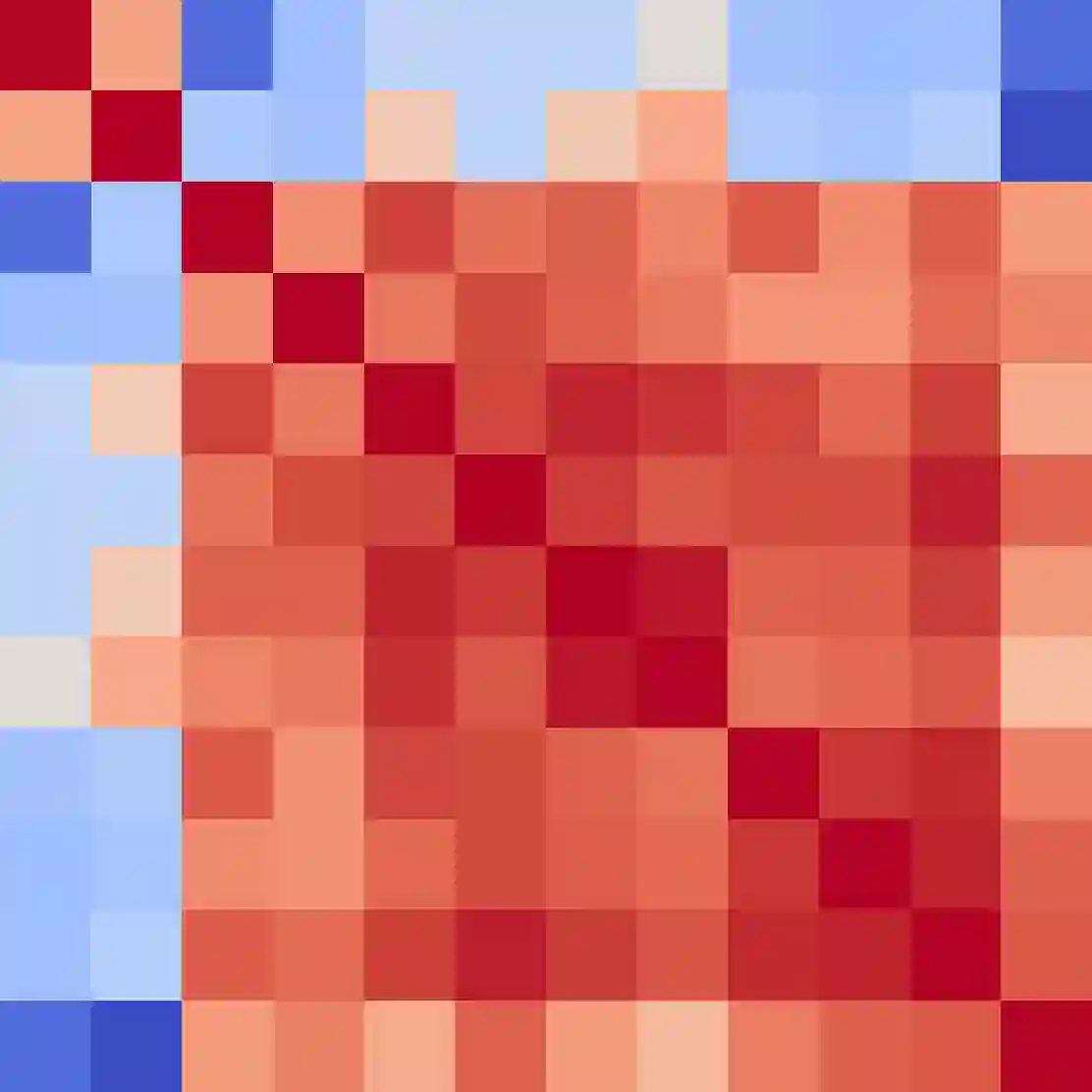

Despite significant progress in improving the instruction-following capabilities of large language models (LLMs), processing multiple potentially entangled or conflicting instructions remains a considerable challenge. Real-world scenarios often require consistency across multiple instructions over time, for example keeping secrets private, honoring personal preferences, and respecting priorities. These demand the ability to integrate multiple turns and to carefully balance competing objectives when instructions intersect or conflict. This work presents a systematic investigation of LLMs' capabilities in handling multiple turns of instructions, covering three levels of difficulty: (1) retrieving information from instructions, (2) tracking and reasoning across turns, and (3) resolving conflicts among instructions. We construct MultiTurnInstruct, a set of around 1.1K high-quality multi-turn conversations built through a human-in-the-loop approach and organized into nine capability categories, including statics and dynamics, reasoning, and multitasking. Our findings reveal an intriguing trade-off between different capabilities. While GPT models demonstrate superior memorization, they are less effective in privacy-protection tasks that require selectively withholding information. Larger models exhibit stronger reasoning capabilities but still struggle to resolve conflicting instructions. Importantly, these performance gaps cannot be attributed solely to information loss: models achieve strong BLEU scores on memorization tasks, yet their attention mechanisms fail to integrate multiple related instructions effectively. These findings highlight critical areas for improvement in complex real-world tasks involving multi-turn instructions.
