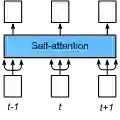

We propose Dynamic Rank Reinforcement Learning (DR-RL), a framework that adaptively optimizes the low-rank factorization of Multi-Head Self-Attention (MHSA) in Large Language Models (LLMs) by combining reinforcement learning with online matrix perturbation theory. Traditional low-rank approximations often assume a static rank, which limits their flexibility across diverse input contexts; our method instead selects ranks dynamically based on real-time sequence dynamics, layer-specific sensitivities, and hardware constraints. The core innovation is an RL agent that formulates rank selection as a sequential policy optimization problem, with a reward function that balances attention fidelity against computational latency. Crucially, we use online matrix perturbation bounds to enable incremental rank updates, avoiding the prohibitive cost of a full decomposition during inference. A lightweight Transformer-based policy network and batched Singular Value Decomposition (SVD) operations allow scalable deployment on modern GPU architectures. Experiments demonstrate that DR-RL maintains downstream accuracy statistically equivalent to full-rank attention while significantly reducing Floating Point Operations (FLOPs), particularly in long-sequence regimes (sequence length L > 4096). This work bridges the gap between adaptive efficiency and theoretical rigor in MHSA, offering a principled, mathematically grounded alternative to heuristic rank reduction techniques in resource-constrained deep learning. Source code and experiment logs are available at: https://github.com/canererden/DR_RL_Project
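To make the fidelity-versus-cost trade-off concrete, the following is a minimal NumPy sketch, not the DR-RL implementation. It truncates a single attention matrix to rank `r` with a full SVD and scores each candidate rank with a toy reward. The reward form (negative relative Frobenius error minus a weighted rank fraction), the weight `lam`, the candidate list, and all function names are assumptions made for illustration. The abstract does not specify the actual reward, and the method described there uses a learned policy and incremental updates rather than the exhaustive search shown here.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention_matrix(q, k):
    # Scaled dot-product attention weights for one head: softmax(QK^T / sqrt(d)).
    d = q.shape[-1]
    return softmax(q @ k.T / np.sqrt(d))


def truncate_rank(a, r):
    # Best rank-r approximation in Frobenius norm (Eckart-Young), via a full SVD.
    u, s, vt = np.linalg.svd(a, full_matrices=False)
    approx = (u[:, :r] * s[:r]) @ vt[:r]
    # The approximation error equals the norm of the discarded singular values.
    tail = float(np.sqrt(np.sum(s[r:] ** 2)))
    return approx, tail


def toy_reward(a, r, lam=0.01):
    # Hypothetical reward: penalize relative error and a crude cost proxy (rank fraction).
    approx, _ = truncate_rank(a, r)
    rel_err = np.linalg.norm(a - approx) / np.linalg.norm(a)
    cost = r / min(a.shape)
    return float(-rel_err - lam * cost)


rng = np.random.default_rng(0)
q = rng.standard_normal((64, 16))  # 64 tokens, head dimension 16 (illustrative sizes)
k = rng.standard_normal((64, 16))
a = attention_matrix(q, k)

candidates = [4, 8, 16, 32, 64]
rewards = {r: toy_reward(a, r) for r in candidates}
best_rank = max(rewards, key=rewards.get)
print(best_rank, rewards[best_rank])
```

Raising `lam` pushes the selected rank down, and lowering it favors fidelity. An RL policy would replace the `max` over candidates with a learned, state-dependent choice.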
