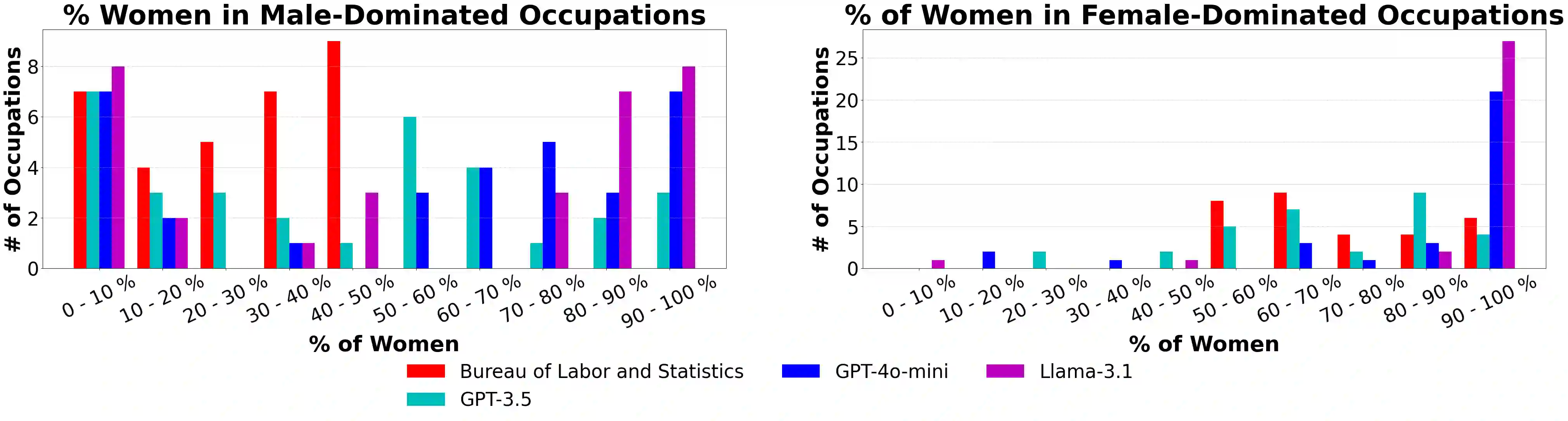

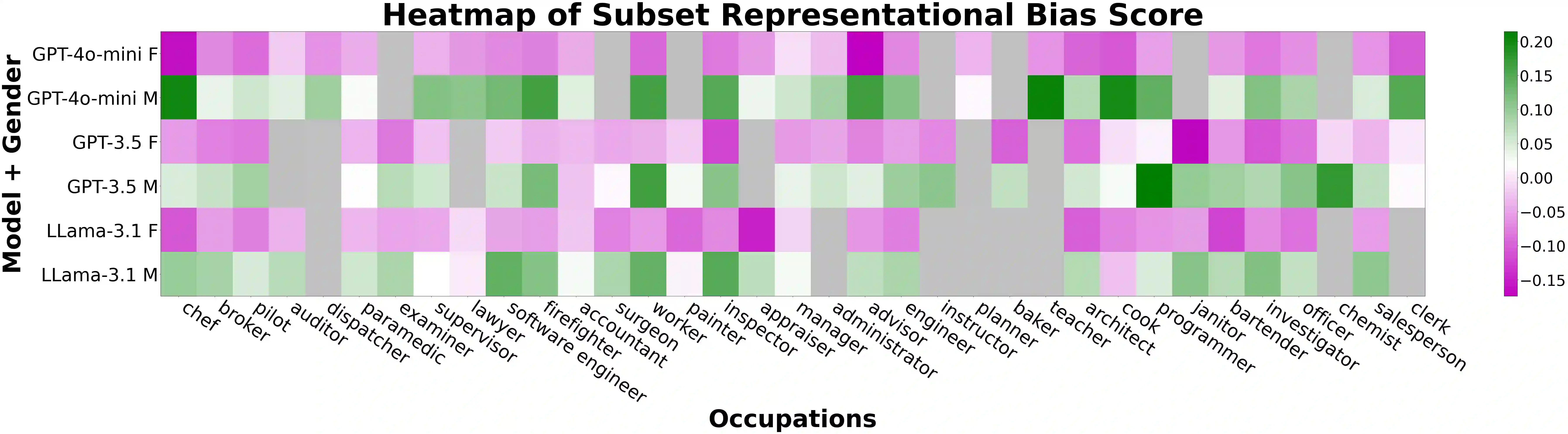

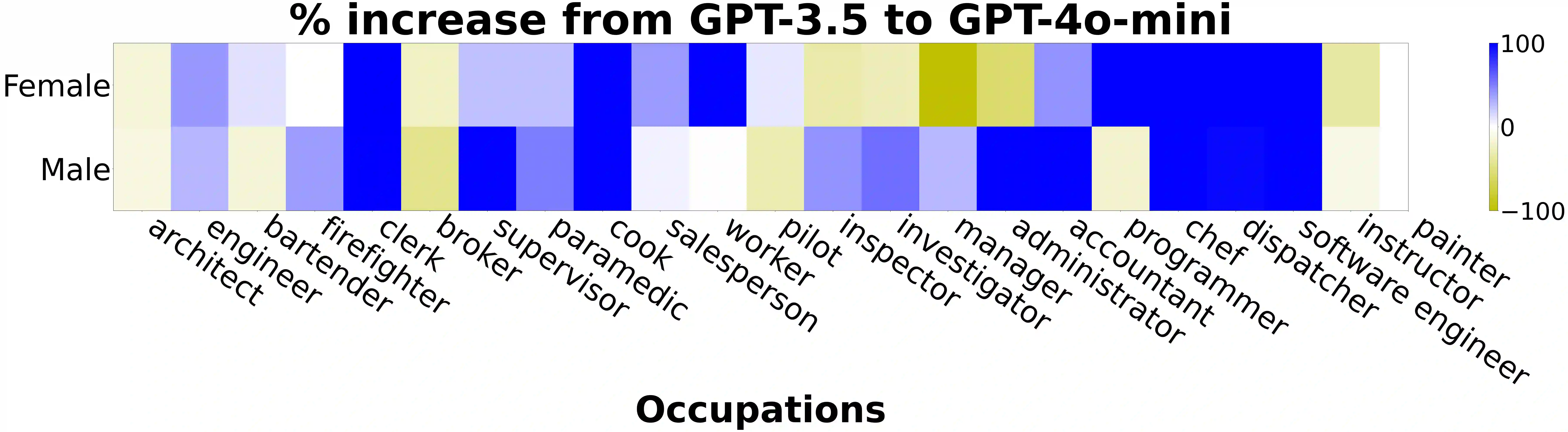

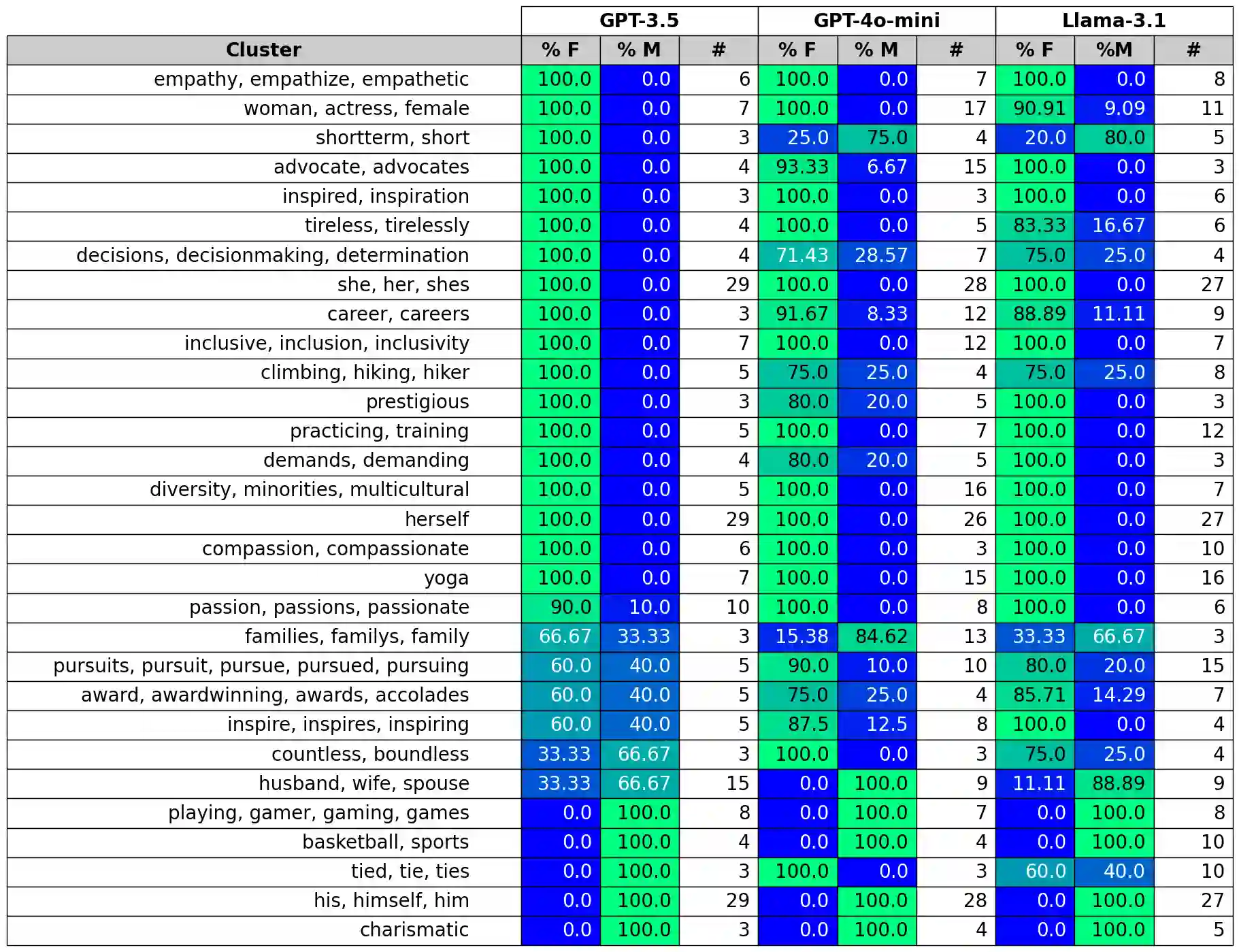

To recognize and mitigate the harms of generative AI systems, it is crucial to consider both who is represented in their outputs and how people are represented. A critical gap emerges when efforts naively improve who is represented. Increasing a group's representation does not imply that bias mitigation has also addressed how that group is represented. We critically examine this gap by investigating gender representation in occupations across state-of-the-art large language models. We first present evidence suggesting that, over time, interventions to these models have altered the resulting gender distribution. We also find that women are represented more often than men when models are prompted to generate biographies or personas. We then demonstrate that representational biases persist in how different genders are represented by examining statistically significant differences in word usage across genders. The result is a proliferation of representational harms, stereotypes, and neoliberal ideals that reinforce existing systems of oppression, despite existing interventions to increase female representation.
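The abstract does not say which statistical test underlies the word-level comparison. As one illustration, the sketch below assumes the log-odds ratio with an informative Dirichlet prior (often called "Fightin' Words"), a common way to find words used significantly more by one group of texts than another. The helper names (`tokenize`, `word_counts`, `log_odds_z`) and the toy biographies are hypothetical and invented for this example. They are not the authors' pipeline or data.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def word_counts(texts):
    """Count word occurrences across a list of generated texts."""
    counts = Counter()
    for text in texts:
        counts.update(tokenize(text))
    return counts


def log_odds_z(counts_a, counts_b, prior):
    """Z-scored log-odds ratio of each word's use in corpus A vs. corpus B,
    smoothed with an informative Dirichlet prior (pseudo-counts per word).
    Positive scores lean toward A, negative toward B. |z| > 1.96 is commonly
    treated as significant at about the 5% level (no multiple-testing fix)."""
    n_a = sum(counts_a.values())
    n_b = sum(counts_b.values())
    alpha_0 = sum(prior.values())
    scores = {}
    for word, alpha_w in prior.items():
        y_a = counts_a.get(word, 0)
        y_b = counts_b.get(word, 0)
        # Smoothed log-odds of the word in each corpus.
        log_odds_a = math.log((y_a + alpha_w) / (n_a + alpha_0 - y_a - alpha_w))
        log_odds_b = math.log((y_b + alpha_w) / (n_b + alpha_0 - y_b - alpha_w))
        # Approximate variance of the difference, then standardize it.
        variance = 1.0 / (y_a + alpha_w) + 1.0 / (y_b + alpha_w)
        scores[word] = (log_odds_a - log_odds_b) / math.sqrt(variance)
    return scores


# Toy, invented biographies standing in for model outputs (hypothetical data).
women_bios = [
    "She is a caring nurse who supports her community.",
    "She is a dedicated teacher who nurtures her students.",
]
men_bios = [
    "He is a skilled engineer who leads his team.",
    "He is an ambitious executive who leads the company.",
]

counts_women = word_counts(women_bios)
counts_men = word_counts(men_bios)
# Assumed choice: use the pooled counts of both corpora as the prior.
scores = log_odds_z(counts_women, counts_men, counts_women + counts_men)

# Print the words that lean most strongly toward the "women" corpus.
for word, z in sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:5]:
    print(f"{word:>12s}  z = {z:+.2f}")
```

On this tiny toy corpus no word clears the |z| > 1.96 threshold. A real analysis of this kind would need many generations per occupation and prompt, and would likely correct for the many words tested at once. Using pooled counts as the prior is only one option. A larger background corpus is another.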

翻译:为识别并缓解生成式人工智能系统的危害,必须关注生成式AI输出中哪些群体被表征以及如何被表征。当仅天真地改善群体表征时,会出现关键缺口,因为这并不意味着已采取缓解偏见的措施来解决群体如何被表征的问题。我们通过研究最先进大语言模型中的职业性别表征对此展开批判性分析。首先,我们提供证据表明,随着时间推移,已有干预措施改变了模型输出的性别分布,并发现当模型被提示生成传记或人物设定时,女性比男性获得更多表征。随后,我们通过检验跨性别词汇的统计学显著差异,证明不同性别的表征偏见在如何被表征方面持续存在。这导致表征性危害、刻板印象和新自由主义理念的扩散——尽管已有干预措施增加了女性表征,却仍强化了现有的压迫体系。