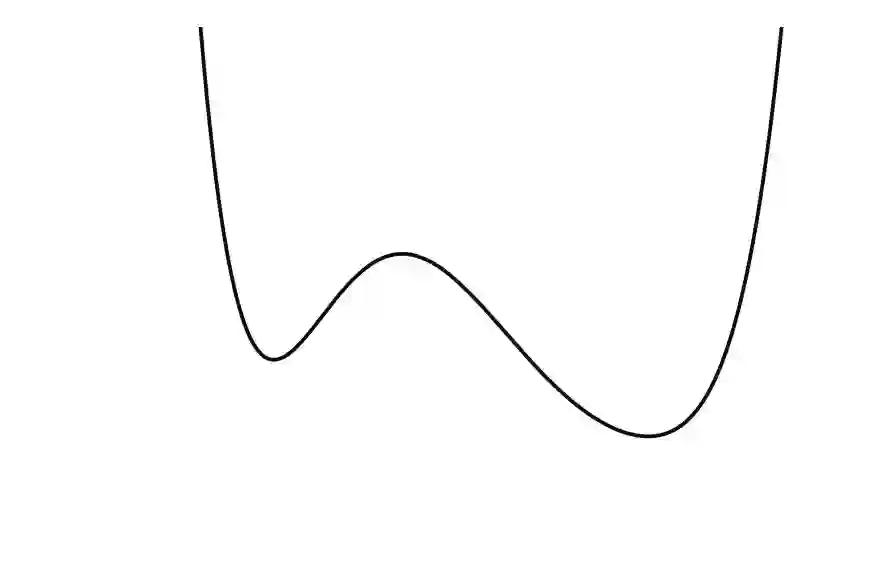

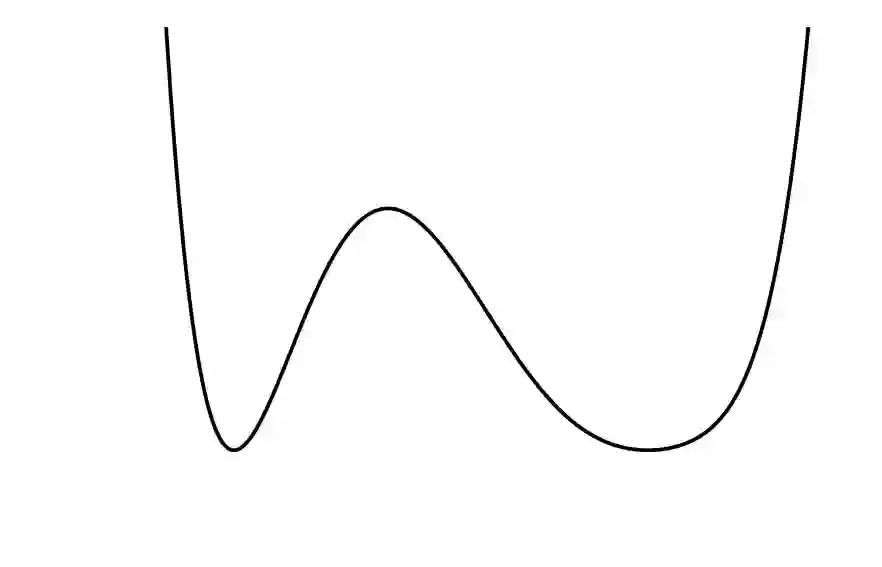

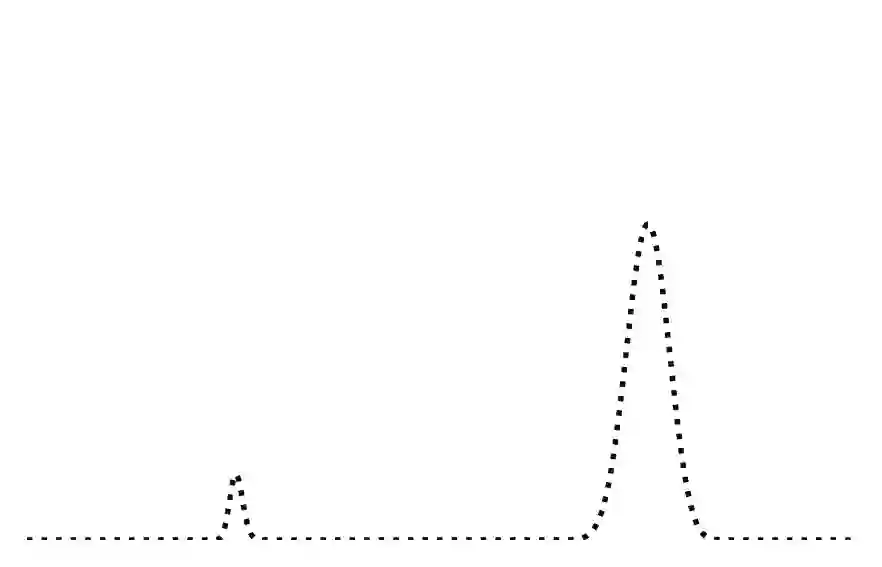

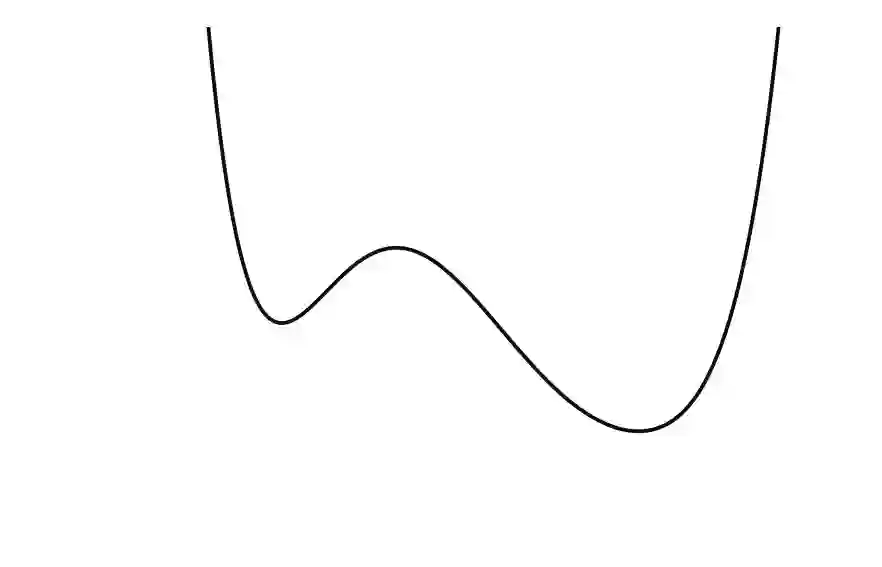

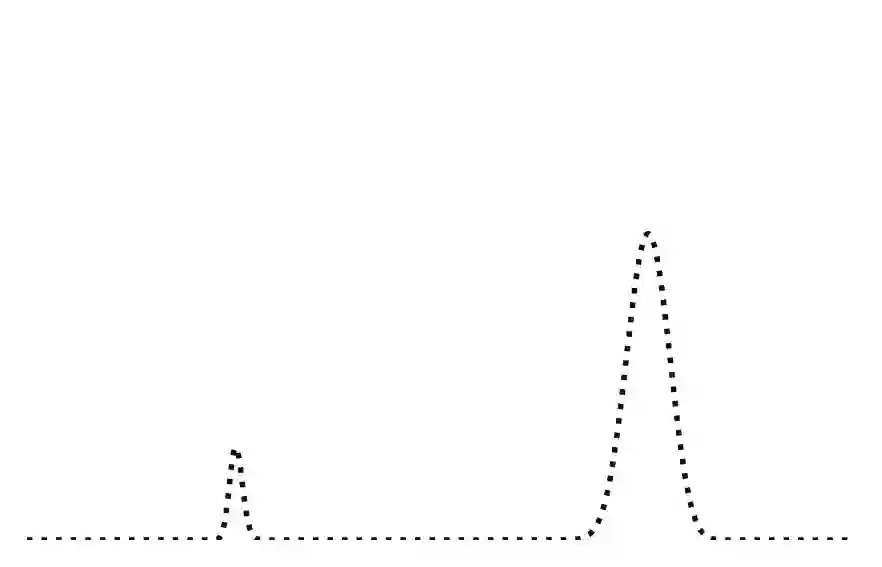

Generalization in deep learning is closely tied to the pursuit of flat minima in the loss landscape, yet classical Stochastic Gradient Langevin Dynamics (SGLD) offers no mechanism to bias its dynamics toward such low-curvature solutions. This work introduces Flatness-Aware Stochastic Gradient Langevin Dynamics (fSGLD), designed to efficiently and provably seek flat minima in high-dimensional nonconvex optimization problems. At each iteration, fSGLD uses the stochastic gradient evaluated at parameters perturbed by isotropic Gaussian noise, commonly referred to as Random Weight Perturbation (RWP), thereby optimizing a randomized-smoothing objective that implicitly captures curvature information. Leveraging these properties, we prove that the invariant measure of fSGLD stays close to a stationary measure concentrated on the global minimizers of a loss function regularized by the Hessian trace whenever the inverse temperature and the scale of random weight perturbation are properly coupled. This result provides a rigorous theoretical explanation for the benefits of random weight perturbation. In particular, we establish non-asymptotic convergence guarantees in Wasserstein distance with the best known rate and derive an excess-risk bound for the Hessian-trace regularized objective. Extensive experiments on noisy-label and large-scale vision tasks, in both training-from-scratch and fine-tuning settings, demonstrate that fSGLD achieves generalization and robustness superior or comparable to those of baseline algorithms while maintaining the computational cost of SGD, which is about half that of SAM. Hessian-spectrum analysis further confirms that fSGLD converges to significantly flatter minima.
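
The update described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names (`fsgld_step`, `smoothed_loss`), the parameter names (`step_size`, `beta`, `sigma`), and the exact form of the update (a standard SGLD step whose gradient is evaluated at Gaussian-perturbed weights) are assumptions made for illustration, and the coupling between the inverse temperature and the perturbation scale required by the theory is not reproduced here. The second function estimates the randomized-smoothing objective by Monte Carlo; for a smooth loss, Gaussian smoothing with scale `sigma` adds approximately `sigma**2 / 2` times the Hessian trace, and for a quadratic loss this relation holds exactly.

```python
import numpy as np


def fsgld_step(theta, grad_fn, step_size, beta, sigma, rng):
    """One flatness-aware SGLD update (illustrative sketch, assumed form).

    theta     : current parameters, 1-D array
    grad_fn   : callable returning a (stochastic) gradient at given parameters
    step_size : learning rate
    beta      : inverse temperature of the Langevin noise
    sigma     : scale of the random weight perturbation
    rng       : numpy.random.Generator
    """
    # Random weight perturbation: evaluate the gradient at theta + sigma * xi.
    xi = rng.standard_normal(theta.shape)
    grad = grad_fn(theta + sigma * xi)
    # Langevin noise with per-coordinate variance 2 * step_size / beta.
    noise = rng.standard_normal(theta.shape)
    return theta - step_size * grad + np.sqrt(2.0 * step_size / beta) * noise


def smoothed_loss(loss_fn, theta, sigma, n_samples, rng):
    """Monte Carlo estimate of E[loss(theta + sigma * xi)], xi ~ N(0, I)."""
    xi = rng.standard_normal((n_samples,) + theta.shape)
    return float(np.mean([loss_fn(theta + sigma * x) for x in xi]))


# Toy quadratic loss 0.5 * theta^T A theta with Hessian A (hypothetical example).
A = np.diag([1.0, 4.0])


def quad_loss(theta):
    return 0.5 * theta @ A @ theta


def quad_grad(theta):
    return A @ theta


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    theta = np.array([2.0, -2.0])
    for _ in range(2000):
        theta = fsgld_step(theta, quad_grad, step_size=0.05,
                           beta=1e4, sigma=0.1, rng=rng)
    print("final iterate:", theta)

    point = np.array([1.0, -1.0])
    estimate = smoothed_loss(quad_loss, point, sigma=0.5,
                             n_samples=50_000, rng=rng)
    exact = quad_loss(point) + 0.5 * 0.5**2 * np.trace(A)
    print("smoothed loss (Monte Carlo):", estimate)
    print("loss + sigma^2/2 * tr(Hessian):", exact)
```

In this sketch each step calls `grad_fn` once, which is consistent with the claim that the cost matches SGD, whereas SAM needs two gradient evaluations per step.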
