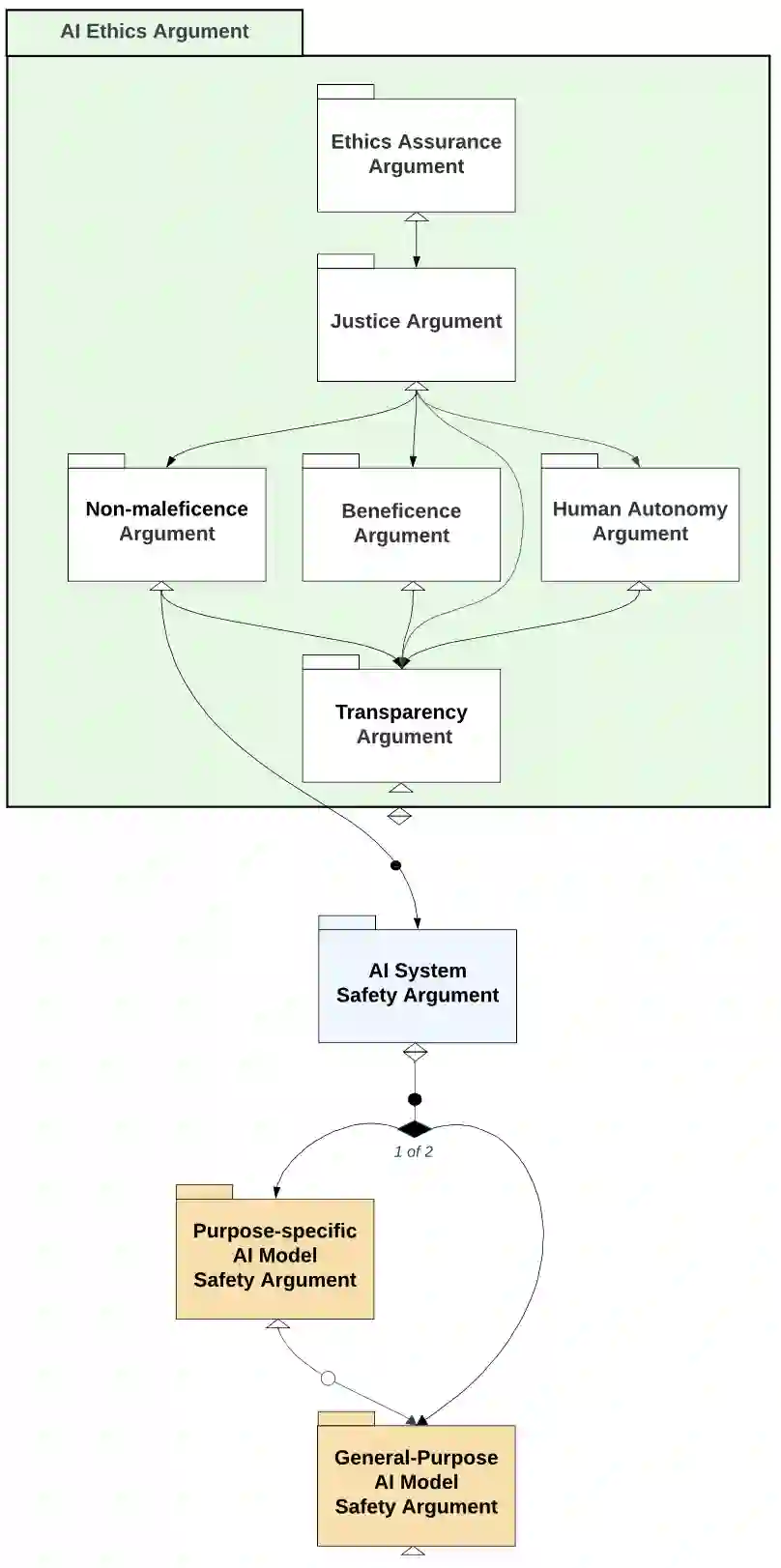

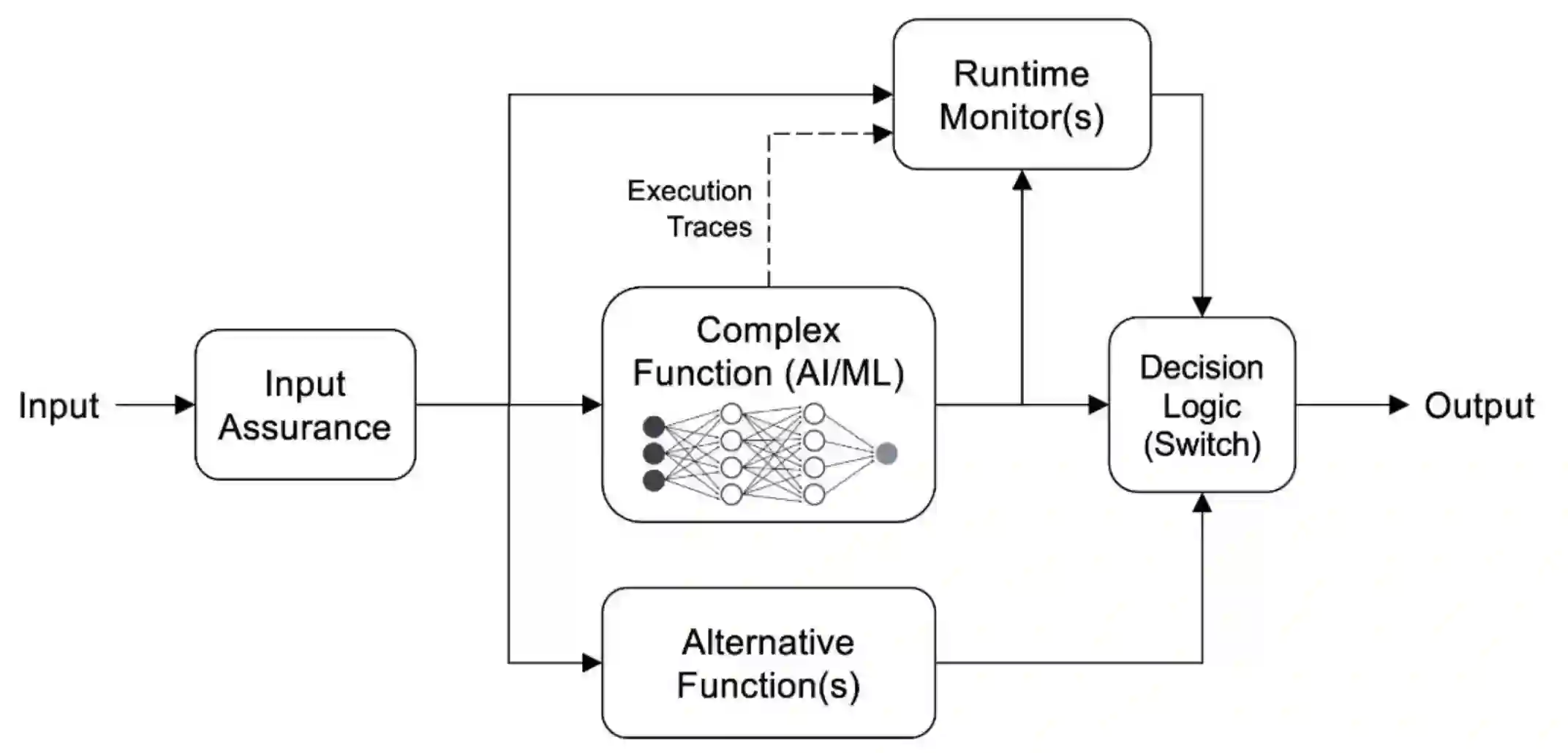

We present our Balanced, Integrated and Grounded (BIG) argument for assuring the safety of AI systems. The BIG argument adopts a whole-system approach to constructing a safety case for AI systems of varying capability, autonomy and criticality. First, it is balanced: it addresses safety alongside other critical ethical issues, such as privacy and equity, and acknowledges the complexities and trade-offs in the broader societal impact of AI. Second, it is integrated: it brings together the social, ethical and technical aspects of safety assurance in a way that is traceable and accountable. Third, it is grounded in long-established safety norms and practices, such as sensitivity to context and proportionality to risk. Whether the AI capability is narrow and constrained or general-purpose and powered by a frontier or foundation model, the BIG argument insists on a systematic treatment of safety. Further, it places particular focus on the novel hazardous behaviours that emerge from the advanced capabilities of frontier AI models and on the open contexts in which these models are rapidly being deployed. These complex issues are considered within a wider AI safety case that approaches assurance from both technical and sociotechnical perspectives. Examples illustrating the use of the BIG argument are provided throughout the paper.

翻译:本文提出了一种平衡、集成与基于实践(BIG)的论证框架,用于确保人工智能系统的安全性。该论证框架采用全系统方法,为不同能力、自主性和关键性的人工智能系统构建安全案例。首先,其平衡性体现在将安全性与隐私、公平等其他关键伦理问题协同考量,承认人工智能更广泛社会影响中的复杂性与权衡关系。其次,其集成性体现在以可追溯、可问责的方式,将安全保障的社会、伦理与技术维度有机结合。第三,其基于实践的特性体现在遵循长期确立的安全规范与实践,例如对情境保持敏感并维持风险比例原则。无论人工智能能力是狭窄受限的,还是由前沿或基础模型驱动的通用型系统,BIG论证都坚持对安全性进行系统性处理。此外,该框架特别关注前沿AI模型先进能力所衍生的新型危险行为,以及这些模型快速部署的开放环境。这些复杂问题被置于更广泛的AI安全案例中加以考量,从技术和社会技术双重视角推进安全保障。本文通篇提供了阐释BIG论证应用的具体案例。