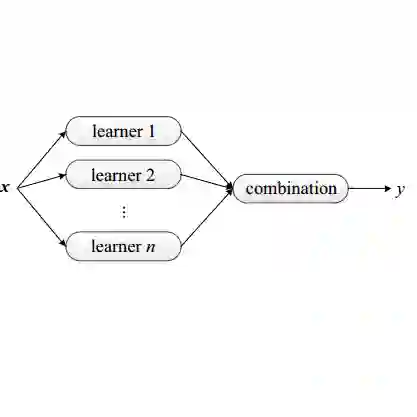

Accurate vehicle type recognition underpins intelligent transportation and logistics, but severe class imbalance in public datasets suppresses performance on rare categories. We curate a 16-class corpus (~47k images) by merging Kaggle, ImageNet, and web-crawled data, and create six balanced variants via SMOTE oversampling and targeted undersampling. Lightweight ensembles built on MobileNet-V2 features (Random Forest, AdaBoost, and a soft-voting combiner) are benchmarked against a configurable ResNet-style CNN trained with strong augmentation and label smoothing. The best ensemble (SMOTE-combined) attains 74.8% test accuracy, while the CNN achieves 79.19% on the full test set and 81.25% on an unseen inference batch, confirming the advantage of deep models. Nonetheless, the most under-represented class (Barge) remains a failure mode, highlighting the limits of rebalancing alone. The results suggest prioritizing additional minority-class data collection, adopting cost-sensitive objectives (e.g., focal loss), and exploring hybrid ensemble-CNN pipelines that combine interpretability with representational power.
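To make the cost-sensitive objective mentioned above concrete, the following is a minimal NumPy sketch of a multi-class focal loss. It is an illustration only, not the training code used in this work: the function name `focal_loss`, the default `gamma=2.0`, and the optional per-class weight vector `alpha` are assumptions introduced here.

```python
import numpy as np


def focal_loss(probs, labels, gamma=2.0, alpha=None):
    """Mean multi-class focal loss (illustrative sketch, hypothetical helper).

    probs  : (n, k) array of predicted class probabilities, rows sum to 1.
    labels : (n,) array of integer class indices.
    gamma  : focusing parameter; gamma = 0 reduces to plain cross-entropy.
    alpha  : optional (k,) array of per-class weights, e.g. larger for rare classes.
    """
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=int)

    # Probability assigned to the true class, clipped to avoid log(0).
    p_t = np.clip(probs[np.arange(len(labels)), labels], 1e-12, 1.0)

    # Down-weight well-classified examples by the factor (1 - p_t)^gamma.
    loss = -((1.0 - p_t) ** gamma) * np.log(p_t)

    # Optionally re-weight each example by the weight of its true class.
    if alpha is not None:
        loss = loss * np.asarray(alpha, dtype=float)[labels]

    return float(loss.mean())
```

With `gamma=0` and no `alpha`, the function returns the ordinary cross-entropy. Larger `gamma` shrinks the contribution of confidently correct examples, so that hard or rare-class examples (such as Barge in this corpus) account for more of the total loss.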
