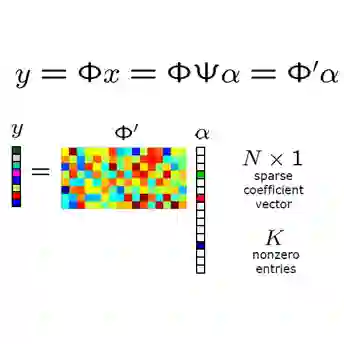

In this study we develop dimension-reduction techniques to accelerate diffusion model inference in the context of synthetic data generation. The idea is to integrate compressed sensing into diffusion models (hence the name CSDM). First, compress the dataset from the ambient space into a latent space and train a diffusion model in the latent space. Next, apply a compressed sensing algorithm to the samples generated in the latent space to decode them back to the original space. The goal is to improve the efficiency of both model training and inference. Under certain sparsity assumptions on the data, our proposed approach achieves provably faster convergence by combining diffusion model inference with sparse recovery. It also sheds light on the best choice of the latent space dimension. To illustrate the effectiveness of this approach, we run numerical experiments on a range of datasets, including handwritten digits, medical and climate images, and financial time series for stress testing.
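The sketch below makes the compress-then-recover step concrete in Python/NumPy. It is not the paper's implementation. It assumes a random Gaussian sensing matrix `A` as the encoder and uses Orthogonal Matching Pursuit (OMP) as the decoder; the paper may use a different measurement matrix and a different sparse-recovery algorithm. The diffusion model itself is omitted. In CSDM it would be trained on latent vectors `y = A x`, and its samples would be passed to the decoder. The helper names `compress` and `omp` are hypothetical. The latent dimension follows the standard compressed-sensing scaling `m ~ s log(d/s)`, and the constant 4 is an arbitrary illustrative choice.

```python
import numpy as np


def compress(x, A):
    """Encode: map an ambient vector x (length d) to a latent vector y = A x (length m)."""
    return A @ x


def omp(A, y, sparsity):
    """Orthogonal Matching Pursuit: greedily recover an s-sparse x from y = A x."""
    d = A.shape[1]
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(sparsity):
        # Select the column of A most correlated with the current residual.
        j = int(np.argmax(np.abs(A.T @ residual)))
        if j not in support:
            support.append(j)
        # Least-squares fit on the selected columns, then update the residual.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x_hat = np.zeros(d)
    x_hat[support] = coef
    return x_hat


rng = np.random.default_rng(0)
d, s = 256, 5                                   # ambient dimension, sparsity level
m = int(np.ceil(4 * s * np.log(d / s)))         # latent dimension (illustrative constant 4)
A = rng.standard_normal((m, d)) / np.sqrt(m)    # assumed Gaussian sensing matrix

# A synthetic s-sparse "data point" in the ambient space.
x = np.zeros(d)
idx = rng.choice(d, size=s, replace=False)
x[idx] = rng.standard_normal(s)

y = compress(x, A)      # latent vector; CSDM would train its diffusion model on such vectors
x_hat = omp(A, y, s)    # decode back to the ambient space via sparse recovery
print(m, np.linalg.norm(x - x_hat))
```

OMP requires the sparsity level `s` as an input. A practical decoder might instead estimate `s` or switch to a penalized method such as LASSO. That choice is a design decision the abstract does not specify.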
