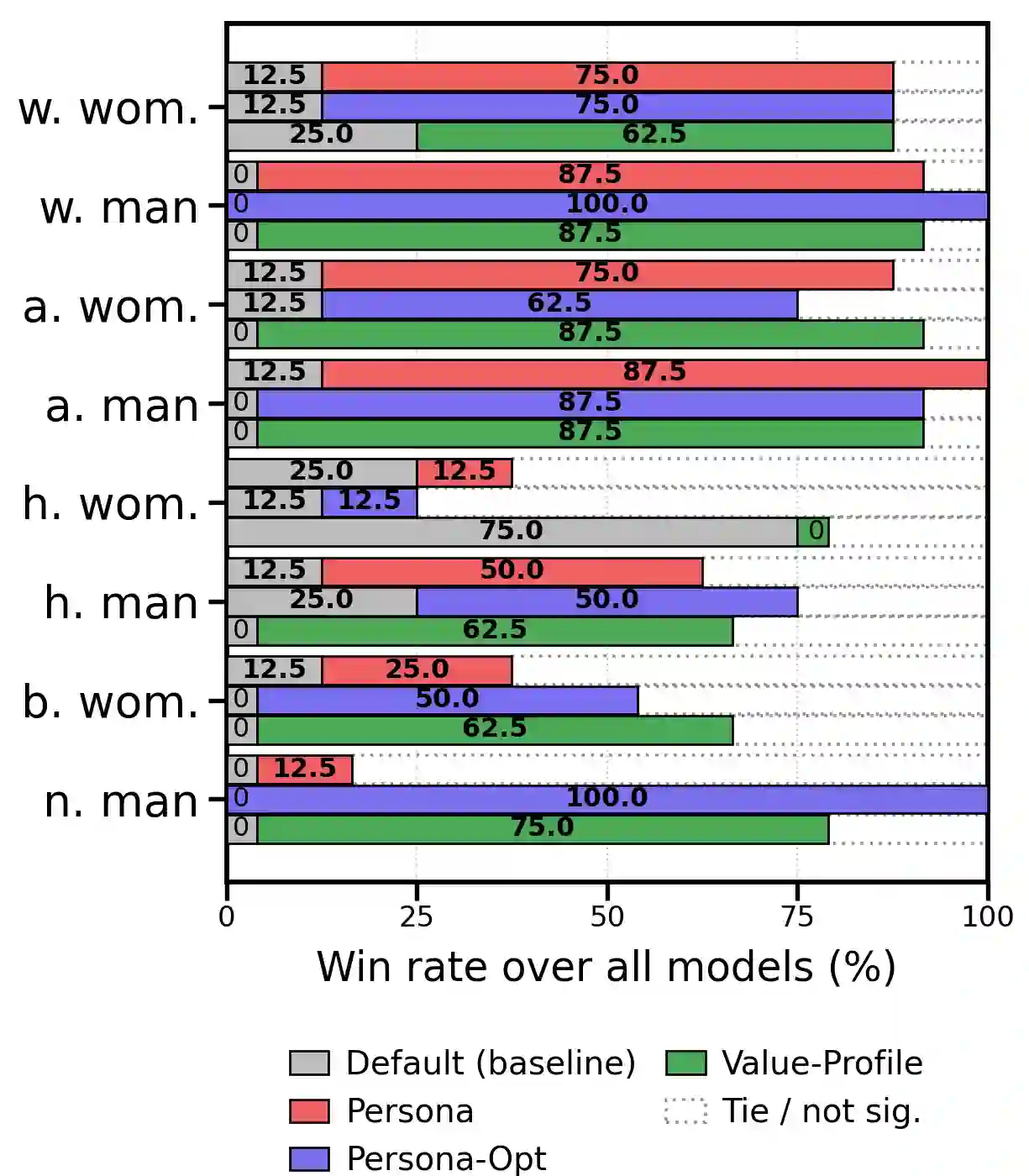

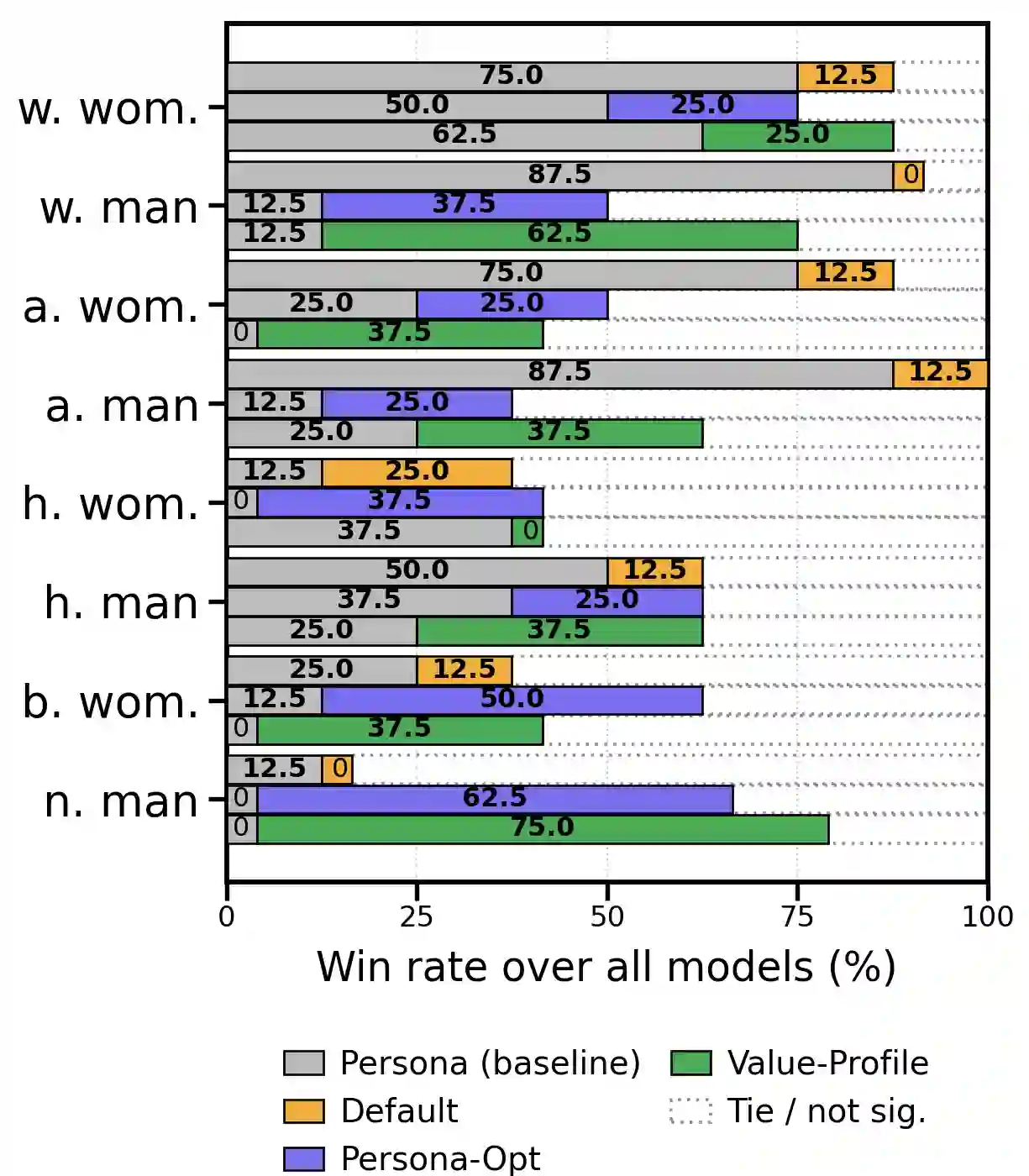

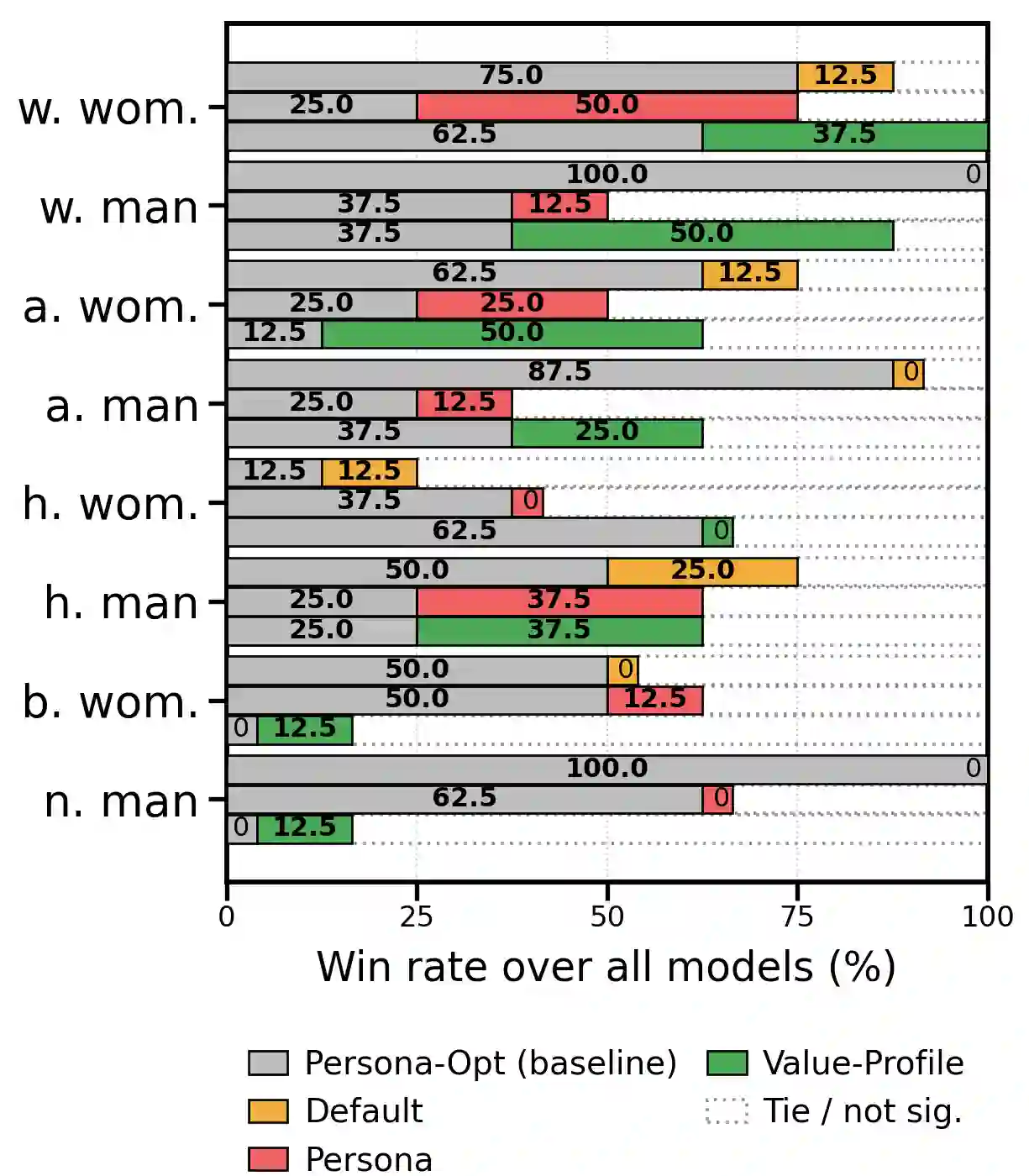

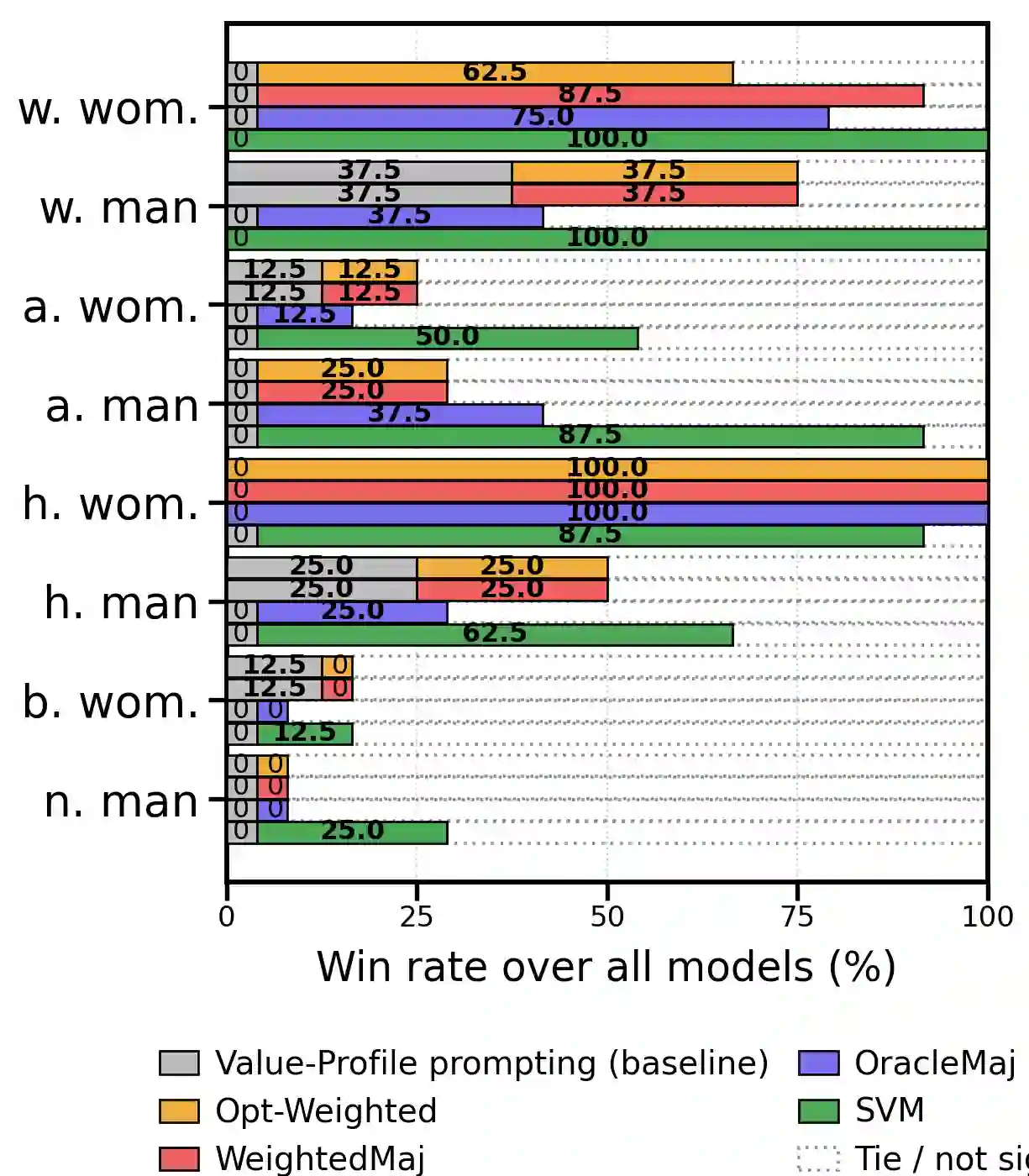

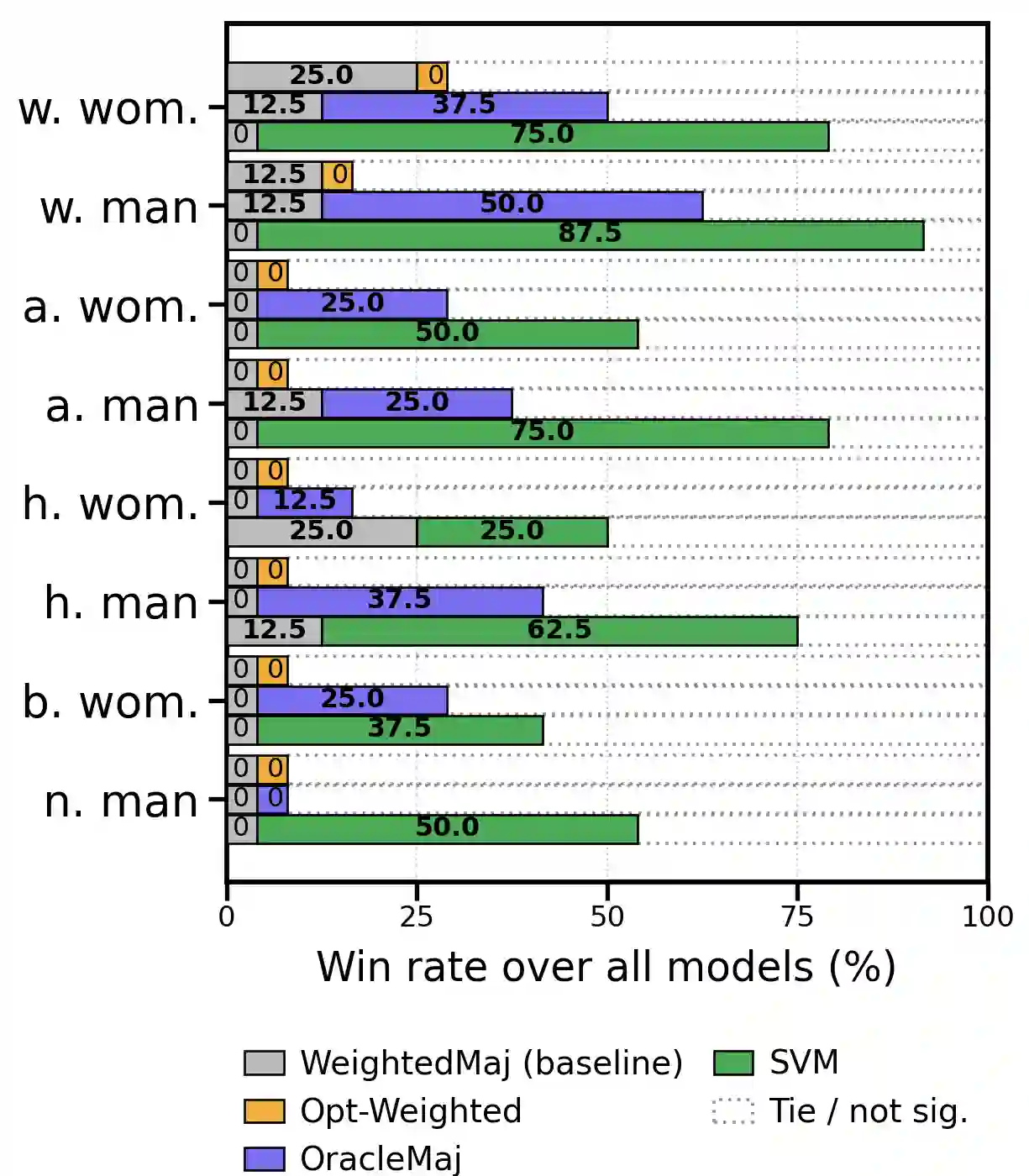

Toxicity detection is inherently subjective, shaped by the diverse perspectives and social priors of different demographic groups. While "pluralistic" modeling, as used in economics and the social sciences, aims to capture differences in perspective across contexts, current Large Language Model (LLM) prompting techniques yield inconsistent results across personas and base models. In this work, we conduct a systematic evaluation of persona-aware toxicity detection and show that no single prompting method, including our proposed automated prompt optimization strategy, uniformly dominates across all model-persona pairs. To exploit complementary errors, we explore ensembling four prompting variants and propose a lightweight meta-ensemble: an SVM over the 4-bit vector of prompt predictions. Our results demonstrate that the proposed SVM ensemble consistently outperforms both individual prompting methods and traditional majority voting, achieving the strongest overall performance across diverse personas. This work provides one of the first systematic comparisons of persona-conditioned prompting for toxicity detection and offers a robust method for pluralistic evaluation in subjective NLP tasks.
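To make the meta-ensemble idea concrete, the sketch below contrasts majority voting with a learned linear classifier over the 4-bit vector of prompt predictions. It is an illustration under stated assumptions, not the implementation evaluated in this work: the data are synthetic (the label is simply set equal to the first prompt's prediction, so one prompt is reliable and three are noise), the classifier is a dependency-free stand-in trained on the hinge loss (the loss an SVM uses) with no regularization term or kernel, and the names `majority_vote`, `train_hinge_classifier`, and `predict` are hypothetical helpers introduced here.

```python
from itertools import product


def majority_vote(bits):
    """Return 1 (toxic) only if a strict majority of prompt predictions are 1."""
    return 1 if 2 * sum(bits) > len(bits) else 0


def train_hinge_classifier(X, y, lr=1.0, max_epochs=100):
    """Fit a linear classifier by per-example subgradient steps on the hinge loss.

    X is a list of 0/1 tuples (one bit per prompting variant), y a list of 0/1
    labels. This is a simplified stand-in for an SVM: no regularization, no kernel.
    """
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(max_epochs):
        updated = False
        for x, label in zip(X, y):
            t = 1 if label == 1 else -1  # map {0, 1} labels to {-1, +1}
            margin = t * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # example violates the margin: take a hinge-loss step
                w = [wi + lr * t * xi for wi, xi in zip(w, x)]
                b += lr * t
                updated = True
        if not updated:  # every example now has margin >= 1
            break
    return w, b


def predict(w, b, x):
    """Return 1 (toxic) if the linear score is positive, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def accuracy(preds, labels):
    return sum(p == l for p, l in zip(preds, labels)) / len(labels)


# Synthetic data (an assumption for illustration): all 16 possible 4-bit
# prediction vectors, with the label equal to the first prompt's prediction.
X = list(product([0, 1], repeat=4))
y = [x[0] for x in X]

w, b = train_hinge_classifier(X, y)
vote_acc = accuracy([majority_vote(x) for x in X], y)
meta_acc = accuracy([predict(w, b, x) for x in X], y)
print(f"majority vote: {vote_acc:.4f}, learned meta-classifier: {meta_acc:.4f}")
```

On this toy set, equal-weight voting gets 11 of the 16 patterns right, while the learned weights recover the reliable prompt and separate all 16. The numbers say nothing about real performance; they only show why a learned combination can exploit complementary errors that a fixed vote cannot. In practice a library SVM with held-out evaluation would replace the hand-rolled trainer.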
