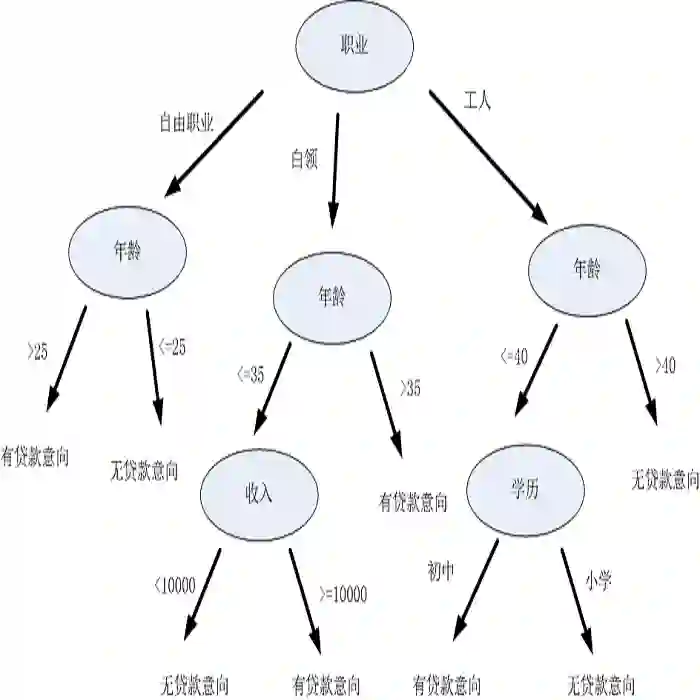

Decision tree models, including random forests and gradient-boosted decision trees, are widely used in machine learning due to their high predictive performance. However, their complex structures often make them difficult to interpret, especially in safety-critical applications where model decisions require formal justification. Recent work has demonstrated that logical and abductive explanations can be derived through automated reasoning techniques. In this paper, we propose a method for generating various types of explanations, namely, sufficient, contrastive, majority, and tree-specific explanations, using Answer Set Programming (ASP). Compared to SAT-based approaches, our ASP-based method offers greater flexibility in encoding user preferences and supports enumeration of all possible explanations. We empirically evaluate the approach on a diverse set of datasets and demonstrate its effectiveness and limitations compared to existing methods.

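To make two of the explanation types named in the abstract concrete, here is a minimal brute-force sketch in Python. It is not the paper's ASP encoding: the toy tree `predict`, the instance `x`, and the helpers `is_sufficient` and `minimal_sets` are all hypothetical names introduced for illustration. It assumes the usual definitions: a sufficient explanation is a set of features whose values in `x` fix the prediction no matter how the remaining features are set, and a contrastive explanation is a set of features that, when allowed to vary while the rest stay fixed, can change the prediction.

```python
from itertools import combinations, product

def predict(x):
    """Toy decision tree over three Boolean features (hypothetical example)."""
    if x[0]:
        return 1 if x[1] else 0
    return 1 if x[2] else 0

def is_sufficient(x, fixed, n=3):
    """True if every instance agreeing with x on `fixed` gets x's prediction."""
    free = [i for i in range(n) if i not in fixed]
    target = predict(x)
    for values in product([0, 1], repeat=len(free)):
        y = list(x)
        for i, v in zip(free, values):
            y[i] = v
        if predict(y) != target:
            return False
    return True

def minimal_sets(test, n=3):
    """Enumerate all subset-minimal feature sets satisfying a monotone `test`."""
    found = []
    # Visiting subsets by increasing size means any superset of an
    # already-found set can be skipped as non-minimal.
    for size in range(n + 1):
        for subset in combinations(range(n), size):
            s = set(subset)
            if any(m <= s for m in found):
                continue
            if test(s):
                found.append(s)
    return found

x = (1, 1, 0)  # instance to explain; the toy tree predicts class 1

# Minimal sufficient explanations: fixing these features forces the prediction.
sufficient = minimal_sets(lambda s: is_sufficient(x, s))

# Minimal contrastive explanations: freeing these features can flip the prediction,
# i.e. the complementary set of fixed features is not sufficient.
contrastive = minimal_sets(lambda s: not is_sufficient(x, set(range(3)) - s))

print(sufficient)   # [{0, 1}]
print(contrastive)  # [{0}, {1}]
```

Exhaustive enumeration like this is exponential in the number of features and only works for tiny models; the abstract's point is that a solver-based encoding can perform the same kind of enumeration on realistic tree ensembles.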