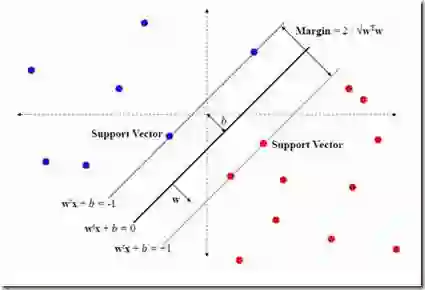

Foundation vision, audio, and language models enable zero-shot performance on downstream tasks via their latent representations. Recently, unsupervised learning of data group structure with deep learning methods has gained popularity. TURTLE, a state-of-the-art deep clustering algorithm, uncovers a labeling of the data without supervision by alternating between label and hyperplane updates that maximize the hyperplane margin, much as support vector machines (SVMs) do. However, TURTLE assumes that clusters are balanced; when the data are imbalanced, it yields suboptimal hyperplanes that increase clustering error. We propose PET-TURTLE, which generalizes the cost function to handle imbalanced data distributions through a power-law prior. Additionally, by introducing sparse logits in the labeling process, PET-TURTLE optimizes over a simpler search space, which in turn improves accuracy on balanced datasets. Experiments on synthetic and real data show that PET-TURTLE improves accuracy for imbalanced sources, prevents over-prediction of minority clusters, and enhances overall clustering.
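The abstract does not state the exact form of the generalized cost function, so the following is only an illustrative sketch of what a power-law prior over cluster sizes could look like. The function names `power_law_prior` and `kl_to_prior`, the exponent `alpha`, and the choice of a KL-divergence penalty on the average soft assignment are all assumptions made for illustration, not the authors' formulation.

```python
import numpy as np


def power_law_prior(num_clusters, alpha):
    """Hypothetical prior over cluster sizes: p_k proportional to k^(-alpha).

    alpha = 0 recovers the uniform (balanced) prior; larger alpha
    concentrates mass on the first few clusters.
    """
    ranks = np.arange(1, num_clusters + 1, dtype=float)
    weights = ranks ** (-alpha)
    return weights / weights.sum()


def kl_to_prior(soft_labels, prior, eps=1e-12):
    """KL divergence between the average soft assignment and the prior.

    soft_labels: array of shape (num_samples, num_clusters), rows sum to 1.
    This is an assumed regularizer, used here only to show how a
    non-uniform prior could replace a balanced-cluster assumption.
    """
    marginal = soft_labels.mean(axis=0)
    return float(np.sum(marginal * (np.log(marginal + eps) - np.log(prior + eps))))


if __name__ == "__main__":
    prior = power_law_prior(num_clusters=4, alpha=1.0)
    balanced = np.full((8, 4), 0.25)  # every sample spread evenly over clusters
    print("prior:", prior)
    print("penalty for balanced assignments:", kl_to_prior(balanced, prior))
```

Under this sketch, a balanced assignment incurs a positive penalty whenever `alpha > 0`, which is one way a clustering objective could be steered away from forcing equal-sized clusters on imbalanced data.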
