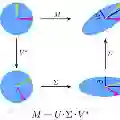

The Randomized Singular Value Decomposition (RSVD) is a widely used algorithm for efficiently computing low-rank approximations of large matrices without computing a full SVD. A central question is how the approximation error of RSVD compares with the optimal low-rank approximation error obtained from the SVD. While the literature provides various upper and lower error bounds for RSVD, in this paper we derive precise asymptotic expressions that characterize its approximation error as the matrix dimensions grow to infinity. Our expressions depend only on the singular values of the matrix, and we evaluate them for two important matrix ensembles: those with power-law and bilevel singular value distributions. Our results aim to quantify the gap between the existing theoretical bounds and the actual performance of RSVD. Furthermore, we extend our analysis to polynomial-filtered RSVD, deriving performance characterizations that provide insights into optimal filter selection.
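
To make the quantity under study concrete, the following is a minimal NumPy sketch of a basic RSVD and of the ratio between its Frobenius-norm error and the optimal rank-k error. It is an illustration under stated assumptions, not the paper's implementation: the function names (`power_law_matrix`, `rsvd`, `error_ratio`), the oversampling parameter `p`, the Gaussian test matrix, and the power-law exponent `alpha` are all hypothetical choices. The optional `q` power iterations are a simple monomial filter and may differ from the polynomial filters analyzed in the paper.

```python
import numpy as np


def power_law_matrix(n, alpha=1.0, seed=0):
    """Build an n x n matrix with singular values sigma_i = i^(-alpha).

    The singular vectors are random orthogonal matrices (an assumption
    made only for this illustration).
    """
    rng = np.random.default_rng(seed)
    U, _ = np.linalg.qr(rng.standard_normal((n, n)))
    V, _ = np.linalg.qr(rng.standard_normal((n, n)))
    sigma = np.arange(1, n + 1, dtype=float) ** (-alpha)
    return (U * sigma) @ V.T, sigma


def rsvd(A, k, p=5, q=0, seed=0):
    """Basic randomized SVD: Gaussian sketch, optional power iterations.

    k: target rank, p: oversampling, q: number of power iterations.
    Returns rank-k factors (U, s, Vt).
    """
    rng = np.random.default_rng(seed)
    n = A.shape[1]
    Y = A @ rng.standard_normal((n, k + p))  # sketch the range of A
    for _ in range(q):
        Y, _ = np.linalg.qr(Y)               # re-orthonormalize for stability
        Y = A @ (A.T @ Y)                    # one power iteration
    Q, _ = np.linalg.qr(Y)                   # orthonormal basis of the sketch
    B = Q.T @ A                              # small (k + p) x n matrix
    Ub, s, Vt = np.linalg.svd(B, full_matrices=False)
    return (Q @ Ub)[:, :k], s[:k], Vt[:k, :]


def error_ratio(A, sigma, k, **kwargs):
    """Frobenius error of RSVD divided by the optimal rank-k error."""
    U, s, Vt = rsvd(A, k, **kwargs)
    rsvd_err = np.linalg.norm(A - (U * s) @ Vt)
    optimal_err = np.sqrt(np.sum(sigma[k:] ** 2))  # Eckart-Young optimum
    return rsvd_err / optimal_err
```

A typical use is `A, sigma = power_law_matrix(200)` followed by `error_ratio(A, sigma, k=10)` and `error_ratio(A, sigma, k=10, q=2)`. By the Eckart-Young theorem the ratio cannot fall below 1, and how far above 1 it sits for a given singular value profile is the gap the abstract refers to.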
