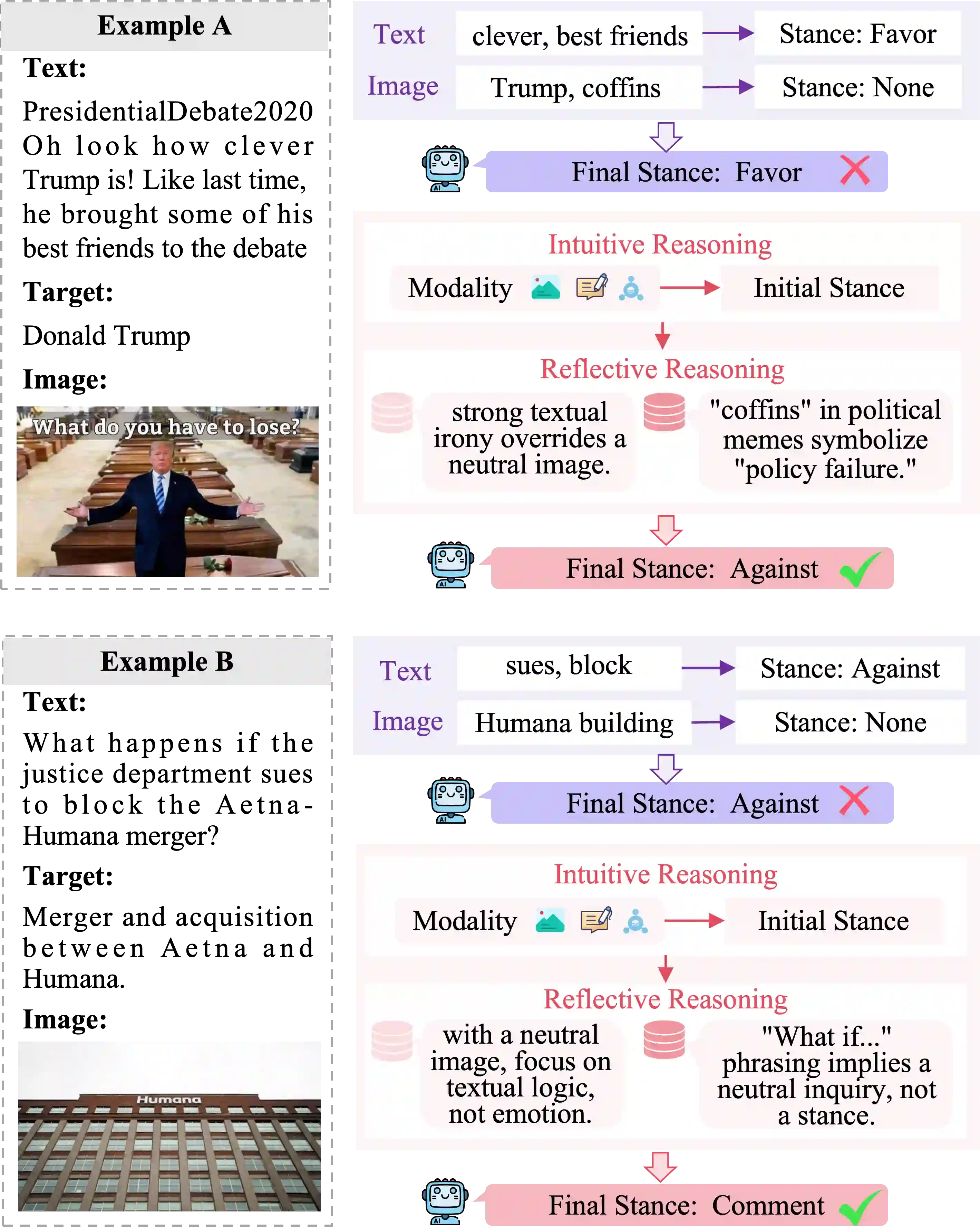

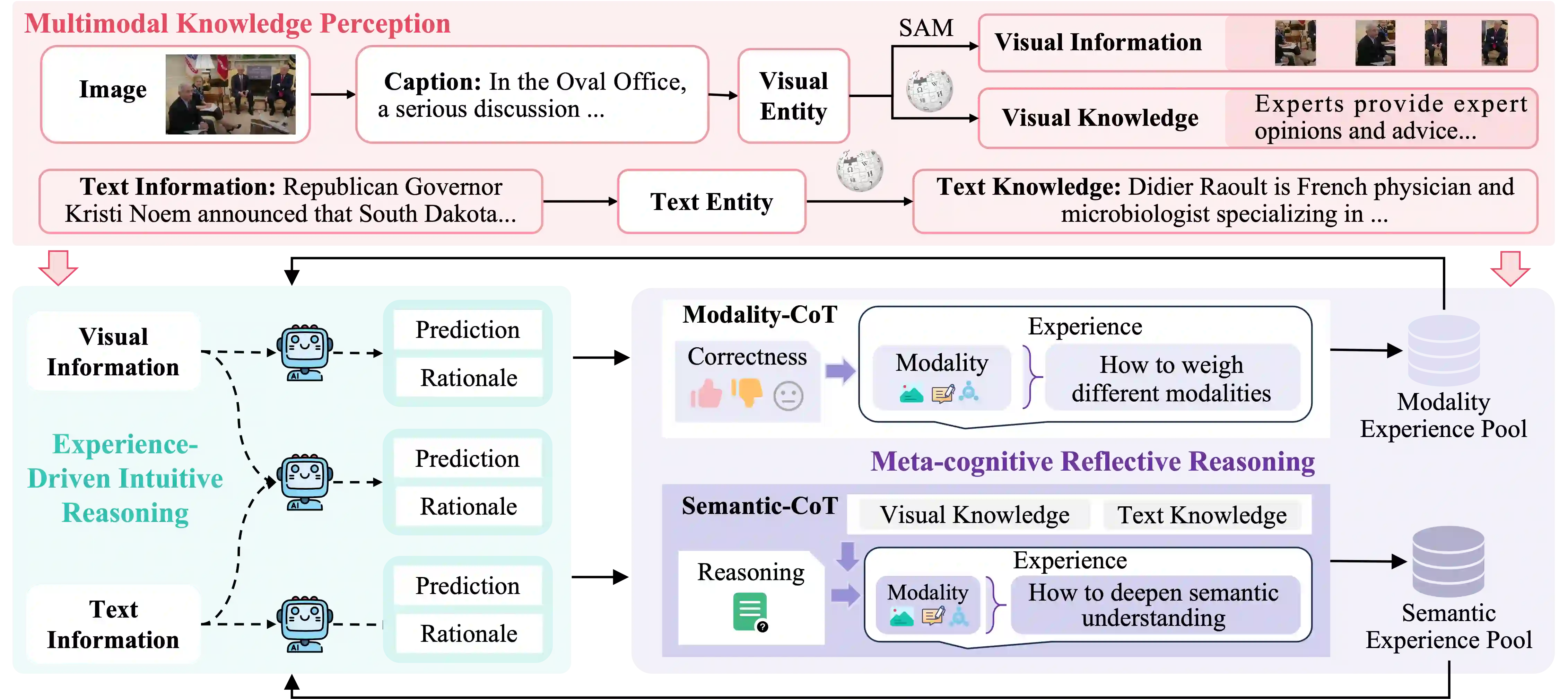

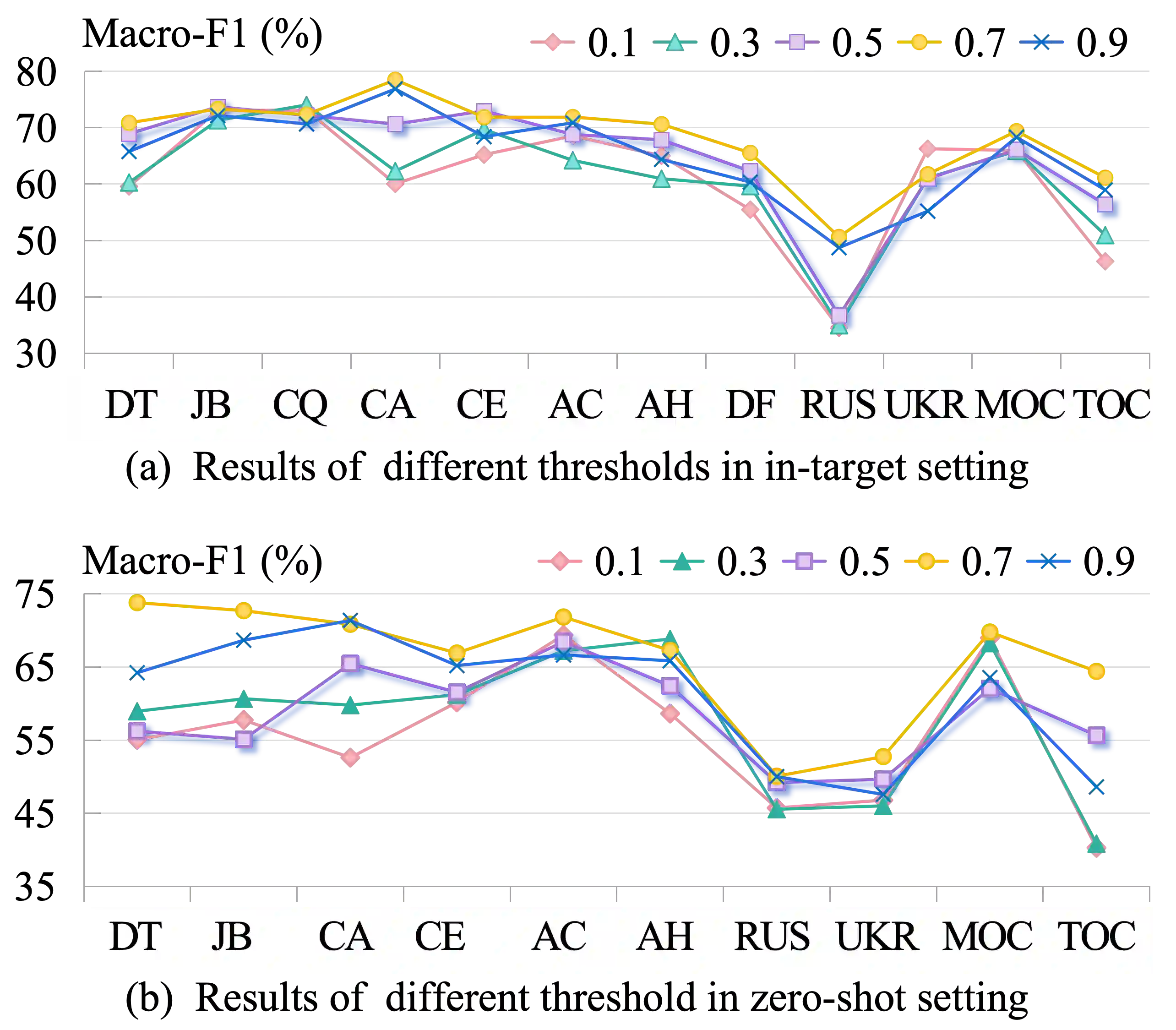

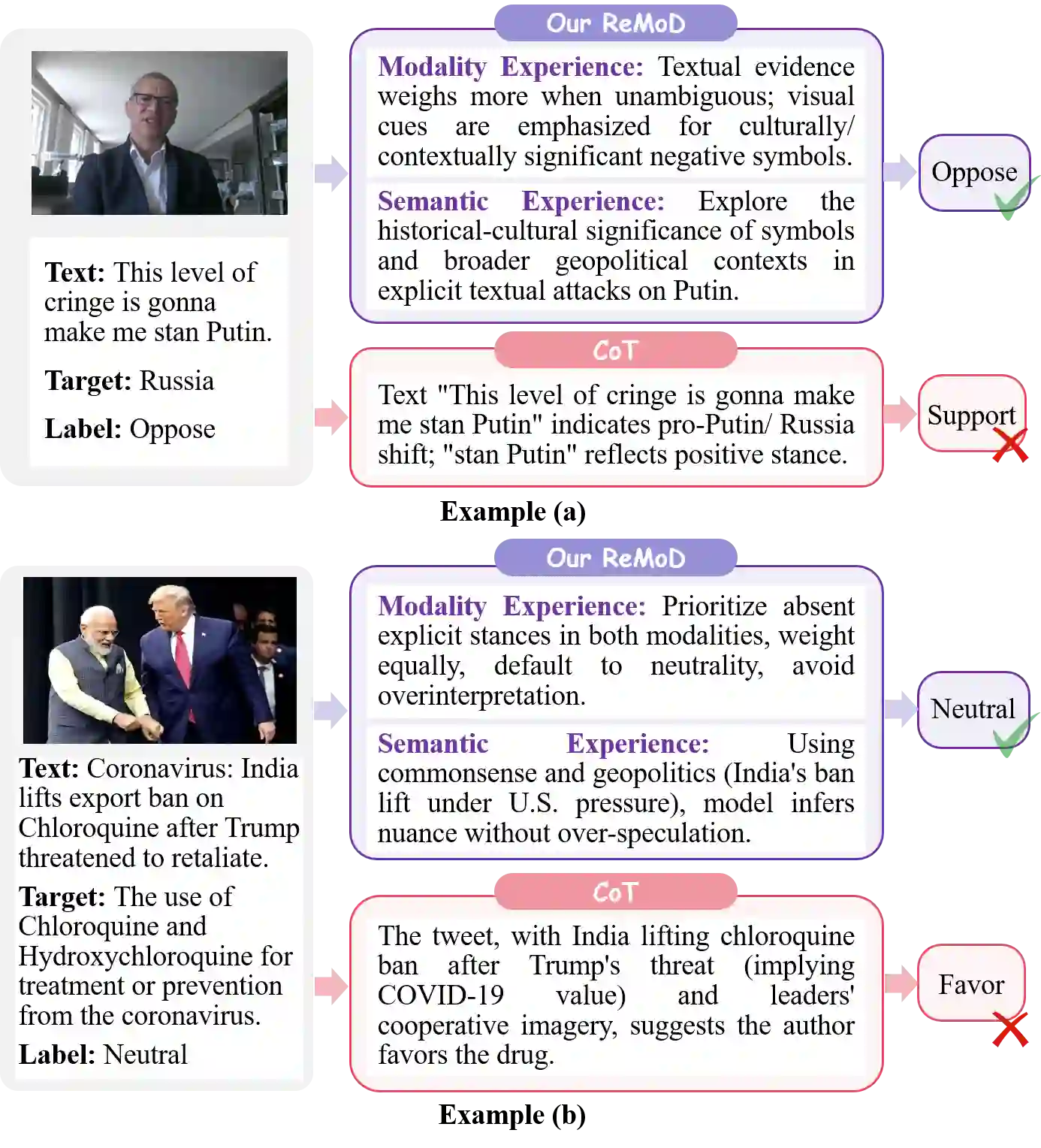

Multimodal Stance Detection (MSD) is a crucial task for understanding public opinion on social media. Existing methods predominantly learn to fuse modalities, but they lack an explicit reasoning process for discerning how inter-modal dynamics, such as irony or conflict, collectively shape the user's final stance. This leads to frequent misjudgments. To address this, we advocate a paradigm shift from *learning to fuse* to *learning to reason*. We introduce **MIND**, a **M**eta-cognitive **I**ntuitive-reflective **N**etwork for **D**ual-reasoning. Inspired by the dual-process theory of human cognition, MIND operationalizes a self-improving loop. It first generates a rapid, intuitive hypothesis by querying evolving Modality and Semantic Experience Pools. A meta-cognitive reflective stage then uses Modality-CoT and Semantic-CoT to scrutinize this initial judgment, distill superior adaptive strategies, and evolve the experience pools themselves. These dual experience structures are continuously refined during training and recalled at inference to guide robust, context-aware stance decisions. Extensive experiments on the MMSD benchmark demonstrate that MIND significantly outperforms most baseline models and exhibits strong generalization.
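The intuitive-then-reflective loop described above can be illustrated with a toy sketch. This is not the MIND implementation: the abstract does not specify how the pools are stored or queried, so the `Experience` record, cosine-similarity recall, the `"neutral"` fallback, and the rule of updating the pool only on a wrong guess are all assumptions made for illustration. In particular, the free-text `strategy` string merely stands in for whatever Modality-CoT or Semantic-CoT would distill, and a single pool class is used where the paper describes separate Modality and Semantic pools.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Experience:
    # Hypothetical record layout; the abstract does not define one.
    embedding: list
    stance: str
    strategy: str  # placeholder for a distilled reasoning strategy


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class ExperiencePool:
    items: list = field(default_factory=list)

    def recall(self, query, k=1):
        """Return the k stored experiences most similar to the query."""
        ranked = sorted(self.items, key=lambda e: cosine(query, e.embedding), reverse=True)
        return ranked[:k]

    def evolve(self, experience):
        """Add a new experience to the pool."""
        self.items.append(experience)


def intuitive_guess(pool, query, default="neutral"):
    """Fast path: reuse the stance of the nearest recalled experience."""
    recalled = pool.recall(query, k=1)
    return recalled[0].stance if recalled else default


def reflect(pool, query, guess, gold, strategy):
    """Slow path (assumed rule): if the guess was wrong, store a corrected experience.

    Returns True when the pool was updated.
    """
    if guess == gold:
        return False
    pool.evolve(Experience(list(query), gold, strategy))
    return True


# One training step followed by one inference step.
pool = ExperiencePool()
train_sample = [1.0, 0.0]
first_guess = intuitive_guess(pool, train_sample)
updated = reflect(pool, train_sample, first_guess, "against",
                  "image contradicts text; read the caption as ironic")
later_guess = intuitive_guess(pool, [0.9, 0.1])
```

In this sketch the pool starts empty, so the first guess falls back to the default; after reflection stores the corrected experience, a similar query at inference recalls it. A real system would replace the nearest-neighbour vote and the hand-written strategy with learned components.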
