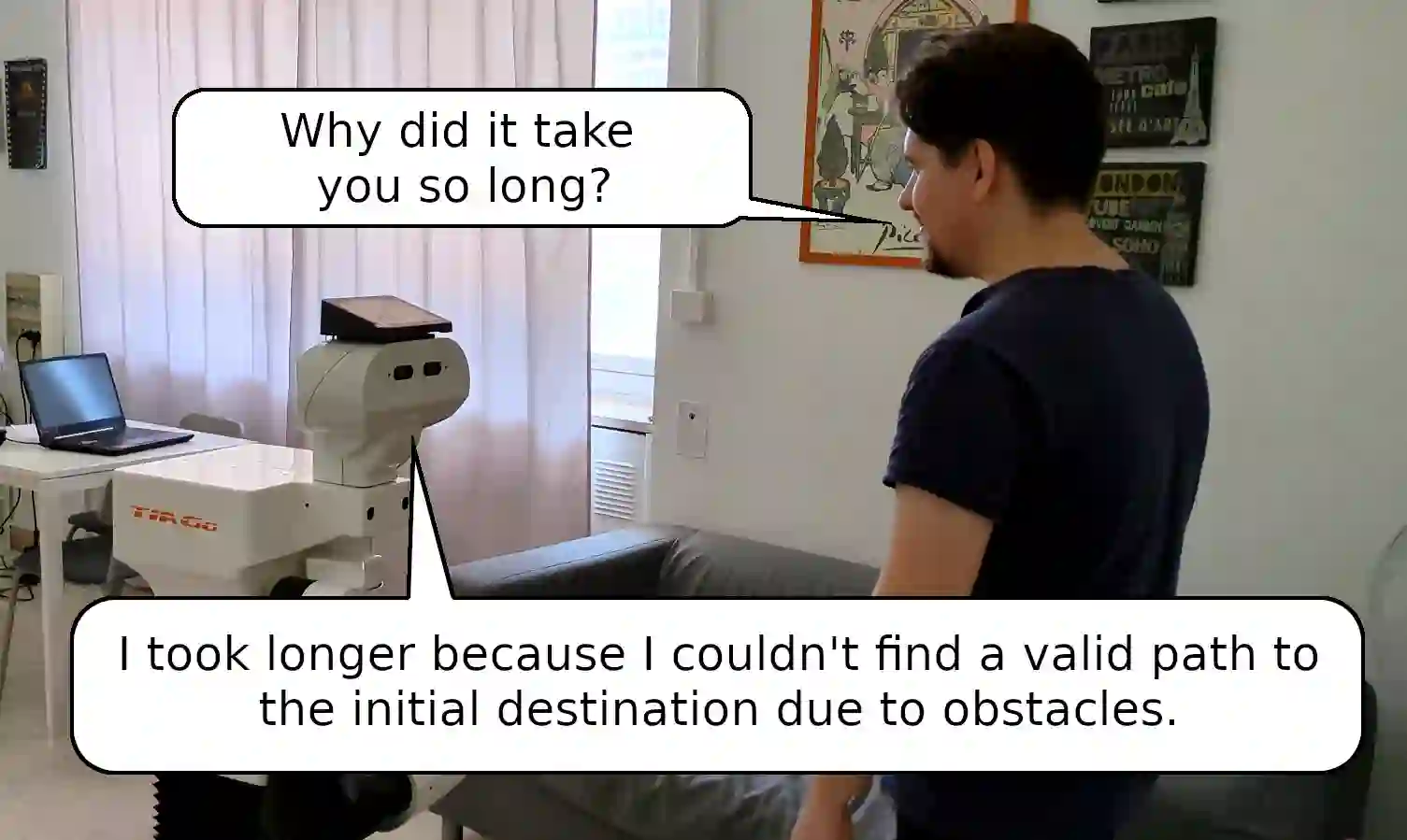

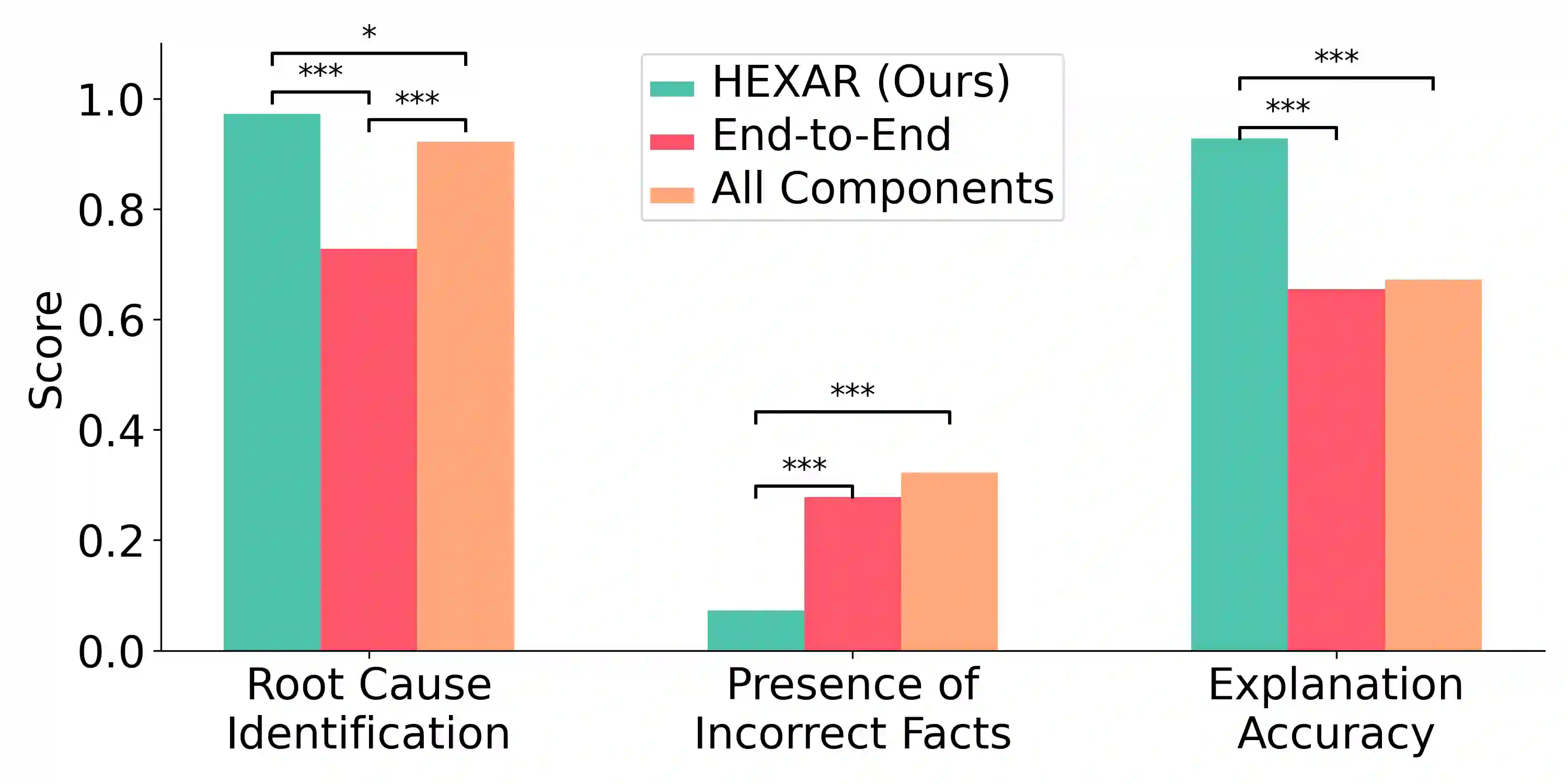

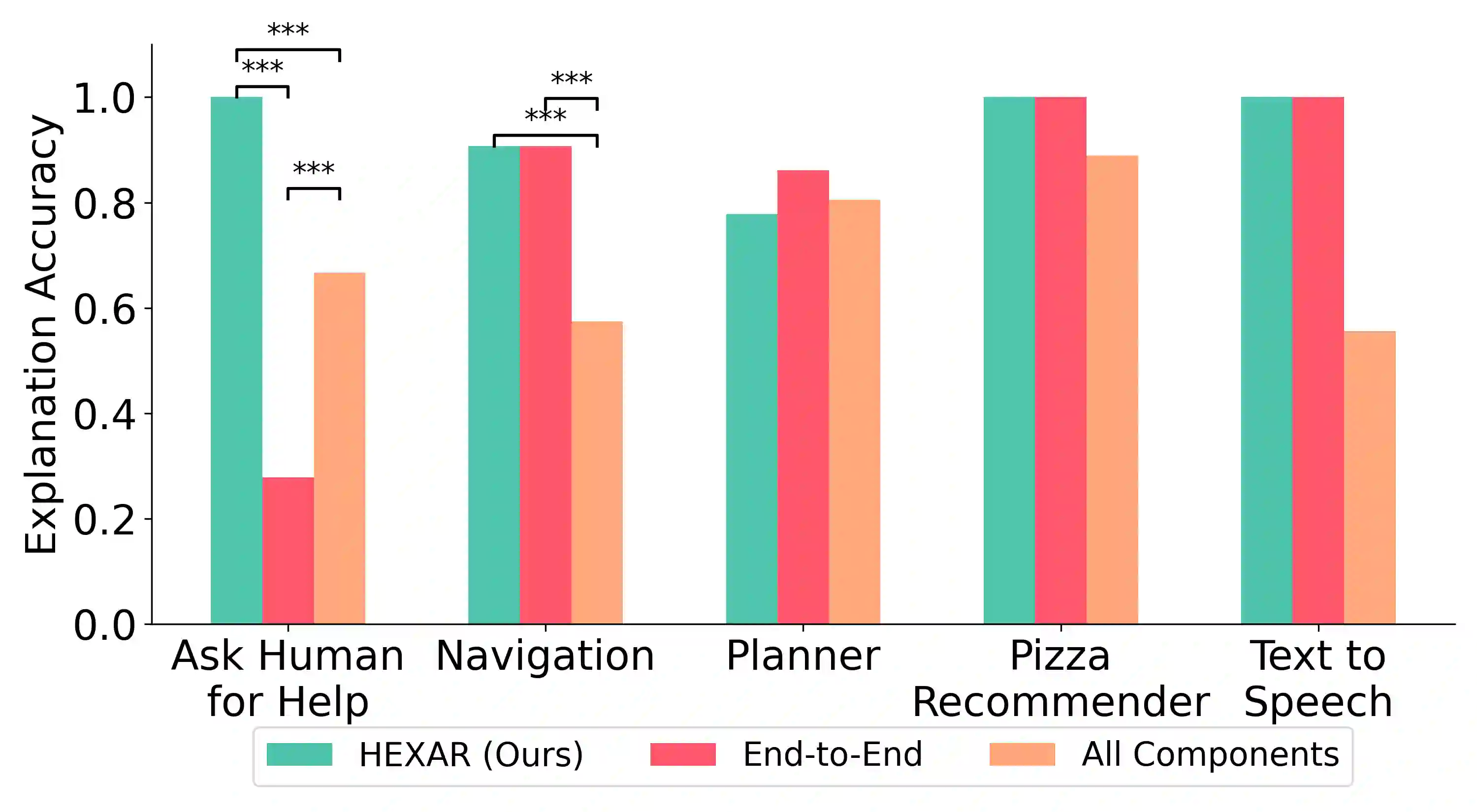

As robotic systems become increasingly complex, explainable decision-making becomes critical. Existing explainability approaches in robotics typically either focus on individual modules, which can be difficult to query from the perspective of high-level behaviour, or employ monolithic approaches, which do not exploit the modularity of robotic architectures. We present HEXAR (Hierarchical EXplainability Architecture for Robots), a novel framework that provides a plug-in, hierarchical approach to generating explanations about robotic systems. HEXAR consists of specialised component explainers that use diverse explanation techniques (e.g., LLM-based reasoning, causal models, feature importance), each tailored to a specific robot module, orchestrated by an explainer selector that chooses the most appropriate explainer for a given query. We implement and evaluate HEXAR on a TIAGo robot performing assistive tasks in a home environment, comparing it against end-to-end and aggregated baseline approaches across 180 scenario-query variations. We observe that HEXAR significantly outperforms the baselines in root cause identification, incorrect information exclusion, and runtime, offering a promising direction for transparent autonomous systems.
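The plug-in structure described above, with per-module explainers behind a selector, can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the names `ComponentExplainer`, `ExplainerSelector` and `build_demo_selector`, the keyword-overlap routing rule, and the canned explanation strings are hypothetical and are not taken from HEXAR, whose actual selector and explainers are not specified in this abstract.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ComponentExplainer:
    """Hypothetical explainer attached to a single robot module."""
    module: str
    keywords: List[str]            # illustrative routing hints (assumption)
    explain: Callable[[str], str]  # stand-in for an LLM, causal model, etc.


class ExplainerSelector:
    """Routes a query to the registered explainer with the most keyword hits.

    Keyword overlap is a placeholder rule chosen for this sketch only.
    """

    def __init__(self) -> None:
        self._explainers: Dict[str, ComponentExplainer] = {}

    def register(self, explainer: ComponentExplainer) -> None:
        # Plug-in style: adding a module only requires registering its explainer.
        self._explainers[explainer.module] = explainer

    def select(self, query: str) -> ComponentExplainer:
        if not self._explainers:
            raise LookupError("no explainers registered")
        words = set(query.lower().replace("?", " ").split())
        # Ties resolve to the explainer that was registered first.
        return max(
            self._explainers.values(),
            key=lambda e: len(words & set(e.keywords)),
        )

    def answer(self, query: str) -> str:
        chosen = self.select(query)
        return f"[{chosen.module}] {chosen.explain(query)}"


def build_demo_selector() -> ExplainerSelector:
    """Builds a selector with two made-up explainers for demonstration."""
    selector = ExplainerSelector()
    selector.register(ComponentExplainer(
        module="navigation",
        keywords=["path", "stop", "moving", "obstacle"],
        explain=lambda q: "An obstacle blocked the planned path.",
    ))
    selector.register(ComponentExplainer(
        module="manipulation",
        keywords=["grasp", "pick", "drop", "object"],
        explain=lambda q: "The grasp failed because the object slipped.",
    ))
    return selector


if __name__ == "__main__":
    demo = build_demo_selector()
    print(demo.answer("Why did you stop moving?"))
    print(demo.answer("Why did you drop the object?"))
```

In this sketch the query is handled by exactly one component explainer rather than by a single monolithic model, which is the property the abstract contrasts with end-to-end and aggregated baselines; a real selector would likely use something more robust than keyword matching.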
