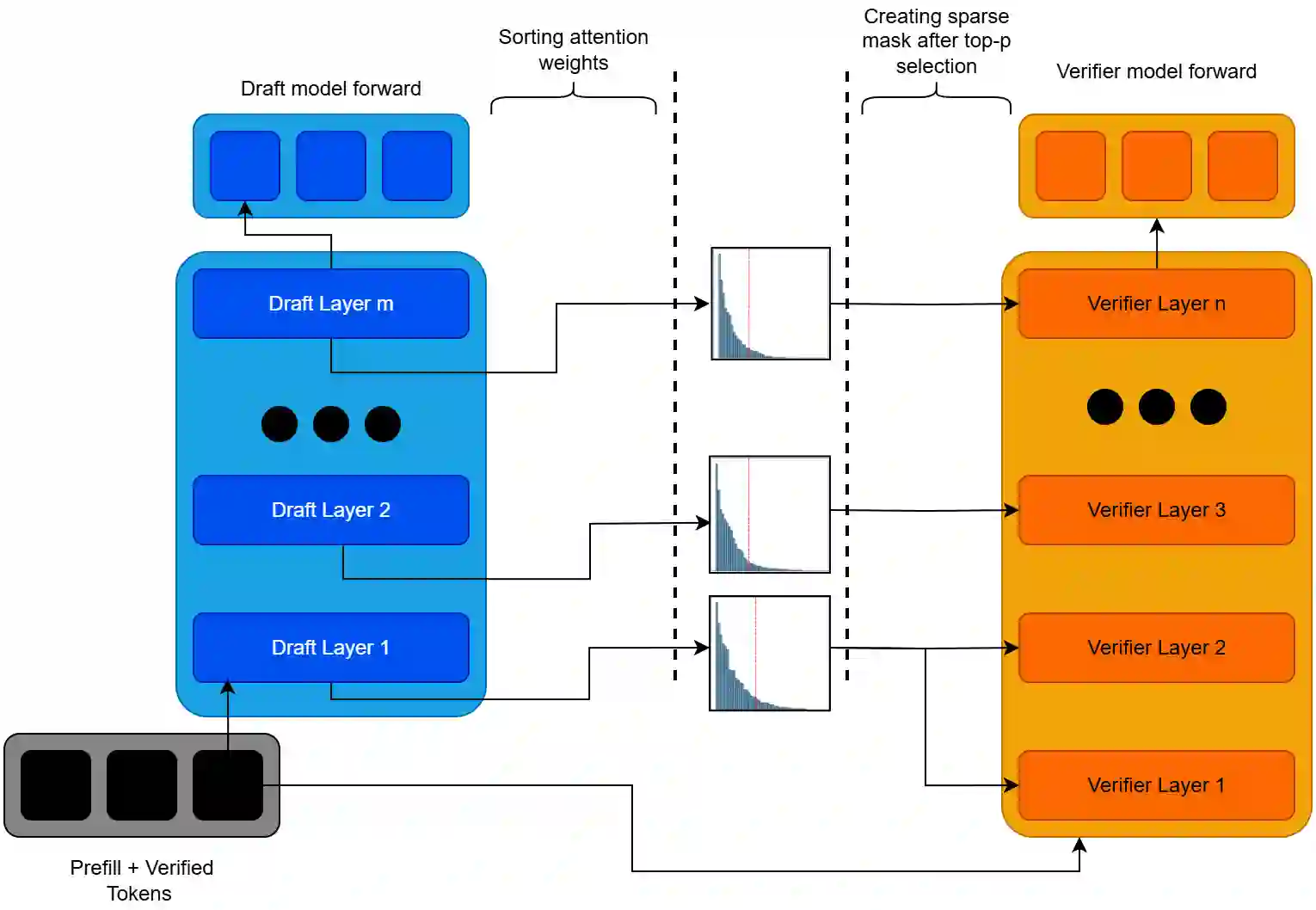

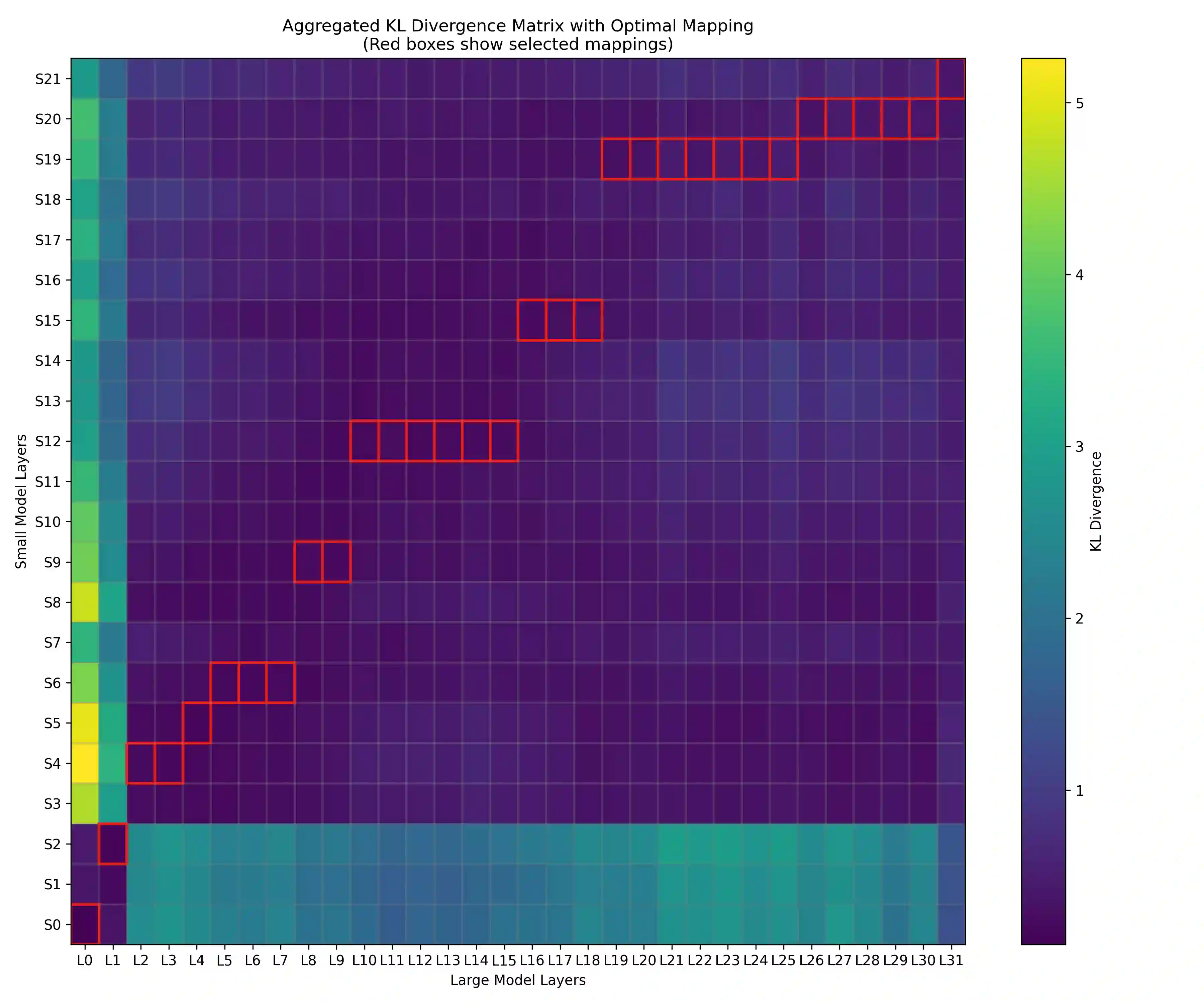

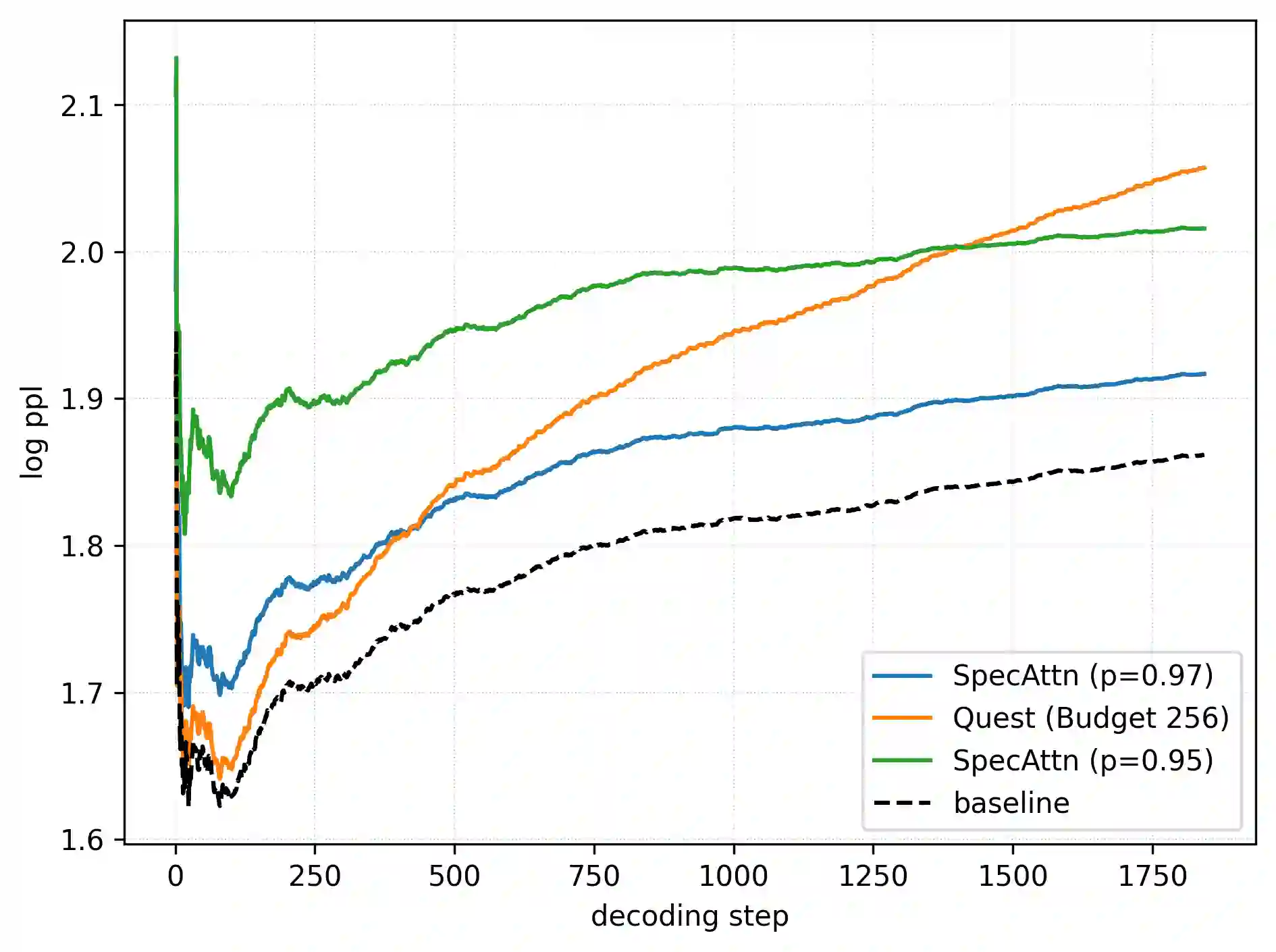

Large Language Models (LLMs) face significant computational bottlenecks during inference due to the quadratic complexity of self-attention mechanisms, particularly as context lengths increase. We introduce SpecAttn, a novel training-free approach that seamlessly integrates with existing speculative decoding techniques to enable efficient sparse attention in pre-trained transformers. Our key insight is to exploit the attention weights already computed by the draft model during speculative decoding to identify important tokens for the target model, eliminating redundant computation while maintaining output quality. SpecAttn employs three core techniques: KL divergence-based layer alignment between draft and target models, a GPU-optimized sorting-free algorithm for top-p token selection from draft attention patterns, and dynamic key-value cache pruning guided by these predictions. By leveraging the computational work already performed in standard speculative decoding pipelines, SpecAttn achieves over 75% reduction in key-value cache accesses with a mere 15.29% increase in perplexity on the PG-19 dataset, significantly outperforming existing sparse attention methods. Our approach demonstrates that speculative execution can be enhanced to provide approximate verification without significant performance degradation.

翻译:大型语言模型(LLMs)在推理过程中面临显著的计算瓶颈,这源于自注意力机制的二次复杂度,尤其是在上下文长度增加时。我们提出了SpecAttn,一种无需训练的新方法,能够无缝集成到现有的推测解码技术中,从而在预训练的Transformer模型中实现高效的稀疏注意力。我们的核心洞见是利用推测解码过程中草稿模型已计算出的注意力权重,为目标模型识别重要标记,从而在保持输出质量的同时消除冗余计算。SpecAttn采用三种核心技术:基于KL散度的草稿与目标模型层对齐、针对GPU优化的无排序算法用于从草稿注意力模式中选取top-p标记,以及基于这些预测的动态键值缓存剪枝。通过利用标准推测解码流程中已执行的计算工作,SpecAttn在PG-19数据集上实现了超过75%的键值缓存访问减少,而困惑度仅增加15.29%,显著优于现有的稀疏注意力方法。我们的研究表明,推测执行可以通过增强来提供近似验证,而不会导致显著的性能下降。