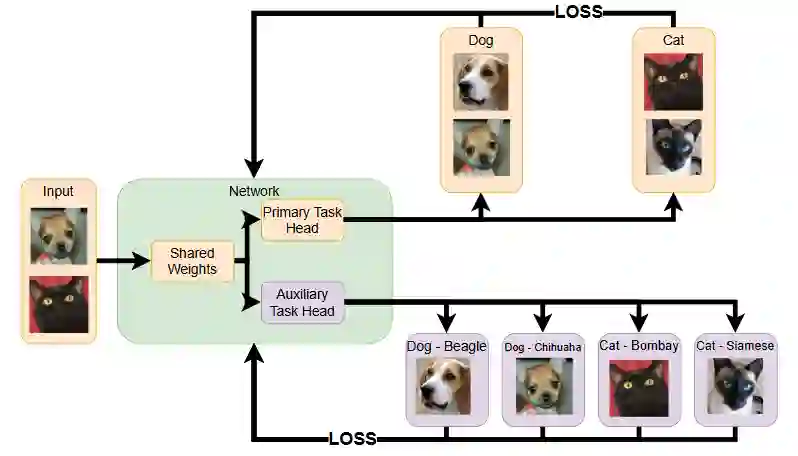

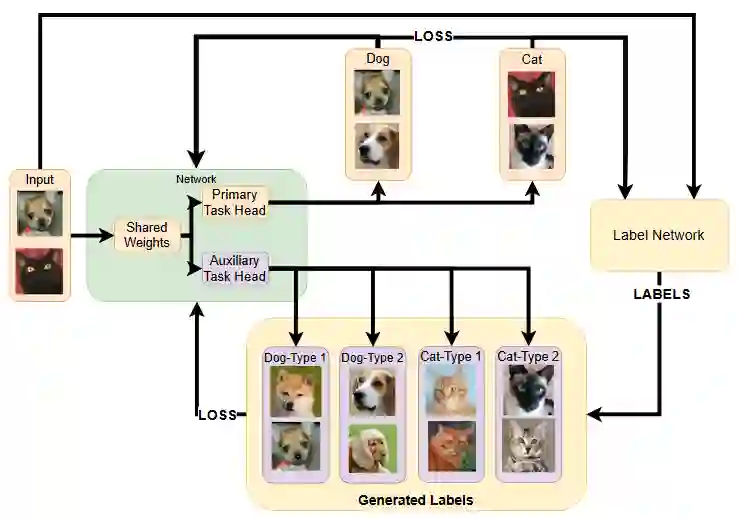

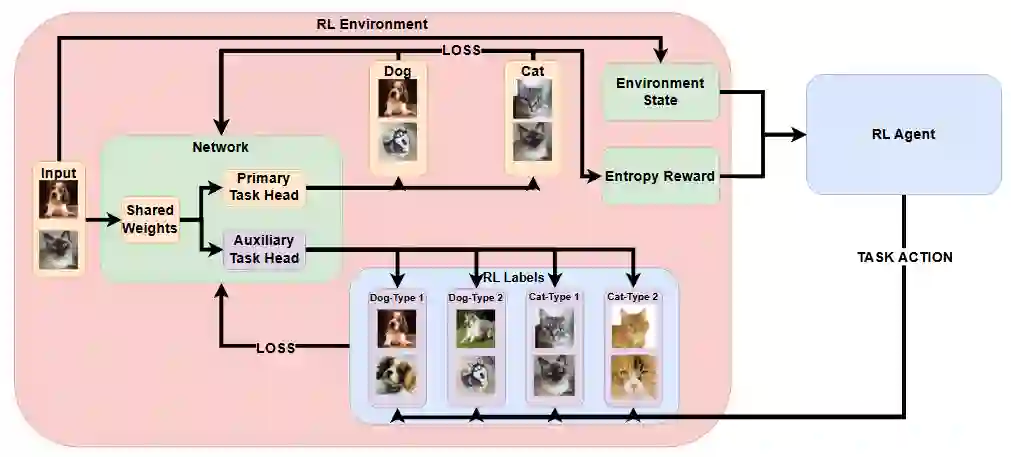

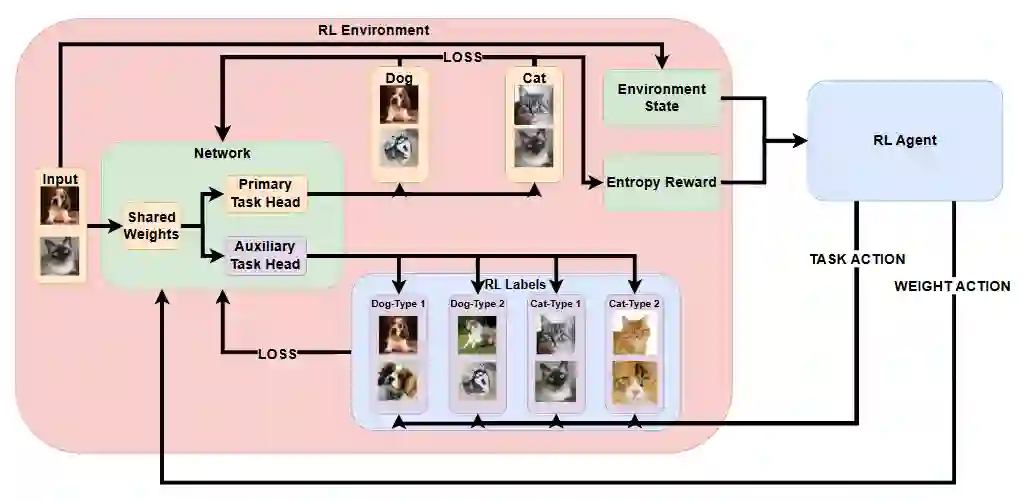

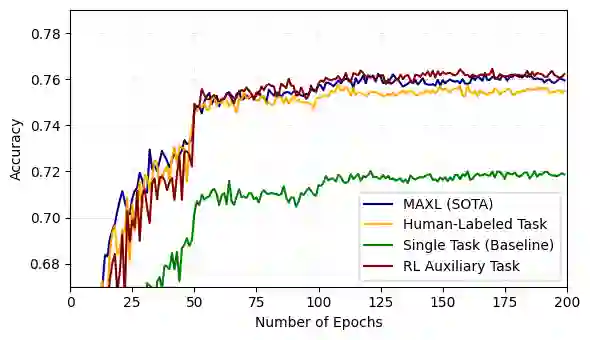

Auxiliary Learning (AL) is a form of multi-task learning in which a model trains on auxiliary tasks to boost performance on a primary objective. While AL has improved generalization across domains such as navigation, image classification, and NLP, it often depends on human-labeled auxiliary tasks that are costly to design and require domain expertise. Meta-learning approaches mitigate this by learning to generate auxiliary tasks, but they typically rely on gradient-based bi-level optimization, which adds substantial computational and implementation overhead. We propose RL-AUX, a reinforcement-learning (RL) framework that dynamically creates auxiliary tasks by assigning auxiliary labels to each training example and rewards the agent whenever its selections improve performance on the primary task. We also explore learning per-example weights for the auxiliary loss. On CIFAR-100 grouped into 20 superclasses, our RL method outperforms human-labeled auxiliary tasks and matches the performance of a prominent bi-level optimization baseline. We present similarly strong results on other classification datasets. These results suggest that RL is a viable path to generating effective auxiliary tasks.
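The label-assignment loop described above can be illustrated with a minimal sketch. It is not the RL-AUX implementation: the abstract does not say which RL algorithm, policy architecture, or reward definition is used. The sketch assumes a tabular softmax policy with one row of logits per training example, a plain REINFORCE update, and a reward equal to the drop in primary-task loss. All function names (`sample_aux_labels`, `reinforce_update`, `improvement_reward`) are hypothetical.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for stability."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def sample_aux_labels(logits, rng):
    """Sample one auxiliary label per example from a per-example policy.

    logits: array of shape (num_examples, num_aux_classes).
    Returns the sampled labels and the policy probabilities.
    """
    probs = softmax(logits)
    cdf = probs.cumsum(axis=-1)
    u = rng.random((logits.shape[0], 1))
    # Inverse-CDF sampling: count how many CDF entries lie below u.
    labels = (u > cdf).sum(axis=-1)
    # Guard against floating-point round-off in the last CDF entry.
    labels = np.minimum(labels, logits.shape[1] - 1)
    return labels, probs


def improvement_reward(loss_before, loss_after):
    """Assumed reward: positive when the primary-task loss went down."""
    return loss_before - loss_after


def reinforce_update(logits, labels, probs, reward, lr=0.1):
    """One REINFORCE step on the policy logits.

    For a softmax policy, the gradient of log pi(label) with respect to
    the logits is onehot(label) - probs, scaled here by the scalar reward.
    """
    onehot = np.eye(logits.shape[1])[labels]
    return logits + lr * reward * (onehot - probs)
```

In a full training loop, the primary model would be trained for a step using the sampled auxiliary labels, the primary-task loss would be measured before and after, and the resulting reward would be passed to `reinforce_update`. A positive reward raises the probability of the labels just chosen, and a negative reward lowers it.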
