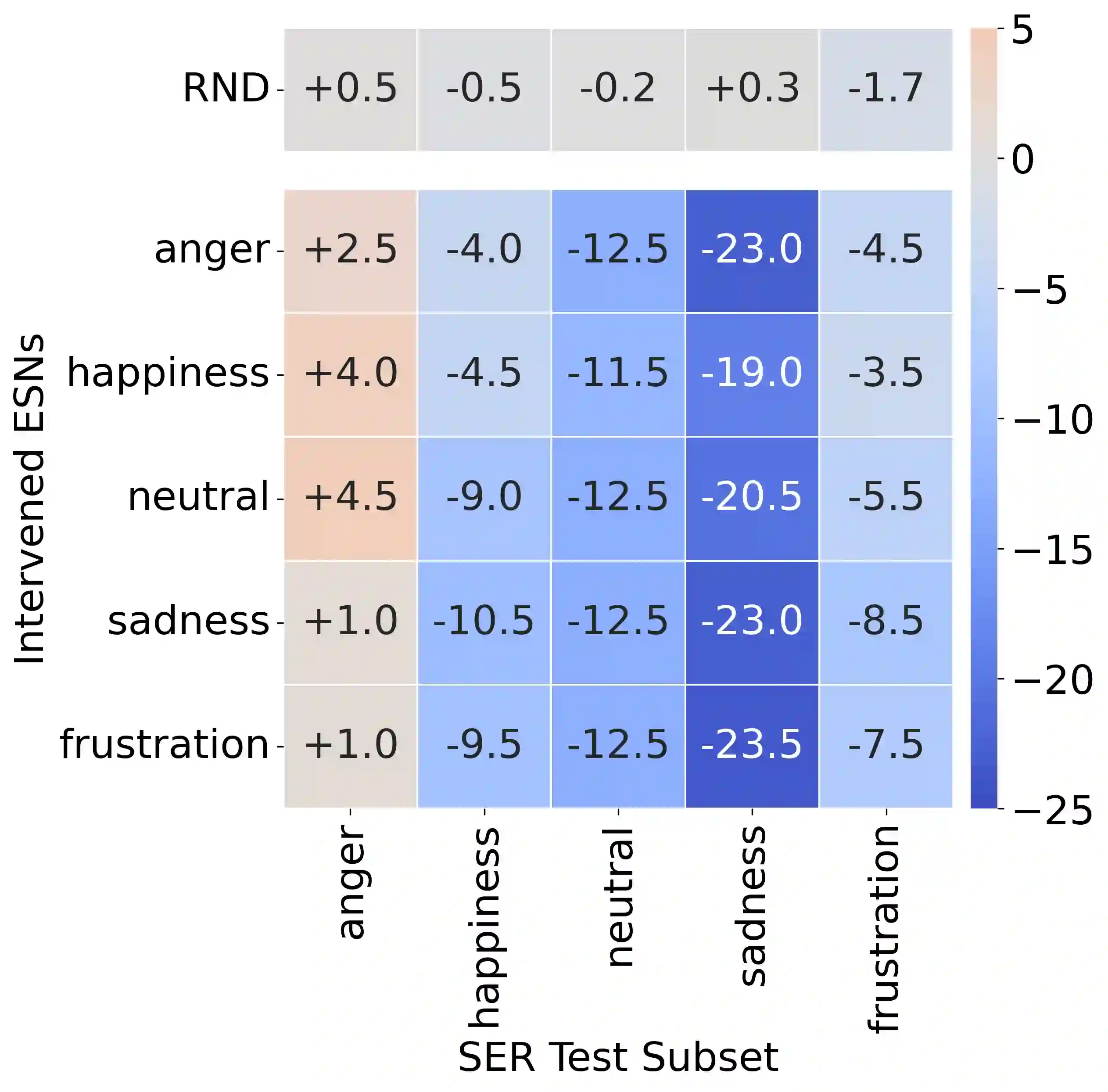

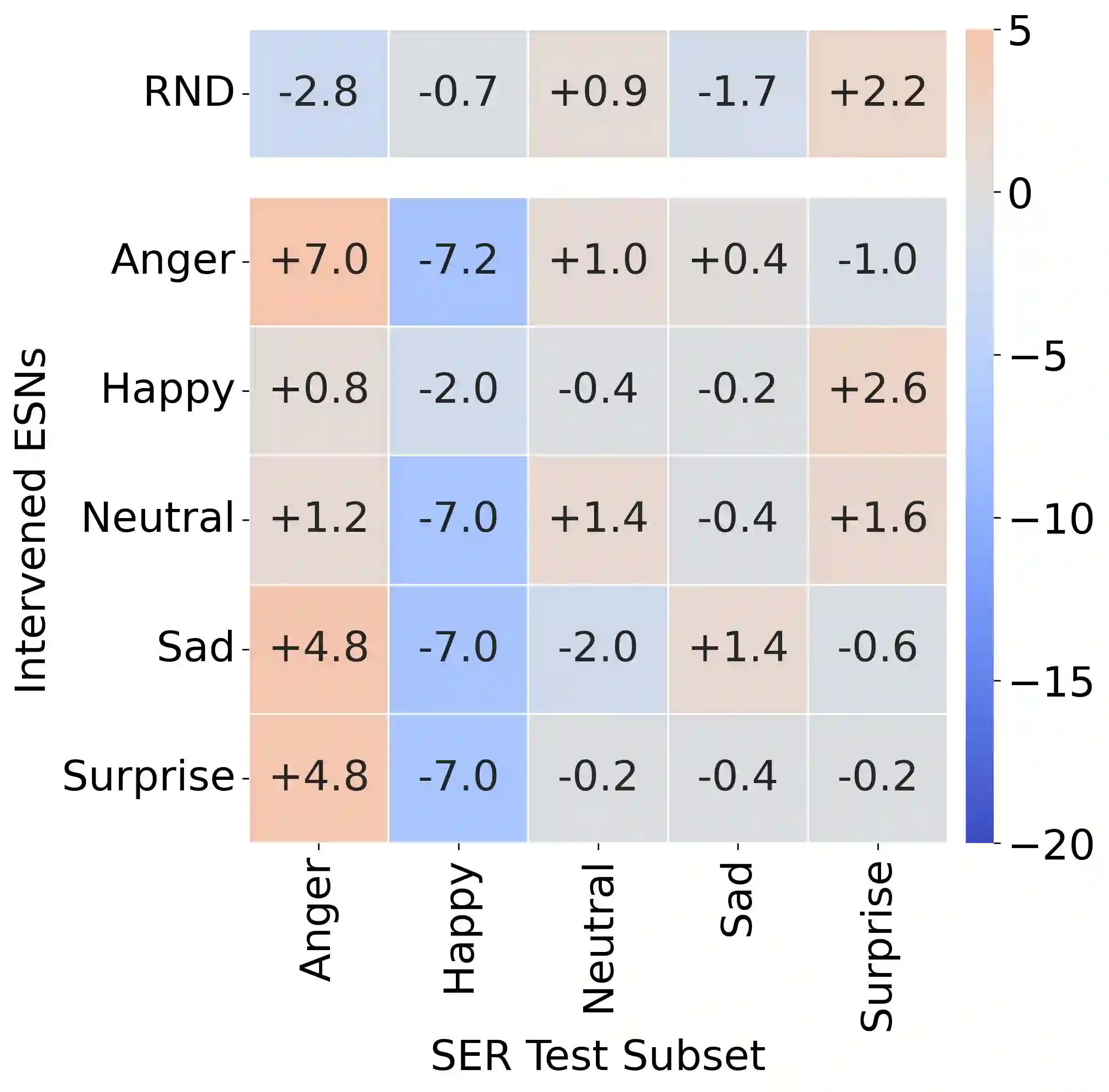

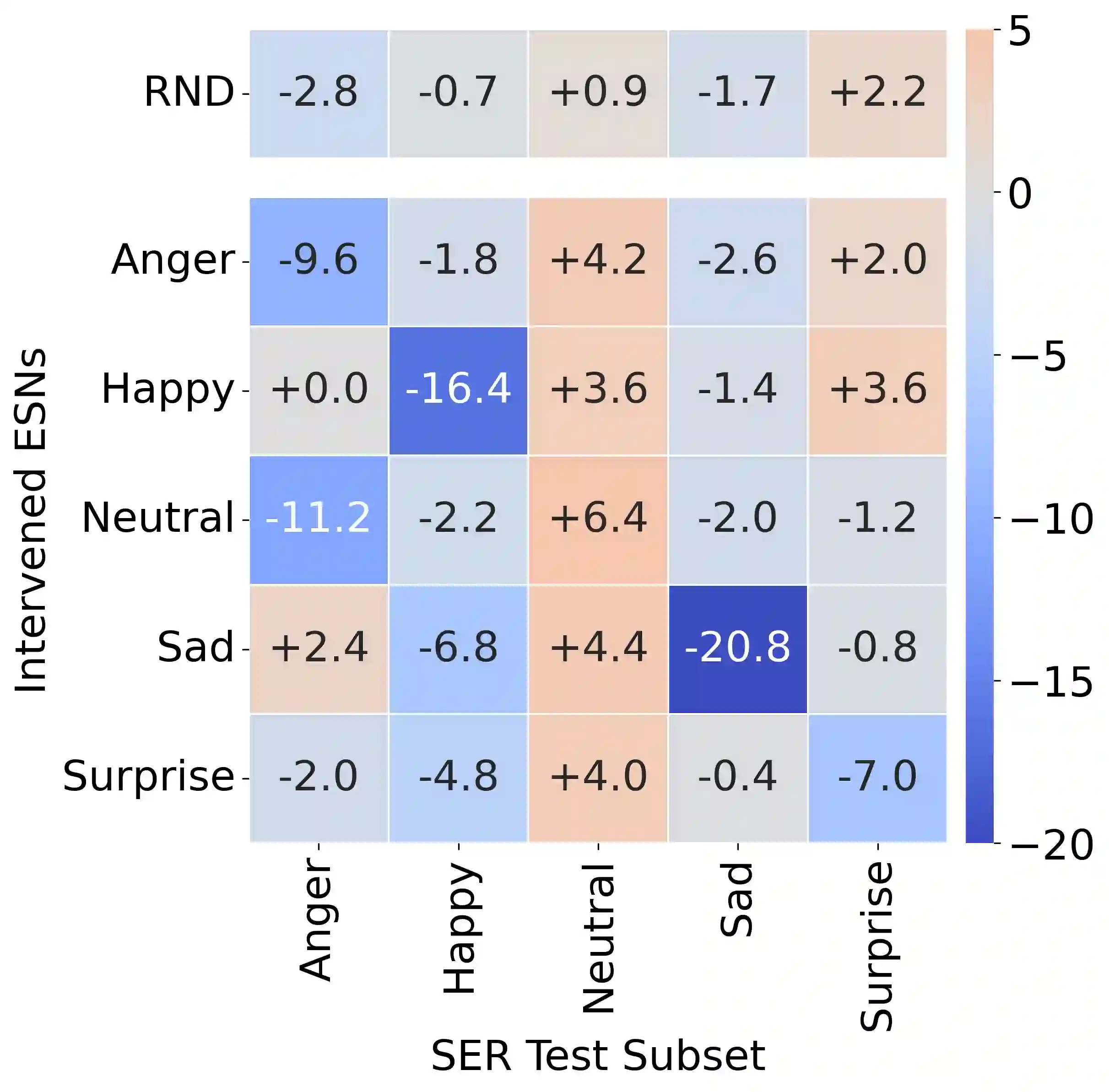

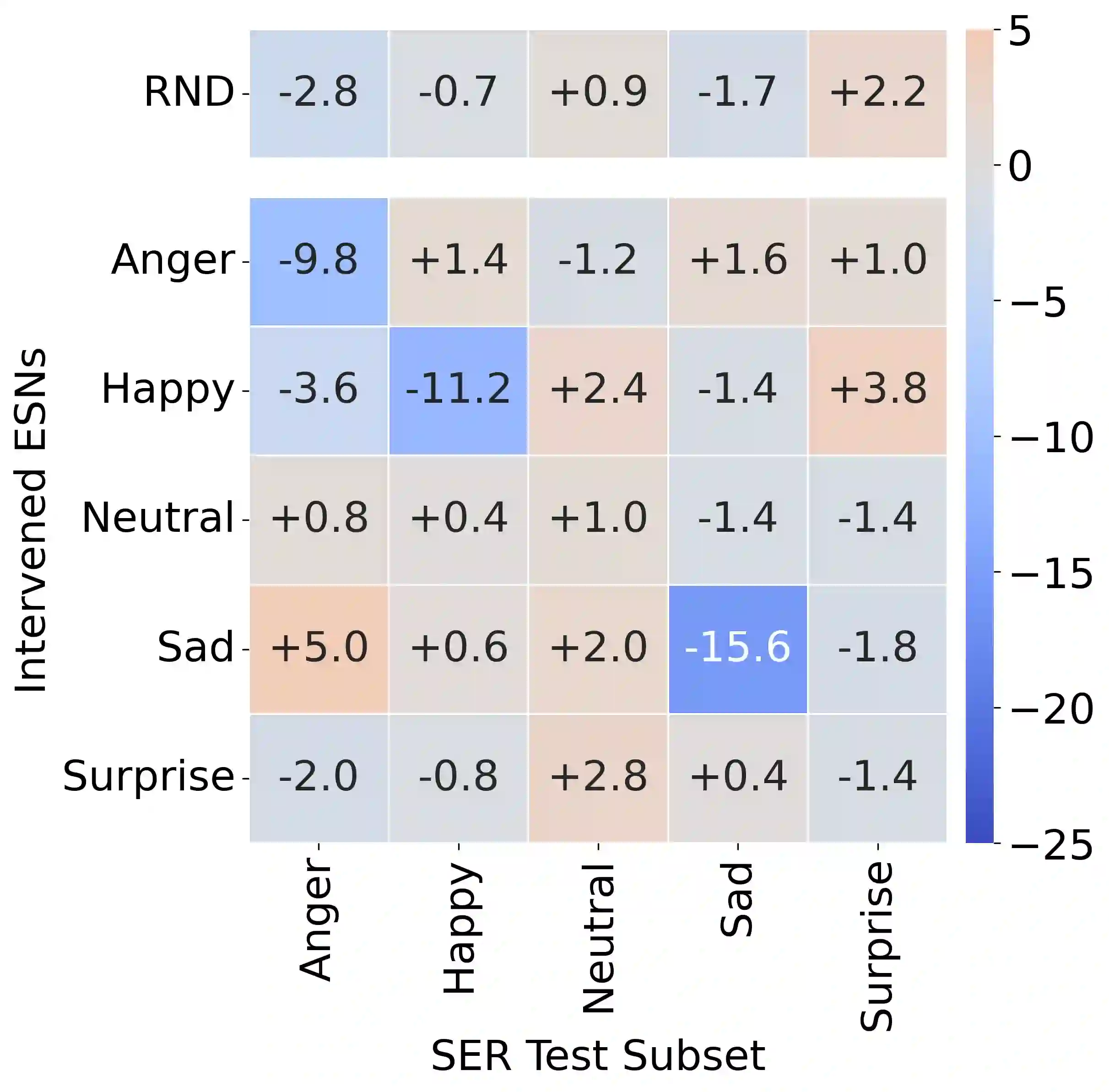

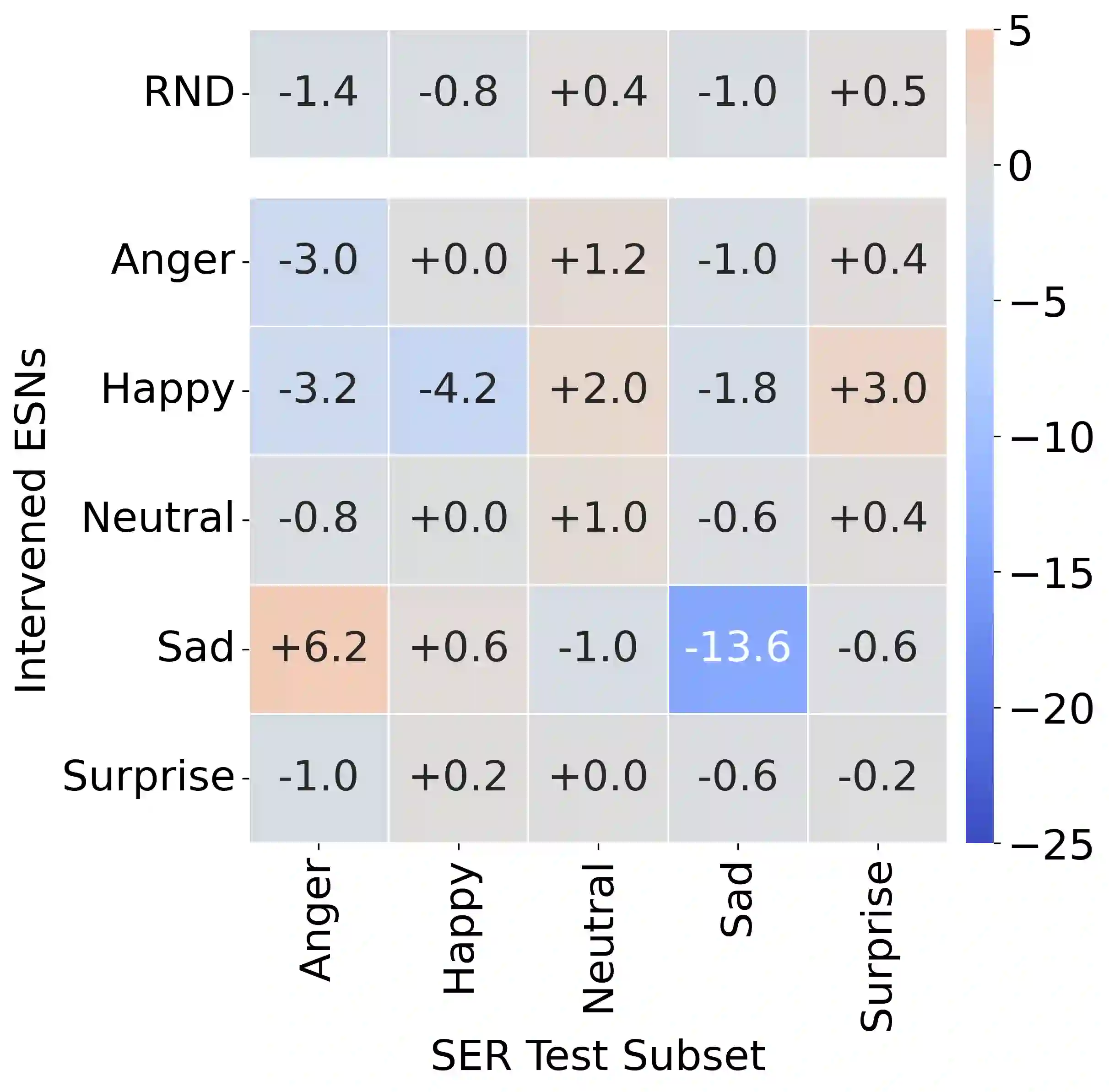

Emotion is a central dimension of spoken communication, yet we still lack a mechanistic account of how modern large audio-language models (LALMs) encode it internally. We present the first neuron-level interpretability study of emotion-sensitive neurons (ESNs) in LALMs and provide causal evidence that such units exist in Qwen2.5-Omni, Kimi-Audio, and Audio Flamingo 3. Across these three widely used open-source models, we compare frequency-, entropy-, magnitude-, and contrast-based neuron selectors on multiple emotion recognition benchmarks. Using inference-time interventions, we reveal a consistent emotion-specific signature: ablating neurons selected for a given emotion disproportionately degrades recognition of that emotion while largely preserving other classes, whereas gain-based amplification steers predictions toward the target emotion. These effects arise with modest identification data and scale systematically with intervention strength. We further observe that ESNs exhibit non-uniform layer-wise clustering with partial cross-dataset transfer. Taken together, our results offer a causal, neuron-level account of emotion decisions in LALMs and highlight targeted neuron interventions as an actionable handle for controllable affective behaviors.

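The two inference-time interventions named in the abstract, ablation and gain-based amplification, can both be read as scaling a chosen set of hidden units by a single factor. The following is a minimal sketch of that idea on a toy linear readout, not the authors' implementation: the function names `intervene` and `readout`, the activation values, the weight matrix, and the `angry_units` index list are all hypothetical and chosen only for illustration.

```python
from typing import Iterable, List


def intervene(activations: List[float], neuron_ids: Iterable[int], gain: float) -> List[float]:
    """Return a copy of `activations` with the selected units scaled by `gain`.

    gain = 0.0 ablates the units; gain > 1.0 amplifies them.
    """
    selected = set(neuron_ids)
    return [a * gain if i in selected else a for i, a in enumerate(activations)]


def readout(activations: List[float], weights: List[List[float]]) -> List[float]:
    """Toy linear readout: one logit per emotion class."""
    return [sum(w * a for w, a in zip(row, activations)) for row in weights]


# Hypothetical toy values, not taken from any model in the study.
hidden = [1.0, 2.0, 0.5, 1.5]
weights = [
    [1.0, 0.0, 0.0, 1.0],  # class 0 ("angry") reads units 0 and 3
    [0.0, 1.0, 1.0, 0.0],  # class 1 ("happy") reads units 1 and 2
]
angry_units = [0, 3]  # assumed output of some neuron selector

baseline = readout(hidden, weights)
ablated = readout(intervene(hidden, angry_units, 0.0), weights)
amplified = readout(intervene(hidden, angry_units, 2.0), weights)
```

In this toy setting, ablating the selected units lowers only the logit of the class that depends on them, and a gain above 1 raises that same logit while leaving the other class unchanged. In a real LALM the same scaling would presumably be applied to intermediate activations during the forward pass, and the effect on other classes would not be exactly zero.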