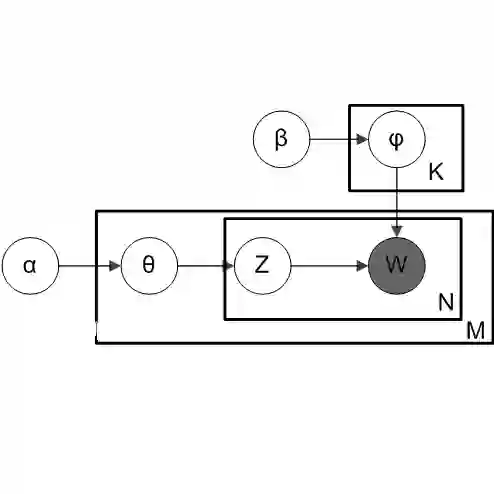

We show that for unconstrained Deep Linear Discriminant Analysis (LDA) classifiers, maximum-likelihood training admits pathological solutions in which class means drift together, covariances collapse, and the learned representation becomes almost non-discriminative. By contrast, cross-entropy training yields excellent accuracy but decouples the head from the underlying generative model, leading to highly inconsistent parameter estimates. To reconcile generative structure with discriminative performance, we introduce the *Discriminative Negative Log-Likelihood* (DNLL) loss, which augments the LDA log-likelihood with a simple penalty on the mixture density. DNLL can be interpreted as the standard LDA NLL plus a term that explicitly discourages regions where several classes are simultaneously likely. Deep LDA trained with DNLL produces clean, well-separated latent spaces, matches the test accuracy of softmax classifiers on synthetic data and standard image benchmarks, and yields substantially better-calibrated predictive probabilities, restoring a coherent probabilistic interpretation to deep discriminant models.
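As a rough illustration of the "NLL plus mixture-density penalty" reading, the sketch below computes one plausible form of such a loss in NumPy. The abstract does not give the exact formula, so everything here is an assumption made for illustration: the penalty is taken to be the mixture density itself, weighted by a hypothetical coefficient `lam`; the shared covariance is assumed spherical (`var * I`); and the function names `lda_log_joint` and `dnll_loss` are invented for this example.

```python
import numpy as np

def lda_log_joint(z, means, log_priors, var=1.0):
    """Return log pi_c + log N(z | mu_c, var * I) with shape (n_samples, n_classes).

    Assumption: a single spherical covariance shared by all classes.
    """
    d = z.shape[1]
    # Squared distance from every embedding to every class mean: (n, C).
    sq_dist = ((z[:, None, :] - means[None, :, :]) ** 2).sum(axis=-1)
    log_gauss = -0.5 * (sq_dist / var + d * np.log(2.0 * np.pi * var))
    return log_priors[None, :] + log_gauss

def dnll_loss(z, y, means, log_priors, lam=1.0, var=1.0):
    """Hypothetical DNLL-style loss: LDA NLL plus lam times the mixture density."""
    log_joint = lda_log_joint(z, means, log_priors, var)
    # Generative NLL of each embedding under its own class: -log p(z, y).
    nll = -log_joint[np.arange(len(y)), y]
    # Mixture density p(z) = sum_c pi_c N(z | mu_c, var * I); it is larger
    # where several class components overlap.
    mixture = np.exp(log_joint).sum(axis=1)
    return float(np.mean(nll + lam * mixture))

# Two classes in 2-D, each embedding sitting exactly on its own class mean.
log_priors = np.log(np.array([0.5, 0.5]))
y = np.array([0, 1])
z_separated = np.array([[-5.0, 0.0], [5.0, 0.0]])
z_collapsed = np.zeros((2, 2))

loss_separated = dnll_loss(z_separated, y, z_separated.copy(), log_priors, lam=5.0)
loss_collapsed = dnll_loss(z_collapsed, y, z_collapsed.copy(), log_priors, lam=5.0)
```

With `lam=0.0` the two configurations have identical loss, since plain NLL cannot distinguish separated means from collapsed ones when every point sits on its own class mean. With `lam > 0` the collapsed configuration is penalized more, because both class components contribute to the mixture density at the same location. In this sketch the penalty is what breaks the tie in favor of separated classes.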
