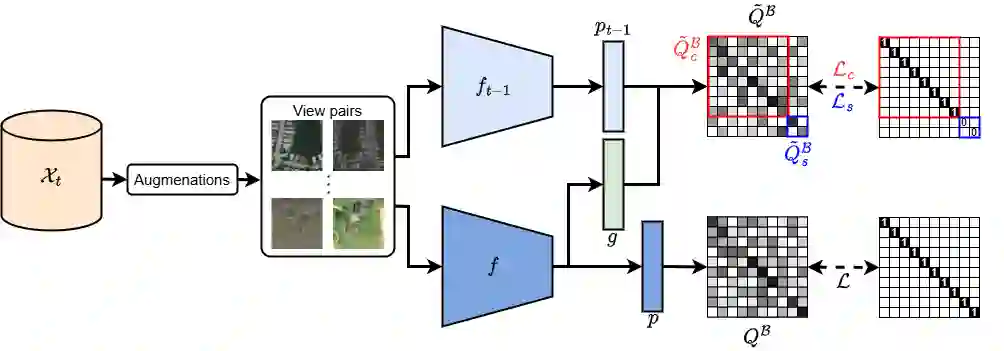

Continual self-supervised learning (CSSL) methods have gained increasing attention in remote sensing (RS) because they can learn new tasks sequentially from continuous streams of unlabeled data. While learning new tasks, existing CSSL methods focus on preventing catastrophic forgetting, and most of them rely on regularization strategies to retain knowledge of previous tasks. This regularization reduces the model's ability to adapt to the data of new tasks (i.e., its learning plasticity), which can degrade performance. To address this problem, we propose a novel CSSL method that learns tasks sequentially while achieving high learning plasticity. The proposed method uses a knowledge distillation strategy with an integrated decoupling mechanism. The decoupling first divides the feature dimensions into task-common and task-specific parts. Then, the task-common features are forced to be correlated to ensure memory stability, while the task-specific features are forced to be decorrelated to facilitate the learning of new features. Experimental results show the effectiveness of the proposed method compared to CaSSLe, a widely used CSSL framework, with improvements of up to 1.12% in average accuracy and 2.33% in intransigence in a task-incremental scenario, and of 1.24% in average accuracy and 2.01% in intransigence in a class-incremental scenario.
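
The decoupling idea can be illustrated with a minimal sketch. It rests on assumptions made for illustration, not details stated in the abstract: the current model's features and a frozen previous-task model's features for the same batch are compared through a Barlow Twins-style per-dimension correlation; the first `num_common` dimensions are taken as the task-common part; and the two terms are combined with a hypothetical weight `lam`. All function names are hypothetical. The sketch only computes the loss value in plain Python and omits everything a real training setup would need (projector/predictor networks, gradients, batching).

```python
import math
from typing import List

Matrix = List[List[float]]  # batch of N feature vectors, each of dimension D


def standardize_columns(z: Matrix, eps: float = 1e-8) -> Matrix:
    """Normalize every feature dimension to zero mean and unit variance over the batch."""
    n, d = len(z), len(z[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [row[j] for row in z]
        mean = sum(col) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in col) / n)
        for i in range(n):
            out[i][j] = (col[i] - mean) / (std + eps)
    return out


def per_dim_correlation(z_new: Matrix, z_old: Matrix) -> List[float]:
    """Diagonal of the cross-correlation matrix: correlation between dimension j of
    the current model's features and dimension j of the frozen old model's features."""
    a, b = standardize_columns(z_new), standardize_columns(z_old)
    n, d = len(a), len(a[0])
    return [sum(a[i][j] * b[i][j] for i in range(n)) / n for j in range(d)]


def decoupled_distillation_loss(z_new: Matrix, z_old: Matrix,
                                num_common: int, lam: float = 1.0) -> float:
    """Hypothetical decoupled distillation term (assumed form, for illustration).

    Dimensions [0, num_common) are task-common: their correlation is pulled toward 1
    (memory stability). The remaining dimensions are task-specific: their correlation
    is pushed toward 0 (plasticity for new features).
    """
    corr = per_dim_correlation(z_new, z_old)
    stability = sum((1.0 - c) ** 2 for c in corr[:num_common])
    plasticity = sum(c ** 2 for c in corr[num_common:])
    return stability + lam * plasticity


# Toy batch: dimension 0 is shared, dimension 1 is uncorrelated between models.
z_old = [[1.0, 1.0], [2.0, 1.0], [3.0, -1.0], [4.0, -1.0]]
z_new = [[1.0, 1.0], [2.0, -1.0], [3.0, 1.0], [4.0, -1.0]]
print(decoupled_distillation_loss(z_new, z_old, num_common=1))  # prints a value close to 0.0
```

In the toy batch the shared dimension is perfectly correlated and the task-specific dimension is uncorrelated, so both terms vanish. Feeding identical features for the task-specific part would instead be penalized, which is the mechanism that leaves room for new features.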

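The two reported metrics are sketched below, assuming the commonly used continual-learning definitions of Chaudhry et al. (2018); the abstract itself does not spell them out. With a_{k,j} the accuracy on task j after training through task k, average accuracy is A_k = (1/k) * sum_{j=1..k} a_{k,j}, and intransigence is I_k = a*_k - a_{k,k}, where a*_k is the accuracy on task k of a reference model trained jointly on all data up to task k. Under these definitions lower intransigence is better, so an improvement in intransigence is a reduction. The function names and the accuracy values in the example are hypothetical.

```python
from typing import Sequence


def average_accuracy(acc: Sequence[Sequence[float]], k: int) -> float:
    """A_k: mean accuracy over tasks 1..k after training through task k.

    acc[k-1][j-1] holds a_{k,j}, the accuracy on task j measured after
    training on task k (a lower-triangular, jagged table).
    """
    return sum(acc[k - 1][:k]) / k


def intransigence(acc: Sequence[Sequence[float]],
                  reference: Sequence[float], k: int) -> float:
    """I_k = a*_k - a_{k,k}.

    reference[k-1] is a*_k, the accuracy on task k of a model trained jointly
    on all data seen up to task k. Smaller values mean the incremental model
    learns the new task nearly as well as joint training (higher plasticity).
    """
    return reference[k - 1] - acc[k - 1][k - 1]


# Hypothetical accuracies (%) for two tasks; not results from this paper.
acc = [
    [80.0],        # after task 1: task 1
    [75.0, 70.0],  # after task 2: task 1, task 2
]
reference = [82.0, 74.0]
print(average_accuracy(acc, 2))          # 72.5
print(intransigence(acc, reference, 2))  # 4.0
```

How the per-task accuracies are obtained in a self-supervised setting (for example, a downstream probe on frozen features) is left open here.
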