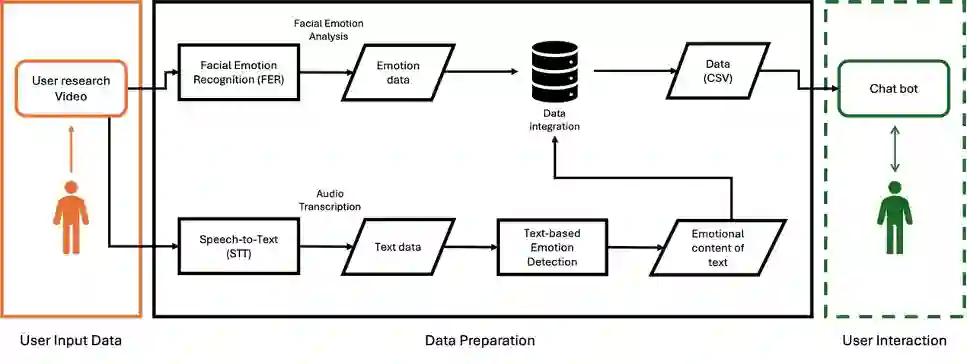

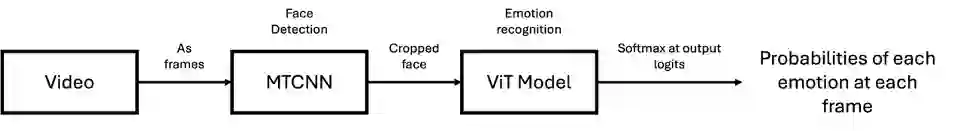

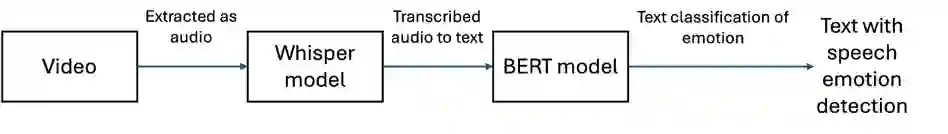

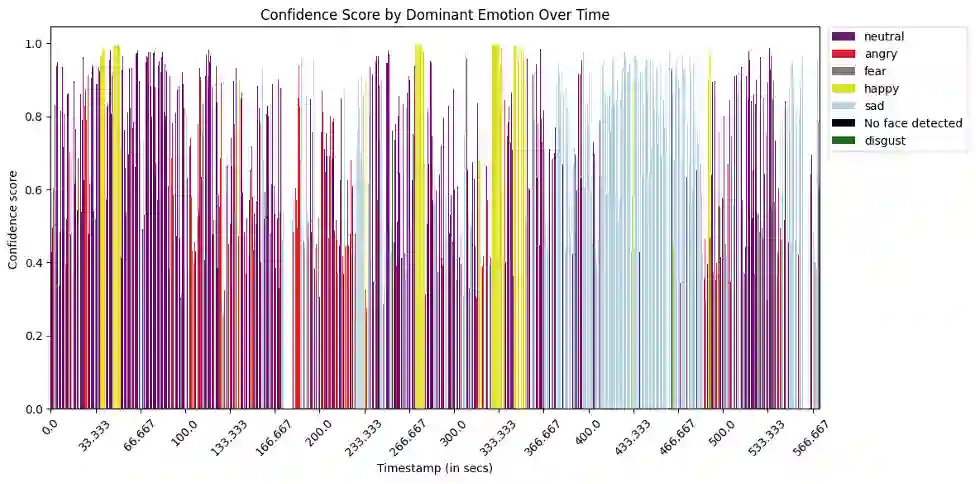

Emotion recognition technology has been studied over the past decade. Given its growing importance and its applications in customer service, healthcare, education and other domains, this study explores its potential for user experience (UX) evaluation. Recognizing and tracking user emotions in user research videos helps researchers understand what users need and expect from a service or product. However, little research in UX has focused on automating emotion extraction from video using more than one modality. This study combines facial emotion recognition, speech-to-text transcription and text-based emotion recognition to capture emotional nuances in a user research video and extract meaningful, actionable insights. To select a facial emotion recognition model, 10 pre-trained models were evaluated on three benchmark datasets (FER-2013, AffectNet and CK+), and the one that generalized best across them was chosen. Speech was transcribed to text with OpenAI's Whisper model, and emotions were then recognized from the text using a pre-trained model available on the HuggingFace website with a reported evaluation accuracy above 95%. The study integrates the collected data through temporal alignment and fusion to provide deeper, contextual insights. It further demonstrates how data analysis can be automated with the PandasAI Python library using OpenAI's GPT-4o model, and discusses other possible solutions. Overall, this study is a proof of concept for automatically extracting meaningful insights from a video based on user emotions.
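The temporal alignment and fusion step described in the abstract can be illustrated with a minimal sketch. It is not the study's implementation and rests on several assumptions: transcript segments are dicts with `start`, `end` and `text` keys (the shape of the `segments` list in Whisper's transcription output), facial predictions are `(timestamp, label)` pairs sampled from video frames, both classifiers share one label vocabulary, and a simple majority vote serves as the fusion rule. The names `align_and_fuse` and `FusedSegment` and all sample data are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class FusedSegment:
    start: float
    end: float
    text: str
    text_emotion: str
    face_emotion: Optional[str]  # dominant facial label in the window, None if no frames fall in it
    agree: bool                  # True when both modalities report the same label


def align_and_fuse(segments, text_emotions, face_frames):
    """Attach the dominant facial emotion to each transcript segment (hypothetical helper).

    segments: dicts with "start"/"end" (seconds) and "text", shaped like
        the segments in Whisper's transcription output (assumption).
    text_emotions: one label per segment from a text emotion classifier.
    face_frames: (timestamp_seconds, label) pairs from a facial emotion model.
    """
    fused = []
    for seg, text_label in zip(segments, text_emotions):
        # Temporal alignment: keep facial predictions inside [start, end).
        window = [label for t, label in face_frames if seg["start"] <= t < seg["end"]]
        # Fusion by majority vote over the aligned frames (an assumed, simple rule).
        face_label = Counter(window).most_common(1)[0][0] if window else None
        fused.append(FusedSegment(
            start=seg["start"],
            end=seg["end"],
            text=seg["text"].strip(),
            text_emotion=text_label,
            face_emotion=face_label,
            agree=(face_label == text_label),
        ))
    return fused


# Hypothetical sample data for illustration only.
segments = [
    {"start": 0.0, "end": 4.0, "text": " This checkout page is confusing."},
    {"start": 4.0, "end": 7.5, "text": " Oh, that worked nicely!"},
]
text_emotions = ["anger", "joy"]
face_frames = [(0.5, "anger"), (1.5, "neutral"), (2.5, "anger"),
               (5.0, "neutral"), (6.0, "neutral"), (7.0, "joy")]

fused = align_and_fuse(segments, text_emotions, face_frames)
for row in fused:
    print(row)
```

In this toy example the second segment reads as joyful in the transcript while the face stays mostly neutral; flagging such cross-modal disagreements is one way a fused, time-aligned table could point researchers to moments worth reviewing before any automated analysis.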

翻译:情绪识别技术在过去十年中得到了广泛研究。随着其在客户服务、医疗、教育等领域的重要性日益增长和应用范围不断扩大,本研究旨在探索该技术在用户体验评估领域的潜力与价值。在用户研究视频中识别并追踪用户情绪,对于理解用户对服务/产品的需求与期望至关重要。当前在用户体验领域,针对融合多模态信息的视频进行自动化情绪提取的研究尚不充分。本研究旨在通过整合面部情绪识别、语音转文本及基于文本的情绪识别等多种模态,从用户研究视频中捕捉情感细微差异,并提取具有可操作性的深度洞察。在面部情绪识别模型选择方面,我们在FER-2013、AffectNet和CK+三个基准数据集上评估了10个预训练模型,最终选取了泛化能力最优的模型。为提取语音并转换为文本,本研究采用了OpenAI的Whisper模型;文本情绪识别则通过HuggingFace网站上评估准确率超过95%的预训练模型实现。研究还通过时序对齐与融合技术对采集的数据进行整合,以获取更具深度和上下文关联的洞察。此外,本研究展示了通过PandasAI Python库实现自动化数据分析的路径,其中集成了OpenAI的GPT-4o模型,并对其他潜在解决方案进行了探讨。本研究尝试构建一个概念验证框架,实现基于用户情绪从视频中自动提取有意义洞察的完整流程。