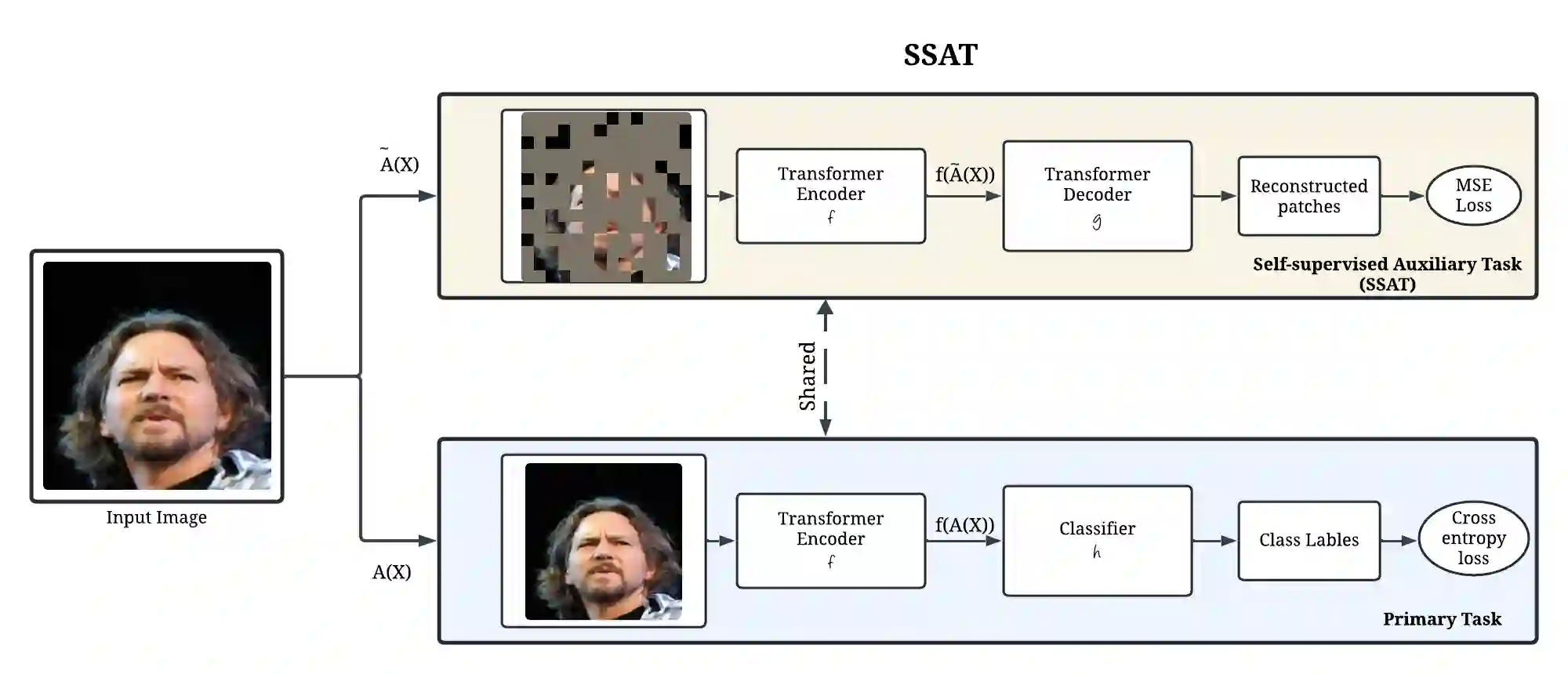

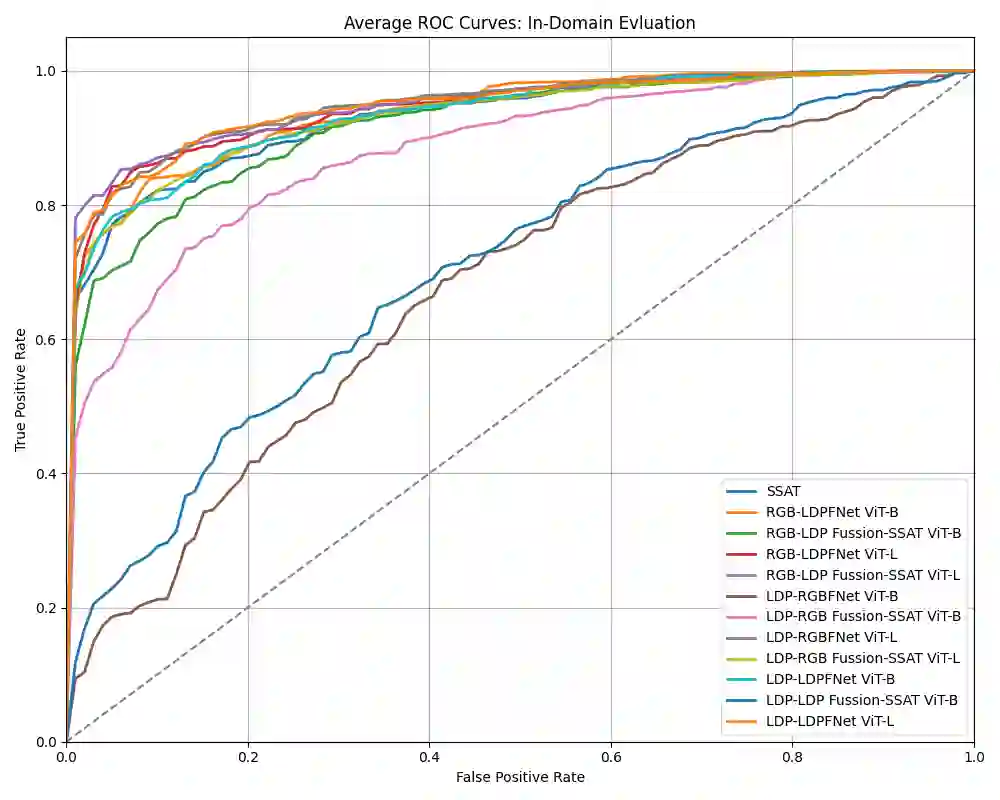

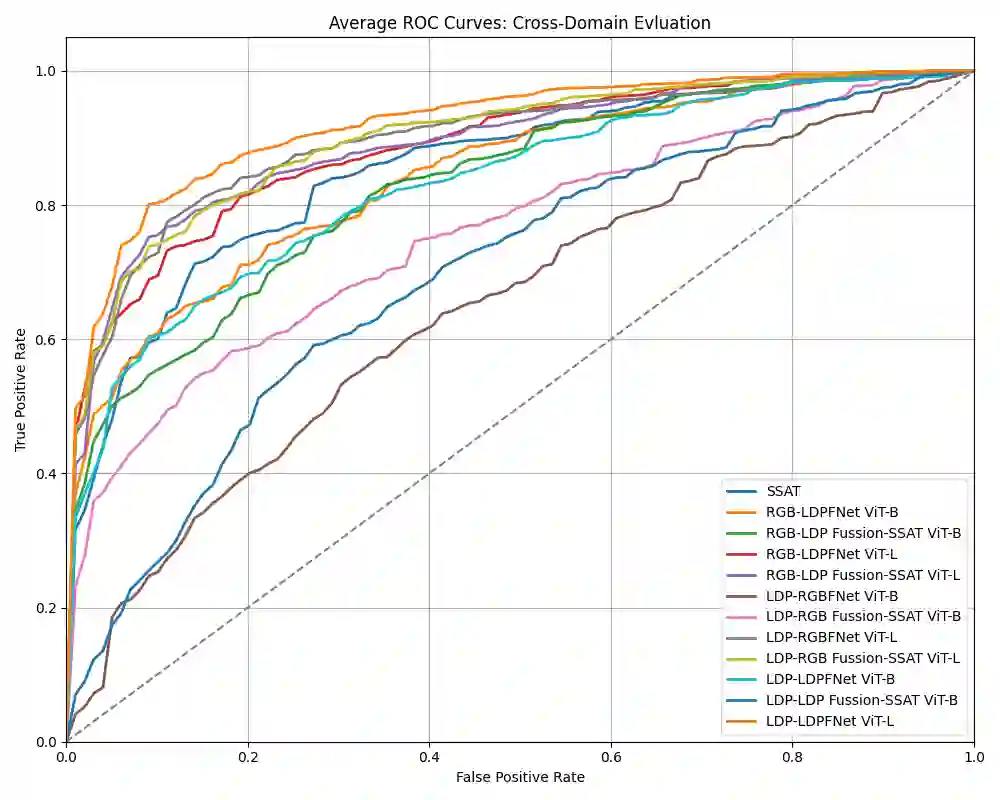

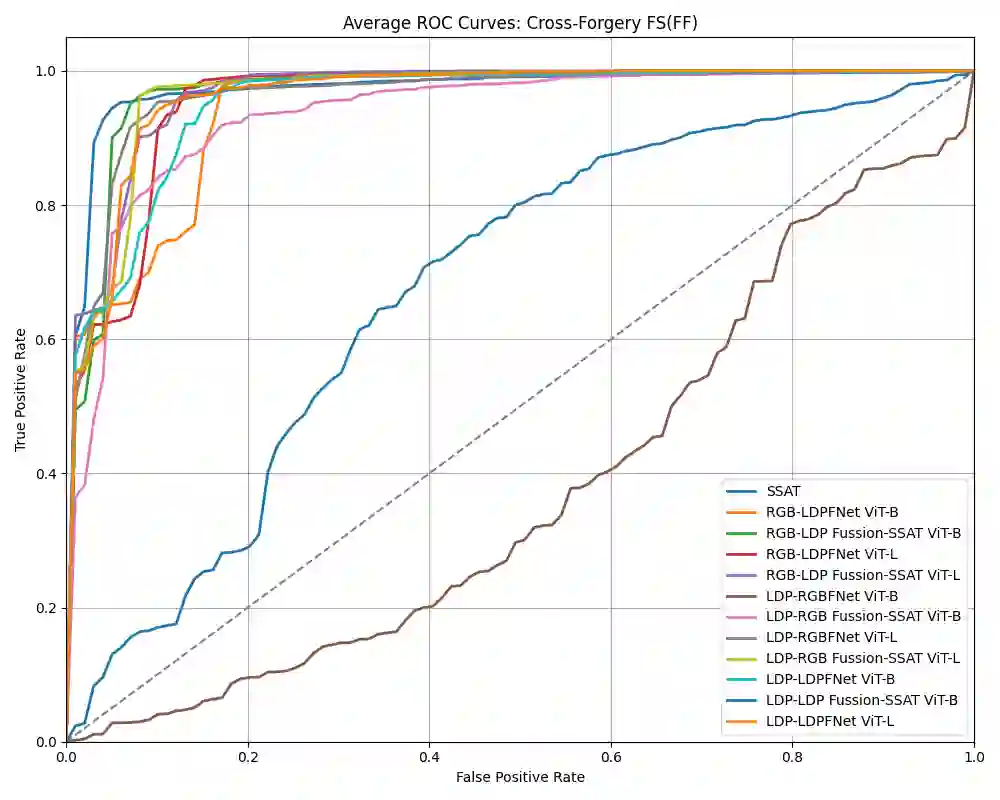

In this work, we explored self-supervised learning as an auxiliary task for improving the primary task of generalised deepfake detection. To this end, we compared several combinations of training schemes for the auxiliary and primary tasks to identify the most effective one. Our findings show that fusing the feature representations learned by the self-supervised auxiliary tasks with those of the primary task yields a powerful representation for this problem. The fused representation combines complementary information from both the self-supervised and primary tasks, which improves performance on the primary task. We evaluated our approach on a broad set of datasets, including DF40, FaceForensics++, Celeb-DF, DFD, FaceShifter and UADFV. In cross-dataset evaluation, it generalised better than current state-of-the-art detectors.
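To make the idea of feature-level fusion concrete, here is a minimal sketch. It assumes that fusion is a simple concatenation of a self-supervised encoder's embedding with the primary-task embedding, followed by a linear real/fake head. The function names, feature dimensions and random stand-in features are hypothetical illustrations. They are not the authors' architecture or fusion rule.

```python
import numpy as np

def fuse_features(ssl_feat: np.ndarray, sup_feat: np.ndarray) -> np.ndarray:
    """Concatenate per-sample features along the last axis (assumed fusion rule)."""
    if ssl_feat.shape[0] != sup_feat.shape[0]:
        raise ValueError("batch sizes must match")
    return np.concatenate([ssl_feat, sup_feat], axis=-1)

def predict_fake_prob(fused: np.ndarray, w: np.ndarray, b: float) -> np.ndarray:
    """Hypothetical linear head followed by a sigmoid, giving P(fake) per sample."""
    logits = fused @ w + b
    return 1.0 / (1.0 + np.exp(-logits))

rng = np.random.default_rng(0)
batch, d_ssl, d_sup = 4, 8, 16
# Random stand-ins for embeddings from a self-supervised (auxiliary) encoder
# and a supervised (primary-task) encoder; real features would come from trained models.
ssl_feat = rng.standard_normal((batch, d_ssl))
sup_feat = rng.standard_normal((batch, d_sup))

fused = fuse_features(ssl_feat, sup_feat)   # shape: (batch, d_ssl + d_sup)
w = rng.standard_normal(d_ssl + d_sup) * 0.1  # untrained, illustrative weights
probs = predict_fake_prob(fused, w, 0.0)    # one probability per sample
print(fused.shape, probs.shape)
```

Concatenation is only the simplest possible fusion choice. Attention-based or gated fusion would be alternatives, and this sketch does not indicate which one the work uses.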