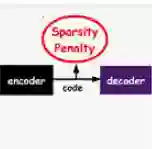

Large Language Models (LLMs) have demonstrated remarkable performance in natural language generation tasks, yet their uncontrolled outputs pose significant ethical and safety risks. Recently, representation engineering methods have shown promising results in steering model behavior by modifying the rich semantic information encoded in activation vectors. However, because semantic directions are difficult to disentangle precisely in high-dimensional representation space, existing approaches suffer from three major limitations: lack of fine-grained control, degraded quality of generated content, and poor interpretability. To address these challenges, we propose a sparse encoding-based representation engineering method, named SRE, which decomposes polysemantic activations into a structured, monosemantic feature space. By leveraging sparse autoencoding, our approach isolates and adjusts only task-specific sparse feature dimensions, enabling precise and interpretable steering of model behavior while preserving content quality. We validate our method on three critical domains (safety, fairness, and truthfulness) using the open-source LLM Gemma-2-2B-it. Experimental results show that SRE achieves superior controllability while maintaining the overall quality of generated content, demonstrating its effectiveness as a fine-grained and interpretable activation steering framework.
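To make the idea of adjusting only selected sparse feature dimensions concrete, here is a minimal toy sketch in pure Python. It is not the authors' implementation: the ReLU encoder, the random weights, the function names (`encode`, `decode`, `steer`), the choice of feature index, and the decision to add back only the change in the reconstruction (so that the autoencoder's reconstruction error is left untouched) are all assumptions made for illustration.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]


def encode(h, W_enc, b_enc):
    """Map an activation h to non-negative sparse features (ReLU encoder, assumed)."""
    pre = matvec(W_enc, h)
    return [max(0.0, z + b) for z, b in zip(pre, b_enc)]


def decode(f, W_dec, b_dec):
    """Map sparse features back to activation space."""
    return [z + b for z, b in zip(matvec(W_dec, f), b_dec)]


def steer(h, W_enc, b_enc, W_dec, b_dec, feature_ids, target):
    """Set the chosen feature dimensions to `target` and return the edited activation.

    Only the difference between the edited and original reconstructions is
    added to h, so everything the autoencoder fails to reconstruct is kept
    (an assumption of this sketch, not a claim about SRE).
    """
    f = encode(h, W_enc, b_enc)
    f_new = list(f)
    for i in feature_ids:
        f_new[i] = target
    recon_old = decode(f, W_dec, b_dec)
    recon_new = decode(f_new, W_dec, b_dec)
    return [x + (n - o) for x, n, o in zip(h, recon_new, recon_old)]


# Toy setup with hypothetical sizes and random (untrained) weights.
random.seed(0)
d_model, n_features = 4, 8
W_enc = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(n_features)]
b_enc = [0.0] * n_features
W_dec = [[random.gauss(0, 1) for _ in range(n_features)] for _ in range(d_model)]
b_dec = [0.0] * d_model
h = [random.gauss(0, 1) for _ in range(d_model)]

# Push hypothetical feature 2 to a fixed activation level.
steered = steer(h, W_enc, b_enc, W_dec, b_dec, feature_ids=[2], target=5.0)
```

In this sketch the edit moves `h` only along the decoder direction of the selected feature, scaled by how far that feature is from the target value, which is one simple way to read "adjusts only task-specific sparse feature dimensions". A real setup would use a trained sparse autoencoder and apply the edit inside the model's forward pass.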
