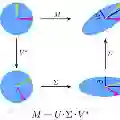

Quantum Federated Learning (QFL) promises to revolutionize distributed machine learning by combining the computational power of quantum devices with collaborative model training. Yet the privacy of both data and models remains a critical challenge. In this work, we propose a privacy-preserving QFL framework in which a network of $n$ quantum devices trains local models and transmits them to a central server under a multi-layered privacy protocol. Our design uses Singular Value Decomposition (SVD), Quantum Key Distribution (QKD), and Analytic Quantum Gradient Descent (AQGD) to secure the data preparation, model sharing, and training stages. Through theoretical analysis and experiments on contemporary quantum platforms and datasets, we demonstrate that the framework robustly safeguards data and model confidentiality while maintaining training efficiency.
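To make the training and model-sharing layers more concrete, here is a minimal classical simulation in Python. It is an illustrative sketch under stated assumptions, not the authors' implementation. It assumes that AQGD means gradient descent driven by parameter-shift (analytic) gradients. It models each device's circuit as a single RY rotation whose ⟨Z⟩ expectation is cos θ, and it simulates BB84 key sifting with no eavesdropper or channel noise. The sifted key serves as a one-time pad over each parameter's 64-bit encoding, and the server aggregates by plain averaging. The SVD data-preparation layer is omitted. All function names (`local_train`, `bb84_sifted_key`, `encrypt_param`, and so on) and the per-device targets are hypothetical.

```python
import math
import random
import struct

# --- Local training (assumed reading of AQGD: parameter-shift gradients) ---
def expectation_z(theta: float) -> float:
    """<Z> after RY(theta)|0>, computed exactly; stands in for a circuit run."""
    return math.cos(theta)

def parameter_shift_grad(f, theta: float) -> float:
    """Exact derivative of f for a rotation gate generated by a Pauli operator."""
    shift = math.pi / 2
    return (f(theta + shift) - f(theta - shift)) / 2

def local_train(theta: float, target: float, lr: float = 0.2, steps: int = 100) -> float:
    """Minimise the hypothetical loss (<Z>(theta) - target)^2 on one device."""
    for _ in range(steps):
        residual = expectation_z(theta) - target
        grad = 2 * residual * parameter_shift_grad(expectation_z, theta)
        theta -= lr * grad
    return theta

# --- Model sharing: BB84 sifting (noiseless, no eavesdropper) + one-time pad ---
def bb84_sifted_key(n_qubits: int, rng: random.Random) -> list[int]:
    """Simulate BB84 sifting and return the key bits both parties keep."""
    alice_bits = [rng.randint(0, 1) for _ in range(n_qubits)]
    alice_bases = [rng.randint(0, 1) for _ in range(n_qubits)]
    bob_bases = [rng.randint(0, 1) for _ in range(n_qubits)]
    # Bob recovers Alice's bit when bases match; otherwise his outcome is random.
    bob_bits = [bit if a == b else rng.randint(0, 1)
                for bit, a, b in zip(alice_bits, alice_bases, bob_bases)]
    # Public basis comparison: keep only positions where the bases agreed.
    alice_key = [x for x, a, b in zip(alice_bits, alice_bases, bob_bases) if a == b]
    bob_key = [y for y, a, b in zip(bob_bits, alice_bases, bob_bases) if a == b]
    assert alice_key == bob_key  # holds by construction without noise or Eve
    return alice_key

def bits_to_int(bits: list[int]) -> int:
    """Interpret a list of 0/1 values as a big-endian unsigned integer."""
    return int("".join(map(str, bits)), 2)

def encrypt_param(theta: float, key_bits: list[int]) -> int:
    """XOR the 64-bit IEEE-754 encoding of a parameter with 64 key bits."""
    if len(key_bits) < 64:
        raise ValueError("not enough sifted key bits for a 64-bit pad")
    raw = int.from_bytes(struct.pack("<d", theta), "little")
    return raw ^ bits_to_int(key_bits[:64])

def decrypt_param(cipher: int, key_bits: list[int]) -> float:
    """Undo the one-time pad and decode the float."""
    raw = cipher ^ bits_to_int(key_bits[:64])
    return struct.unpack("<d", raw.to_bytes(8, "little"))[0]

if __name__ == "__main__":
    rng = random.Random(7)
    targets = [0.8, 0.5, -0.2]  # hypothetical per-device data summaries
    ciphertexts, keys = [], []
    for target in targets:
        theta = local_train(1.0, target)
        key = bb84_sifted_key(512, rng)  # fresh device<->server key
        keys.append(key)
        ciphertexts.append(encrypt_param(theta, key))
    # Server decrypts with its copy of each key, then averages (assumed FedAvg).
    thetas = [decrypt_param(c, k) for c, k in zip(ciphertexts, keys)]
    global_theta = sum(thetas) / len(thetas)
    print(f"global theta = {global_theta:.4f}")
```

A real deployment would estimate ⟨Z⟩ from circuit shots and run error estimation and privacy amplification on the raw key. It would also draw fresh key material for every transmission, because a one-time pad must never be reused.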
