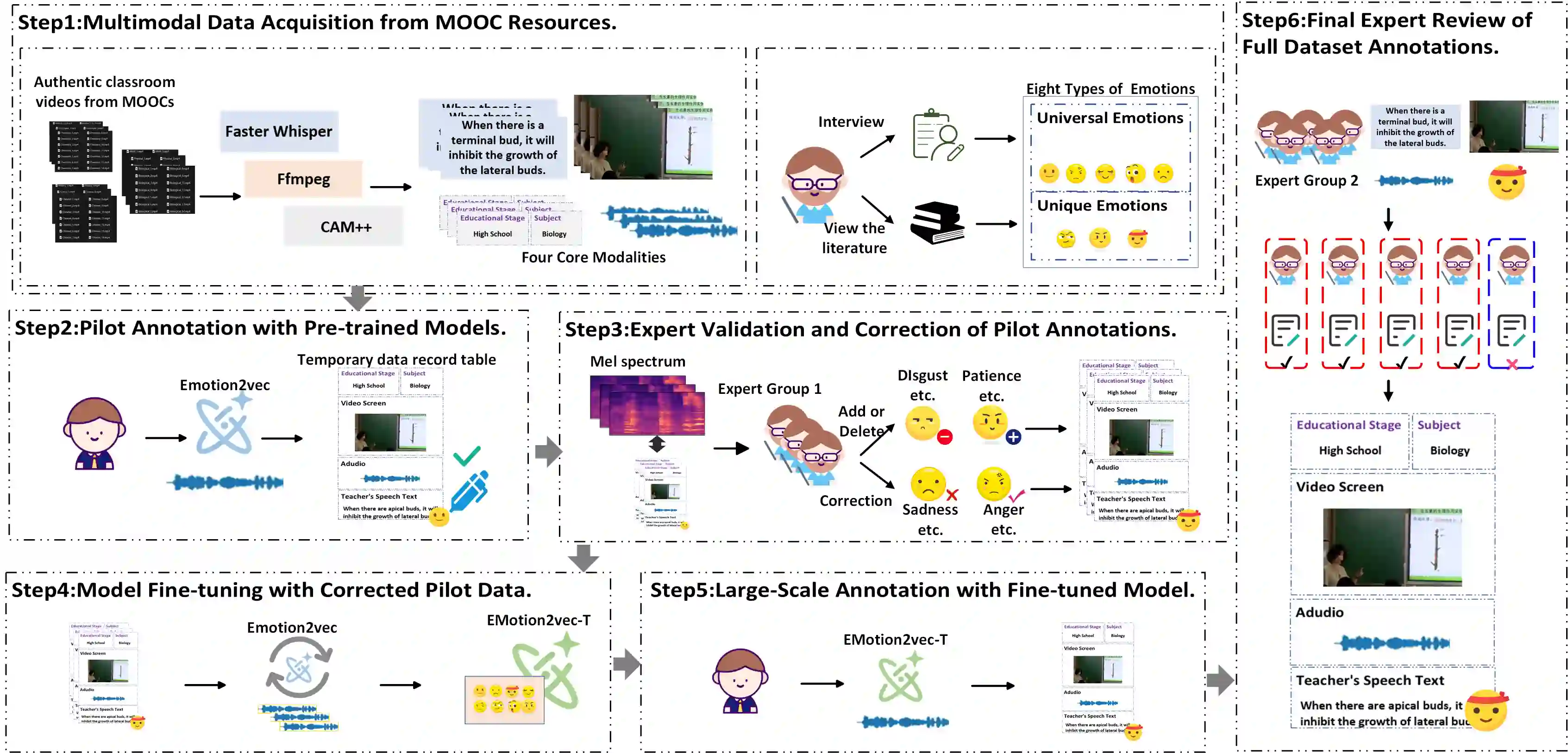

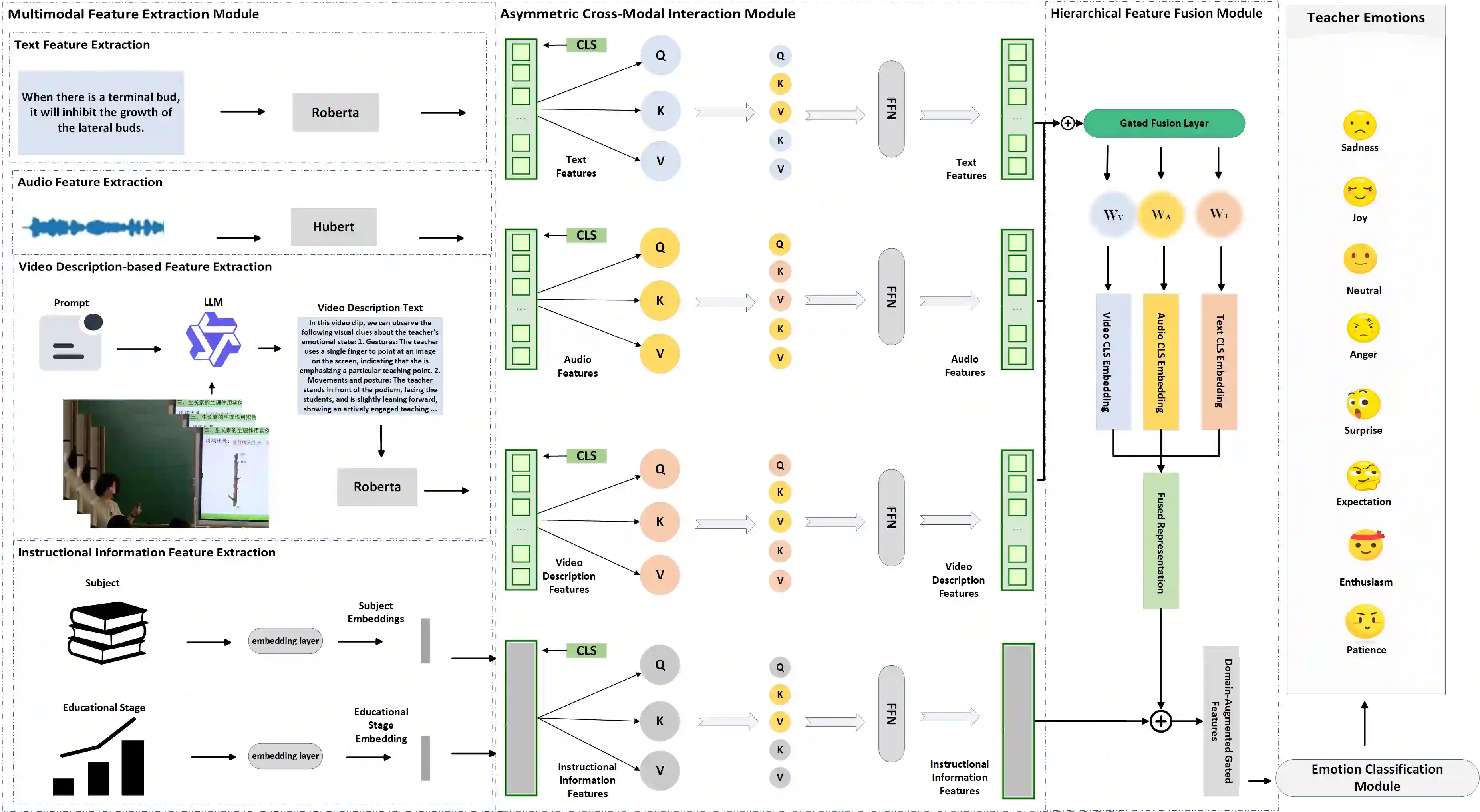

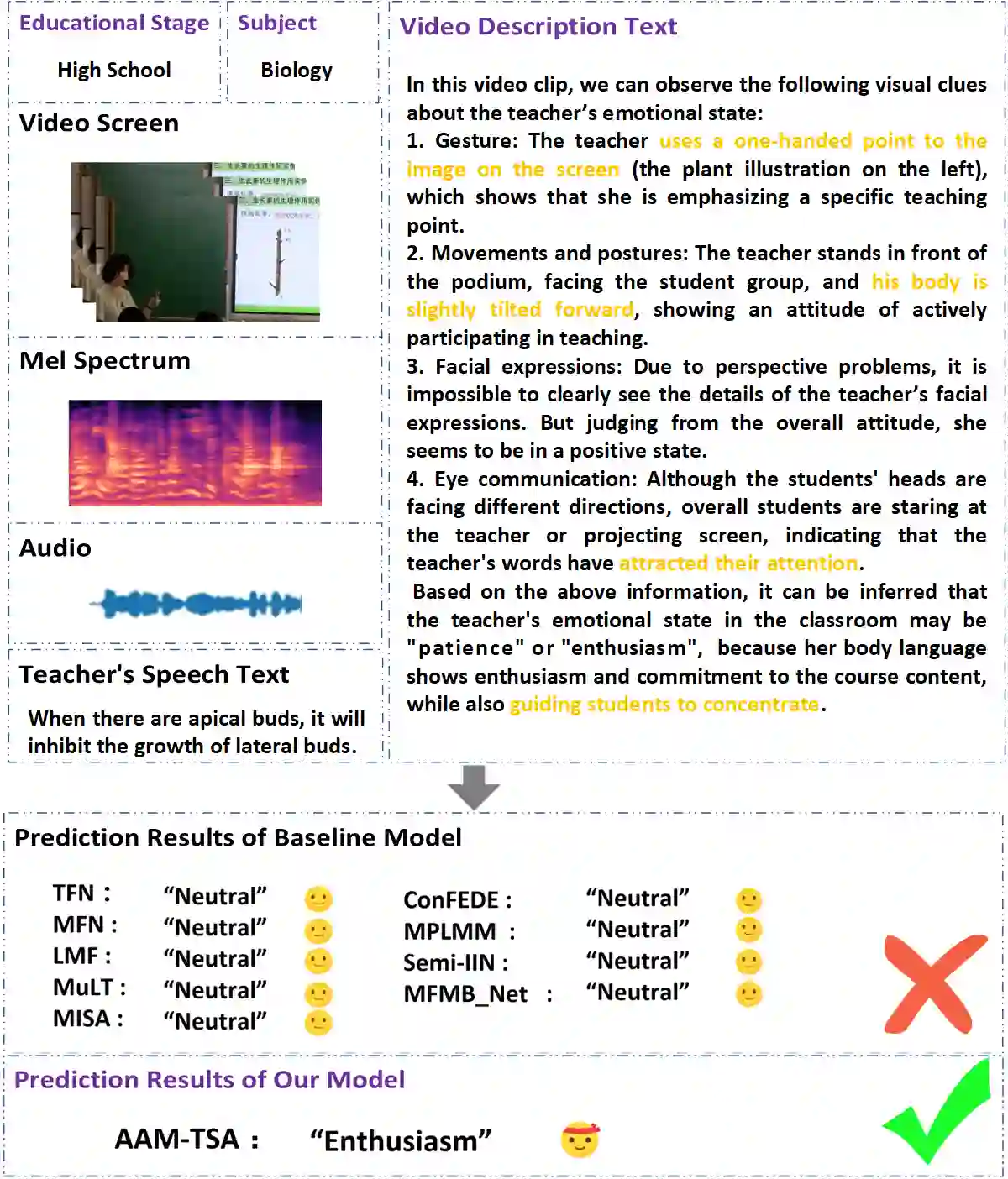

Teachers' emotional states are critical in educational settings and strongly affect teaching efficacy, student engagement, and learning outcomes. However, existing studies often fail to capture teachers' emotions accurately because teachers' emotional expression is performative, and they overlook the influence of instructional information on that expression. In this paper, we systematically investigate teacher sentiment analysis by constructing both a dataset and a model. We build T-MED, the first large-scale multimodal sentiment analysis dataset for teachers. To ensure labeling accuracy and efficiency, we employ a human-machine collaborative labeling process. T-MED contains 14,938 instances of teacher emotional data from 250 real classrooms across 11 subjects, ranging from K-12 to higher education, and integrates text, audio, video, and instructional information. Furthermore, we propose AAM-TSA, a novel asymmetric attention-based multimodal teacher sentiment analysis model. AAM-TSA introduces an asymmetric attention mechanism and a hierarchical gating unit to enable differentiated cross-modal feature fusion and precise emotion classification. Experimental results on T-MED demonstrate that AAM-TSA significantly outperforms existing state-of-the-art methods in both accuracy and interpretability.
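To make the idea of asymmetric attention combined with hierarchical gating more concrete, the following is a minimal NumPy sketch. It is not the AAM-TSA implementation: the abstract gives no architectural details, so the choice of text as the querying modality, the two-stage gate, the function names (`cross_attention`, `gated_fusion`, `fuse`), and all shapes are assumptions made for illustration, and instructional information is omitted entirely.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def cross_attention(query, context):
    """Scaled dot-product attention: query (Tq, d) attends to context (Tc, d)."""
    scores = query @ context.T / np.sqrt(query.shape[-1])
    return softmax(scores, axis=-1) @ context


def gated_fusion(primary, auxiliary, w_gate):
    """Mix two (T, d) feature maps with a per-feature gate in (0, 1)."""
    gate = sigmoid(np.concatenate([primary, auxiliary], axis=-1) @ w_gate)
    return gate * primary + (1.0 - gate) * auxiliary


def fuse(text, audio, video, w_av, w_out):
    # Asymmetric (assumed direction): text queries audio and video,
    # but no attention flows from audio or video back to text.
    text_from_audio = cross_attention(text, audio)
    text_from_video = cross_attention(text, video)
    # Hierarchical (assumed): gate the two non-verbal views first,
    # then gate the result against the original text features.
    nonverbal = gated_fusion(text_from_audio, text_from_video, w_av)
    fused = gated_fusion(text, nonverbal, w_out)
    return fused.mean(axis=0)  # pooled utterance-level vector, shape (d,)


# Hypothetical toy inputs: random features standing in for encoder outputs.
rng = np.random.default_rng(0)
d = 8
text = rng.normal(size=(5, d))    # 5 text tokens
audio = rng.normal(size=(12, d))  # 12 audio frames
video = rng.normal(size=(7, d))   # 7 video frames
w_av = rng.normal(size=(2 * d, d)) * 0.1   # untrained gate weights
w_out = rng.normal(size=(2 * d, d)) * 0.1  # untrained gate weights

pooled = fuse(text, audio, video, w_av, w_out)
print(pooled.shape)
```

In a trained model the gate weights would be learned and the pooled vector would feed a classifier over emotion labels; here the weights are random, so the output only demonstrates that the shapes and data flow are consistent.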
