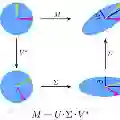

Low-Rank Adaptation (LoRA) has become a popular technique for parameter-efficient fine-tuning of large language models (LLMs). In many real-world scenarios, multiple adapters are loaded simultaneously to customize an LLM for personalized user experiences or to support a diverse range of tasks. Although each adapter is lightweight in isolation, their aggregate cost becomes substantial at scale. To address this, we propose LoRAQuant, a mixed-precision post-training quantization method tailored to LoRA. Specifically, LoRAQuant reparameterizes each adapter by singular value decomposition (SVD) to concentrate the most important information into specific rows and columns. This makes it possible to quantize the important components to higher precision while quantizing the rest to ultra-low bitwidth. We conduct comprehensive experiments with LLaMA 2-7B, LLaMA 2-13B, and Mistral 7B models on mathematical reasoning, coding, and summarization tasks. Results show that LoRAQuant uses significantly fewer bits than other quantization methods while achieving comparable or even higher performance.
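
The abstract states the idea only at a high level. In standard LoRA notation the update is ΔW = BA with B of shape d_out × r and A of shape r × d_in. Writing BA = UΣVᵀ and splitting Σ^{1/2} between the two factors gives B' = UΣ^{1/2} and A' = Σ^{1/2}Vᵀ. Then B'A' = BA, and the i-th column of B' and the i-th row of A' both have squared norm σ_i, so importance is concentrated in the leading columns and rows. The NumPy sketch below illustrates this under stated assumptions. The helper names (`svd_reparameterize`, `quantize`, `mixed_precision_lora`), the QR-then-SVD route, the fixed split index `k`, the 4-bit/1-bit defaults, and the round-to-nearest quantizer are all hypothetical illustrative choices. They are not LoRAQuant's actual selection rule or quantizer.

```python
import numpy as np


def svd_reparameterize(B, A):
    """Rewrite B @ A as B2 @ A2, ordering columns of B2 / rows of A2 by singular value."""
    # Thin QR factorizations keep the SVD at size r x r instead of d_out x d_in.
    Qb, Rb = np.linalg.qr(B)        # B = Qb @ Rb,    Qb: (d_out, r)
    Qa, Ra = np.linalg.qr(A.T)      # A.T = Qa @ Ra,  Qa: (d_in, r)
    # B @ A = Qb @ (Rb @ Ra.T) @ Qa.T, so only the small core needs an SVD.
    U, S, Vt = np.linalg.svd(Rb @ Ra.T)
    sqrt_s = np.sqrt(S)
    B2 = (Qb @ U) * sqrt_s                  # scale column i by sqrt(S[i])
    A2 = sqrt_s[:, None] * (Vt @ Qa.T)      # scale row i by sqrt(S[i])
    return B2, A2, S


def quantize(x, bits):
    """Hypothetical symmetric round-to-nearest quantizer with one scale per row."""
    if bits == 1:
        # Binarize: sign times the per-row mean magnitude.
        scale = np.abs(x).mean(axis=1, keepdims=True)
        return np.sign(x) * scale
    qmax = 2 ** (bits - 1) - 1
    scale = np.abs(x).max(axis=1, keepdims=True) / qmax
    scale = np.where(scale == 0, 1.0, scale)   # avoid division by zero
    return np.clip(np.round(x / scale), -qmax, qmax) * scale


def mixed_precision_lora(B, A, k, high_bits=4, low_bits=1):
    """Quantize the top-k SVD components at high_bits and the rest at low_bits."""
    B2, A2, _ = svd_reparameterize(B, A)
    # Transpose B2 so that each column of B gets its own quantization scale.
    Bq = np.concatenate([quantize(B2[:, :k].T, high_bits).T,
                         quantize(B2[:, k:].T, low_bits).T], axis=1)
    Aq = np.concatenate([quantize(A2[:k], high_bits),
                         quantize(A2[k:], low_bits)], axis=0)
    return Bq, Aq


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    B = rng.standard_normal((64, 8))   # d_out = 64, rank r = 8
    A = rng.standard_normal((8, 32))   # d_in = 32
    ref = B @ A
    for k in (0, 2, 8):
        Bq, Aq = mixed_precision_lora(B, A, k=k)
        err = np.linalg.norm(Bq @ Aq - ref) / np.linalg.norm(ref)
        print(f"k={k}: relative error {err:.3f}")
```

Sweeping `k` trades bits for accuracy. Here the split is a fixed integer for simplicity, whereas a practical method would choose it per adapter, for example from the singular-value spectrum.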