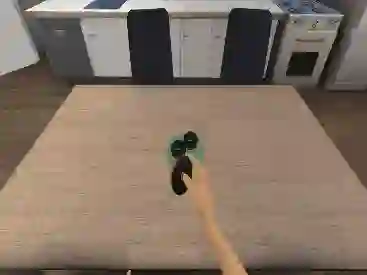

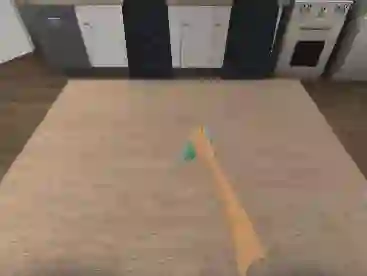

This study proposes a brain-computer interface (BCI) paradigm based on high-level visual imagery for non-invasive, electroencephalography (EEG)-based communication. In high-level visual imagery, as we define it, the user mentally visualizes complex upper-limb movements. This paradigm extends the range of tasks a BCI can support to more sophisticated applications such as EEG-based robotic arm control, and it points toward more intricate and intuitive mind-controlled interfaces. To decode these signals, we developed a deep learning architecture that integrates functional connectivity metrics with a convolutional neural network (CNN)-image transformer. The framework decodes subtle user intentions, accounts for the spatial variability of high-level visual imagery tasks, and translates the decoded intentions into precise commands for a robotic arm. Offline and pseudo-online evaluations demonstrate the framework's effectiveness for real-time use, including fine-grained robotic arm control. Leave-one-subject-out cross-validation further confirms the robustness of the approach, a significant step toward versatile, subject-independent BCI applications. Overall, this research shows how high-level visual imagery combined with deep learning can improve the usability and adaptability of BCI systems, particularly for robotic arm manipulation.
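
The abstract does not specify which functional connectivity metrics are used or how they are fed to the CNN-image transformer. As a minimal sketch, assuming Pearson correlation between EEG channels as the connectivity metric, the Python (NumPy) code below turns one trial into a channel-by-channel matrix that could serve as an image-like network input, and enumerates the folds of a leave-one-subject-out evaluation. The function names, data shapes, and the choice of Pearson correlation are hypothetical illustrations, not the authors' implementation.

```python
import numpy as np


def connectivity_matrix(trial: np.ndarray) -> np.ndarray:
    """Channel-by-channel Pearson correlation for one EEG trial.

    trial: array of shape (n_channels, n_samples).
    Returns a symmetric (n_channels, n_channels) matrix with ones on the
    diagonal. Pearson correlation is an ASSUMED stand-in for the paper's
    (unspecified) functional connectivity metrics.
    """
    if trial.ndim != 2:
        raise ValueError("trial must have shape (n_channels, n_samples)")
    # np.corrcoef treats each row (here: each channel) as one variable.
    return np.corrcoef(trial)


def loso_splits(subject_ids):
    """Yield (held_out_subject, train_idx, test_idx) for leave-one-subject-out CV.

    Every trial of the held-out subject goes to the test fold; all trials of
    the remaining subjects form the training fold.
    """
    subject_ids = np.asarray(subject_ids)
    for subject in np.unique(subject_ids):
        test_idx = np.flatnonzero(subject_ids == subject)
        train_idx = np.flatnonzero(subject_ids != subject)
        yield int(subject), train_idx, test_idx


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical data: 6 trials x 4 channels x 250 samples from 3 subjects.
    trials = rng.standard_normal((6, 4, 250))
    subjects = [1, 1, 2, 2, 3, 3]

    images = np.stack([connectivity_matrix(t) for t in trials])
    print(images.shape)  # (6, 4, 4)

    for subject, train_idx, test_idx in loso_splits(subjects):
        print(subject, train_idx.tolist(), test_idx.tolist())
    # 1 [2, 3, 4, 5] [0, 1]
    # 2 [0, 1, 4, 5] [2, 3]
    # 3 [0, 1, 2, 3] [4, 5]
```

Splitting by subject rather than by trial ensures that no data from the test subject is seen during training, which is what makes a leave-one-subject-out result a measure of subject independence.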
