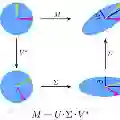

Foundation models (FMs) are pre-trained on large-scale datasets and then fine-tuned for a specific downstream task. The most common fine-tuning method is to update pretrained weights via low-rank adaptation (LoRA). Existing initialization strategies for LoRA often rely on singular value decompositions (SVD) of gradients or weight matrices. However, they do not provably maximize the expected gradient signal, which is critical for fast adaptation. To this end, we introduce Explained Variance Adaptation (EVA), an initialization scheme that uses the directions capturing the most activation variance, provably maximizing the expected gradient signal and accelerating fine-tuning. EVA performs incremental SVD on minibatches of activation vectors and selects the right-singular vectors for initialization once they have converged. Further, by selecting the directions that capture the most activation variance for a given rank budget, EVA accommodates adaptive ranks that reduce the number of trainable parameters. We apply EVA to a variety of fine-tuning tasks, such as language generation and understanding, image classification, and reinforcement learning. EVA exhibits faster convergence than competitors and achieves the highest average score across a multitude of tasks per domain while reducing the number of trainable parameters through rank redistribution. In summary, EVA establishes a new Pareto frontier compared to existing LoRA initialization schemes in both accuracy and efficiency.

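The procedure described in the abstract (incremental SVD over minibatches of activations, a convergence check on the right-singular vectors, and rank allocation by explained variance) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The function names (`update_svd`, `has_converged`, `eva_style_init`, `redistribute_ranks`), the cosine-similarity threshold `tol`, the zero initialization of `B`, and the normalization of explained variance over only the retained components are all assumptions made for illustration.

```python
import numpy as np

def update_svd(S, Vt, batch, k):
    """Fold a minibatch of activations (n x d_in) into a rank-k truncated SVD."""
    if Vt is None:
        stacked = batch
    else:
        # diag(S) @ Vt summarises all rows seen so far, up to rank k.
        stacked = np.vstack([S[:, None] * Vt, batch])
    _, S_new, Vt_new = np.linalg.svd(stacked, full_matrices=False)
    return S_new[:k], Vt_new[:k]

def has_converged(Vt_old, Vt_new, tol=0.99):
    """Assumed criterion: each direction barely moved (|cosine| >= tol)."""
    if Vt_old is None or Vt_old.shape != Vt_new.shape:
        return False
    cos = np.abs(np.sum(Vt_old * Vt_new, axis=1))
    return bool(np.all(cos >= tol))

def eva_style_init(batches, d_out, rank, tol=0.99):
    """Return LoRA factors A (rank x d_in), B (d_out x rank) and singular values."""
    S, Vt = None, None
    for batch in batches:
        S_new, Vt_new = update_svd(S, Vt, batch, rank)
        done = has_converged(Vt, Vt_new, tol)
        S, Vt = S_new, Vt_new
        if done:
            break
    A = Vt.copy()                     # top right-singular vectors of the activations
    B = np.zeros((d_out, A.shape[0])) # assumption: B = 0, so the adapter starts as a no-op
    return A, B, S

def redistribute_ranks(singular_values, budget):
    """Allocate a global rank budget to layers by explained-variance ratio.

    Simplification: ratios are normalised over the retained components only.
    """
    scored = []
    for name, S in singular_values.items():
        ratio = S**2 / np.sum(S**2)
        scored += [(float(r), name) for r in ratio]
    scored.sort(key=lambda t: t[0], reverse=True)
    ranks = {name: 0 for name in singular_values}
    for _, name in scored[:budget]:
        ranks[name] += 1
    return ranks
```

In this sketch, a layer whose activation variance is concentrated in a few directions receives fewer ranks than one whose variance is spread out, which is one way to read the "rank redistribution" mentioned in the abstract.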