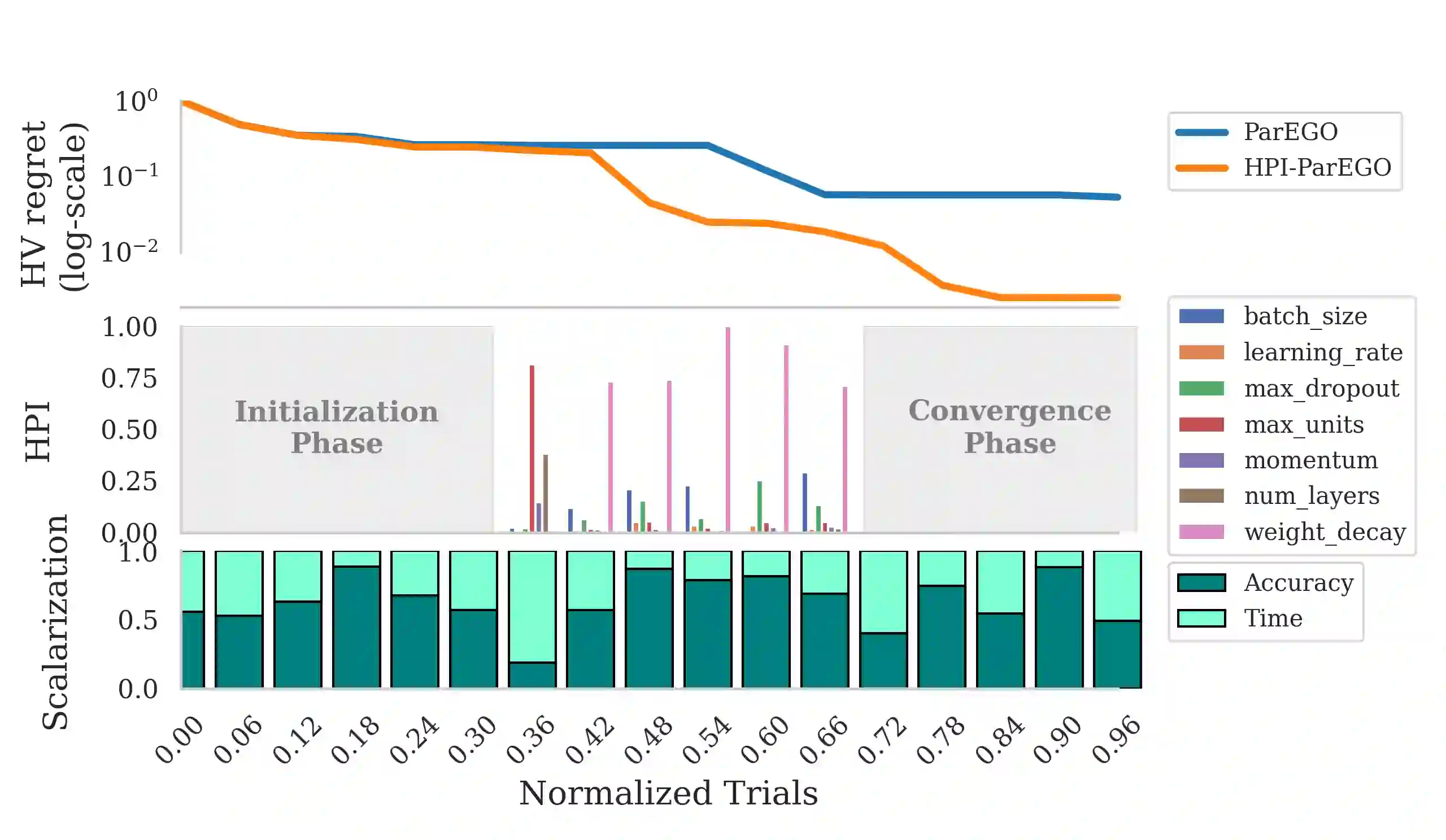

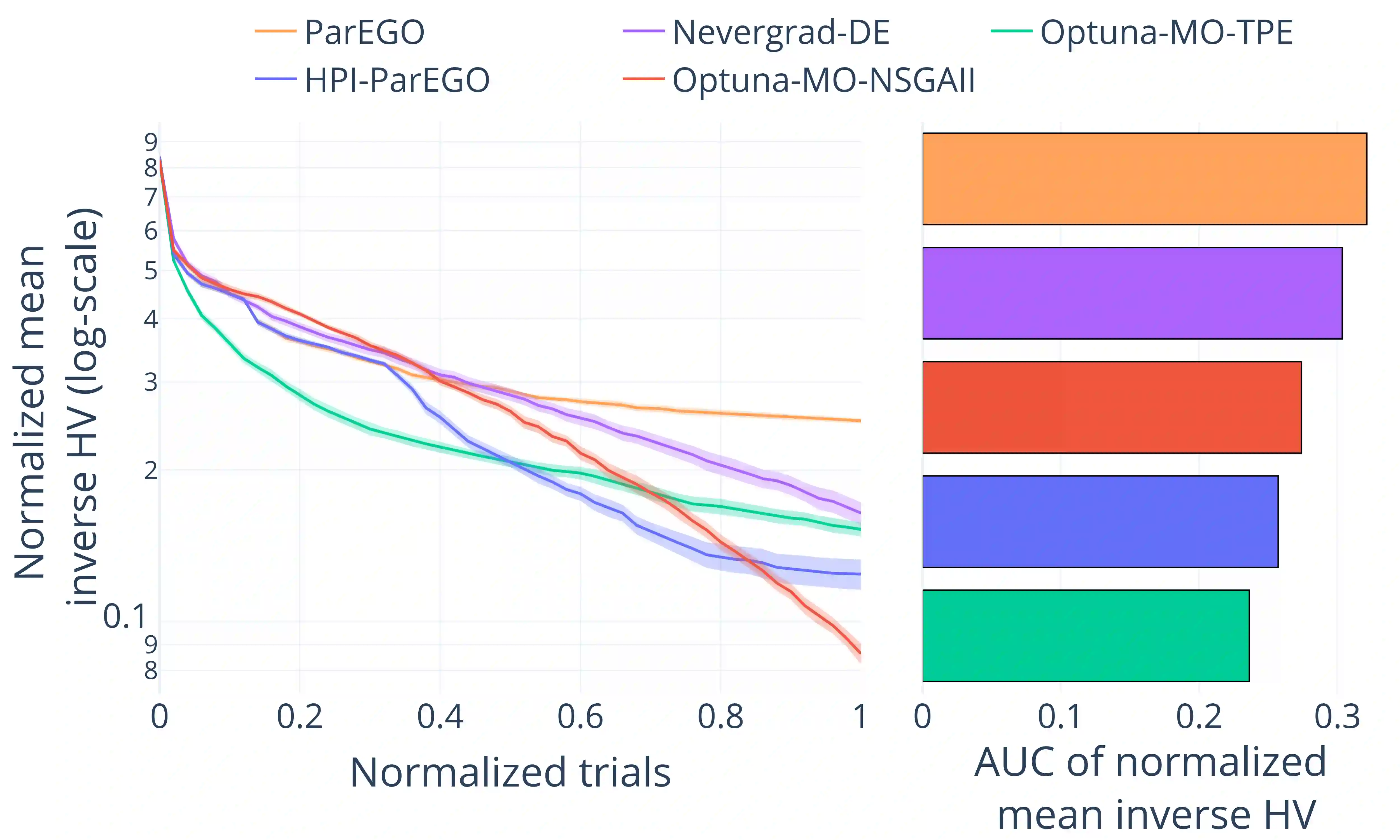

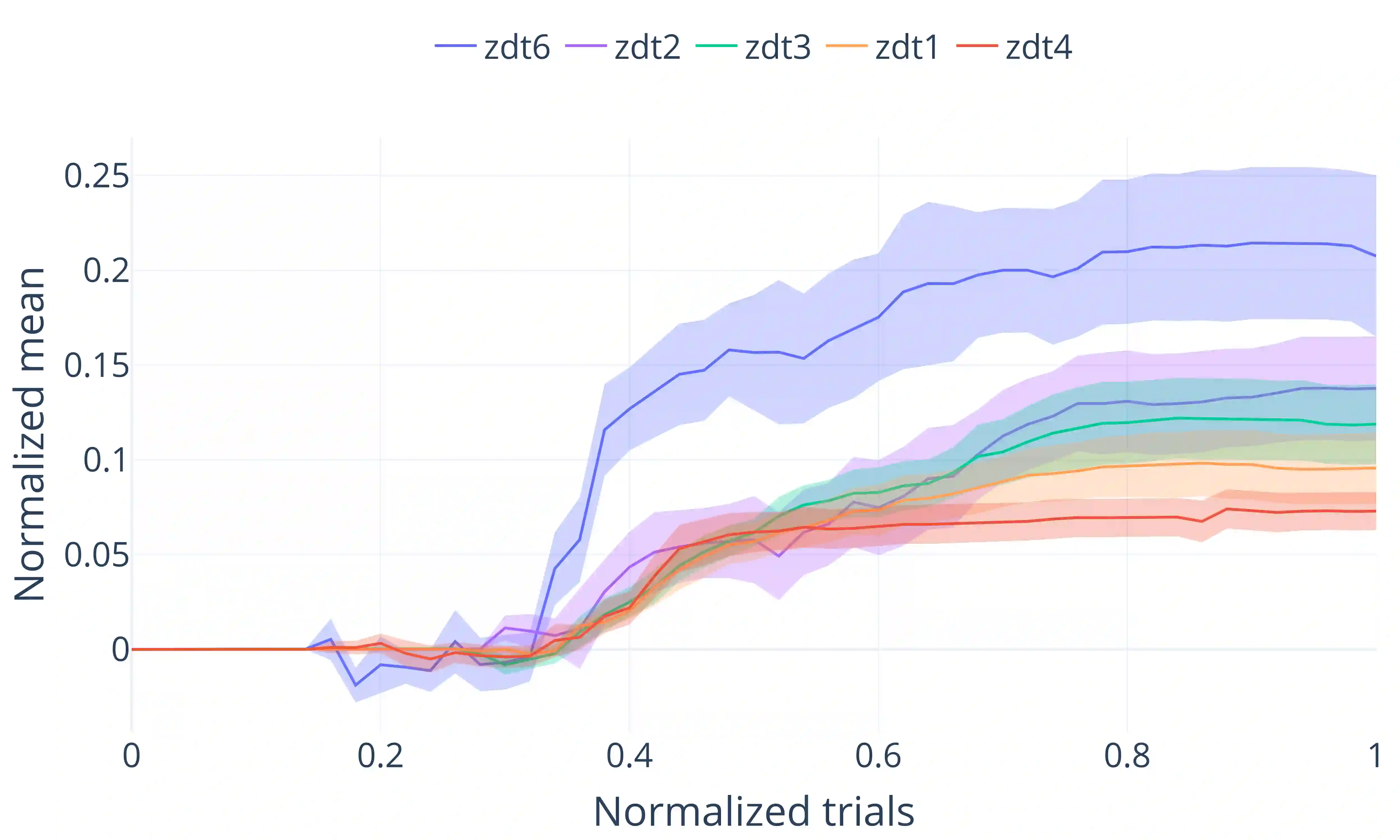

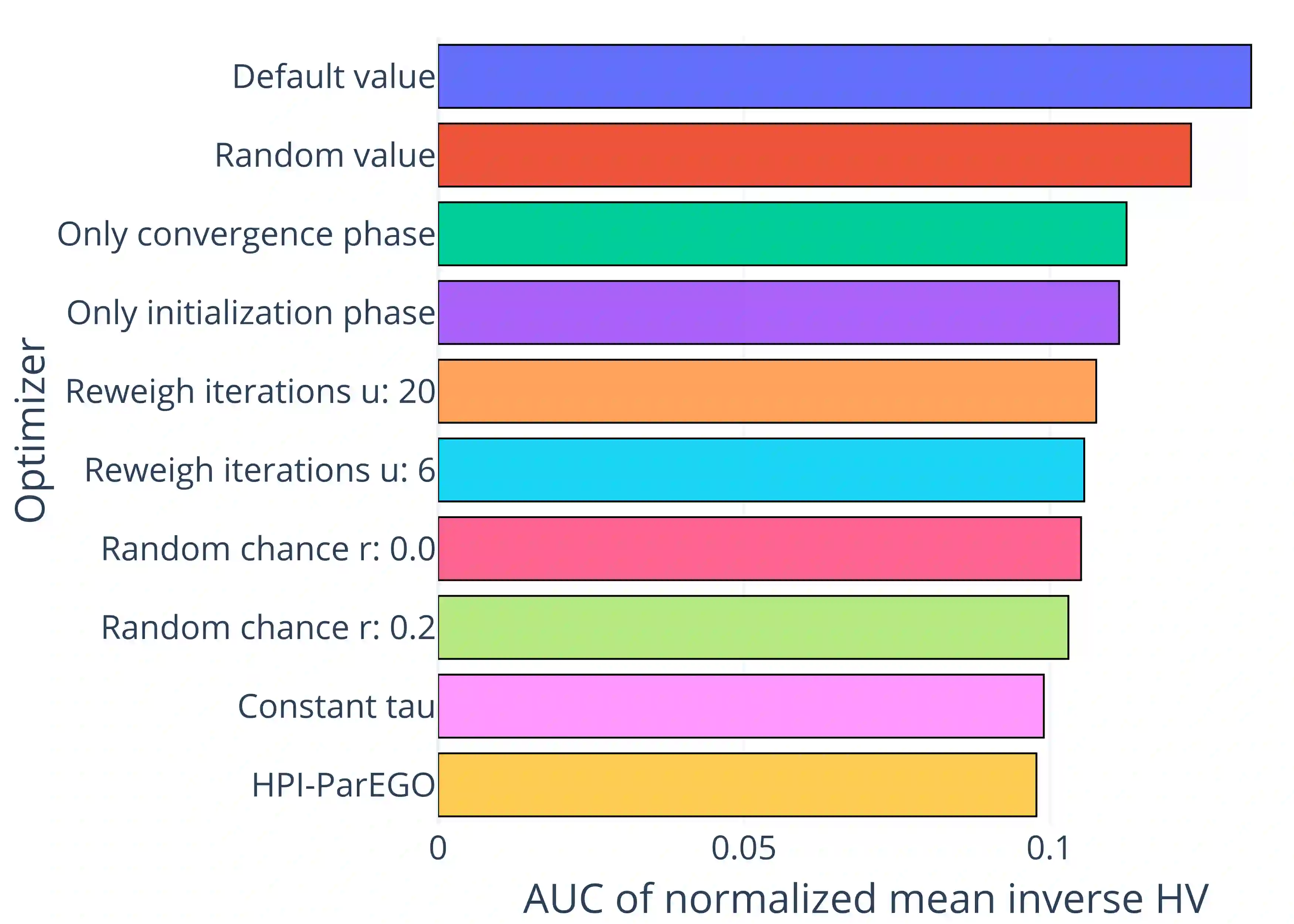

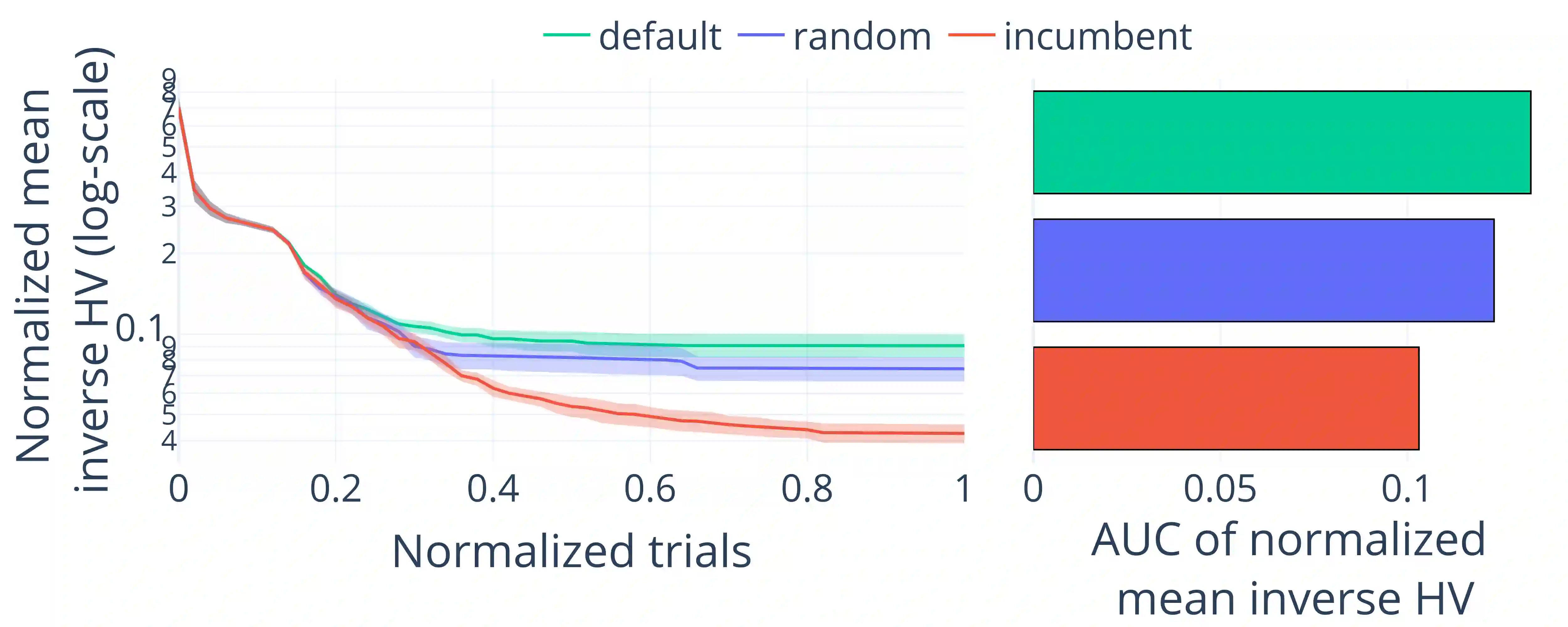

Choosing a suitable ML model is a complex task that can depend on several objectives, e.g., accuracy, model size, fairness, inference time, or energy consumption. In practice, this requires trading off multiple, often competing, objectives through multi-objective optimization (MOO). However, existing MOO methods typically treat all hyperparameters as equally important, overlooking that hyperparameter importance (HPI) can vary significantly with the trade-off between objectives. We propose a novel dynamic optimization approach that prioritizes the most influential hyperparameters under the objective trade-offs encountered during the search, which accelerates empirical convergence and leads to better solutions. Building on prior work that used HPI for post-hoc analysis of MOO, we integrate HPI, calculated with HyperSHAP, into the optimization itself. To do so, we leverage the objective weightings naturally produced by the MOO algorithm ParEGO and adapt the configuration space by fixing the unimportant hyperparameters, allowing the search to focus on the important ones. Finally, we validate our method on diverse tasks from PyMOO and YAHPO-Gym. Empirical results demonstrate improvements in convergence speed and Pareto front quality compared to baselines.
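
To make the idea concrete, the following is a minimal sketch of one proposal step, not the authors' implementation. It assumes objectives normalized to [0, 1] and hyperparameters scaled to [0, 1]. The scalarization is the augmented Tchebycheff function that ParEGO uses. Everything else is a simplifying assumption: `proxy_importance` is a hypothetical correlation-based stand-in for HyperSHAP, `toy_objectives` is an invented benchmark, and the surrogate model that ParEGO normally fits is replaced by plain random sampling of the important hyperparameters.

```python
import numpy as np


def parego_scalarize(objs, weights, rho=0.05):
    """Augmented Tchebycheff scalarization as used by ParEGO.

    objs: array (..., n_objectives), assumed normalized to [0, 1].
    weights: non-negative objective weights that sum to 1.
    """
    weighted = objs * weights
    return weighted.max(axis=-1) + rho * weighted.sum(axis=-1)


def proxy_importance(X, y):
    """Hypothetical stand-in for HyperSHAP (assumption, not its API):
    absolute Pearson correlation between each hyperparameter and the cost."""
    scores = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        if X[:, j].std() > 0 and y.std() > 0:
            scores[j] = abs(np.corrcoef(X[:, j], y)[0, 1])
    return scores


def propose(X, objs, weights, rng, top_k=1, n_candidates=64):
    """Fix unimportant hyperparameters to the incumbent, resample the rest."""
    y = parego_scalarize(objs, weights)
    importance = proxy_importance(X, y)
    important = np.argsort(importance)[::-1][:top_k]
    incumbent = X[np.argmin(y)]  # best configuration under the current weights
    candidates = np.tile(incumbent, (n_candidates, 1))
    candidates[:, important] = rng.uniform(
        0.0, 1.0, size=(n_candidates, len(important))
    )
    return candidates, important


def toy_objectives(X):
    """Invented two-objective problem: x0 drives f1, x1 drives f2, x2 is inert."""
    f1 = 0.8 * X[:, 0] + 0.2 * (1.0 - X[:, 1])
    f2 = 0.8 * X[:, 1] + 0.2 * (1.0 - X[:, 0])
    return np.column_stack([f1, f2])
```

In this toy setting, calling `propose` with weights `[1.0, 0.0]` selects hyperparameter 0 as the one to vary, while weights `[0.0, 1.0]` select hyperparameter 1, which illustrates how importance shifts with the objective trade-off. A full method would redraw the weights each iteration and rank the candidates with a surrogate model rather than sampling them blindly.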
