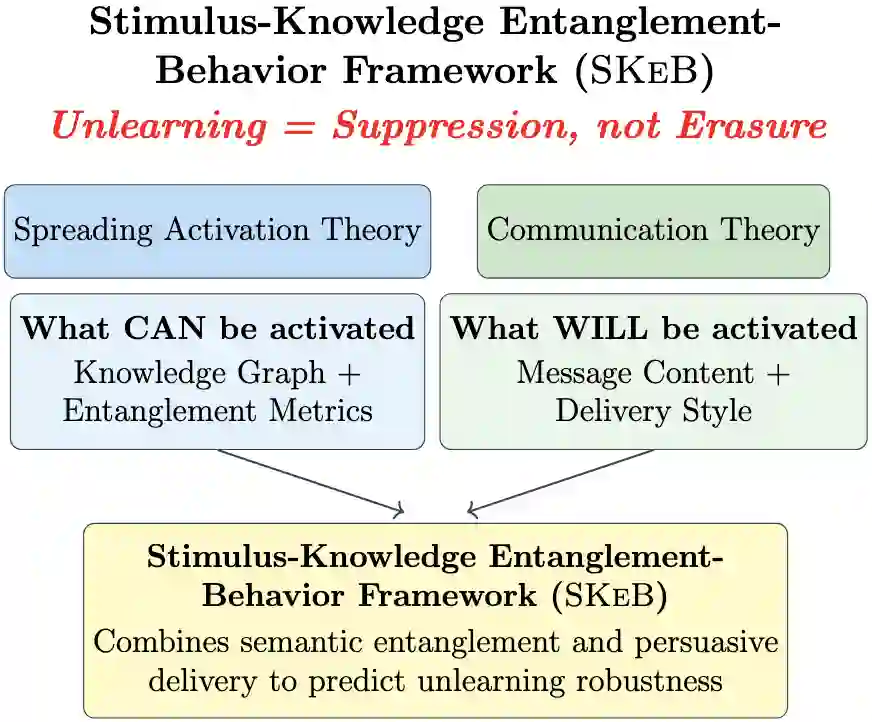

Unlearning in large language models (LLMs) is crucial for managing sensitive data and correcting misinformation, yet evaluating its effectiveness remains an open problem. We investigate whether persuasive prompting can recall factual knowledge from deliberately unlearned LLMs across models ranging from 2.7B to 13B parameters (OPT-2.7B, LLaMA-2-7B, LLaMA-3.1-8B, LLaMA-2-13B). Drawing on spreading activation theories (ACT-R and Hebbian theory) as well as communication principles, we introduce the Stimulus-Knowledge Entanglement-Behavior Framework (SKeB), which models information entanglement via domain graphs and tests whether factual recall in unlearned models is correlated with persuasive framing. We develop entanglement metrics to quantify knowledge activation patterns and evaluate factuality, non-factuality, and hallucination in model outputs. Our results show that persuasive prompts substantially enhance factual knowledge recall (14.8% at baseline vs. 24.5% with authority framing), with effectiveness inversely correlated with model size (128% recovery in the 2.7B model vs. 15% in the 13B model). SKeB provides a foundation for assessing unlearning completeness, robustness, and overall behavior in LLMs.
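To put the headline recall figures in perspective, the following is a minimal sketch of how a relative gain over baseline can be computed from the two aggregate rates quoted above (14.8% and 24.5%). The function name `relative_gain` and the formula are assumptions made for illustration; the abstract does not define how the per-model "recovery" percentages (128% and 15%) are calculated, so this sketch should not be read as the paper's metric.

```python
def relative_gain(baseline: float, treated: float) -> float:
    """Relative change of `treated` over `baseline`, returned as a fraction.

    Hypothetical helper for illustration; not the paper's recovery metric.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (treated - baseline) / baseline


# Aggregate factual recall rates quoted in the abstract, in percent.
baseline_recall = 14.8
authority_recall = 24.5

absolute_gain = authority_recall - baseline_recall
gain = relative_gain(baseline_recall, authority_recall)

print(f"absolute gain: {absolute_gain:.1f} percentage points")  # 9.7
print(f"relative gain: {gain:.1%}")  # 65.5%
```

Under this assumed definition, authority framing raises aggregate factual recall by 9.7 percentage points, or about 65.5% relative to baseline.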
