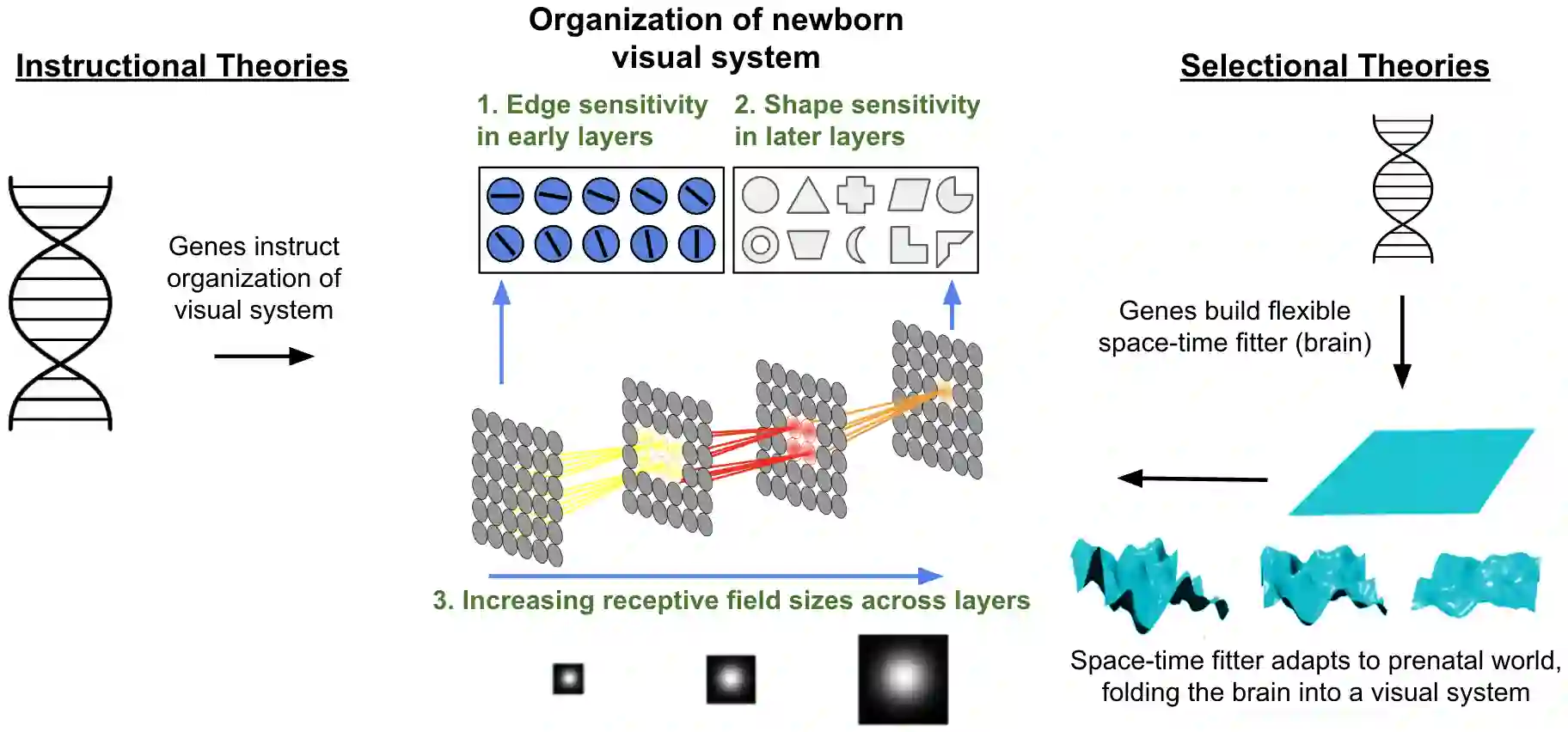

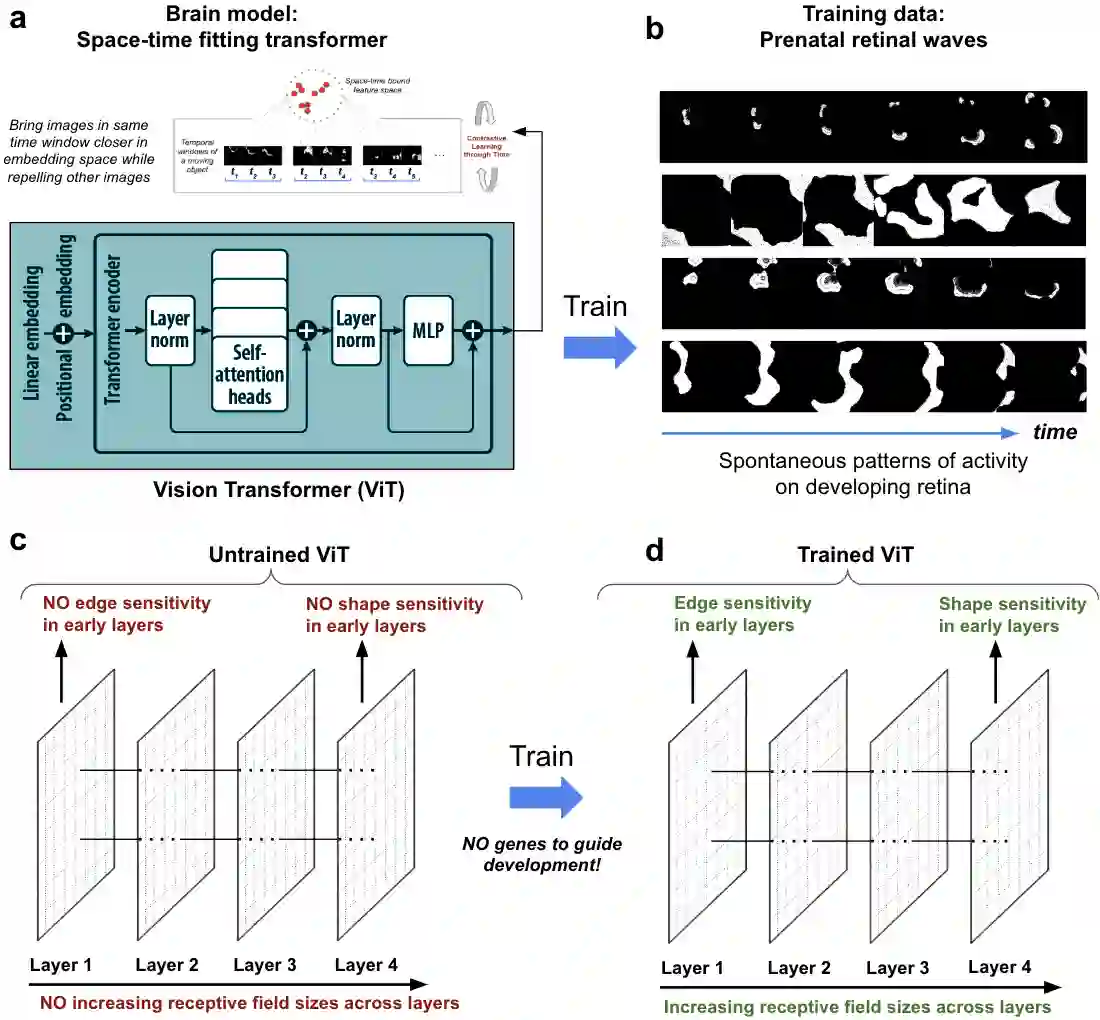

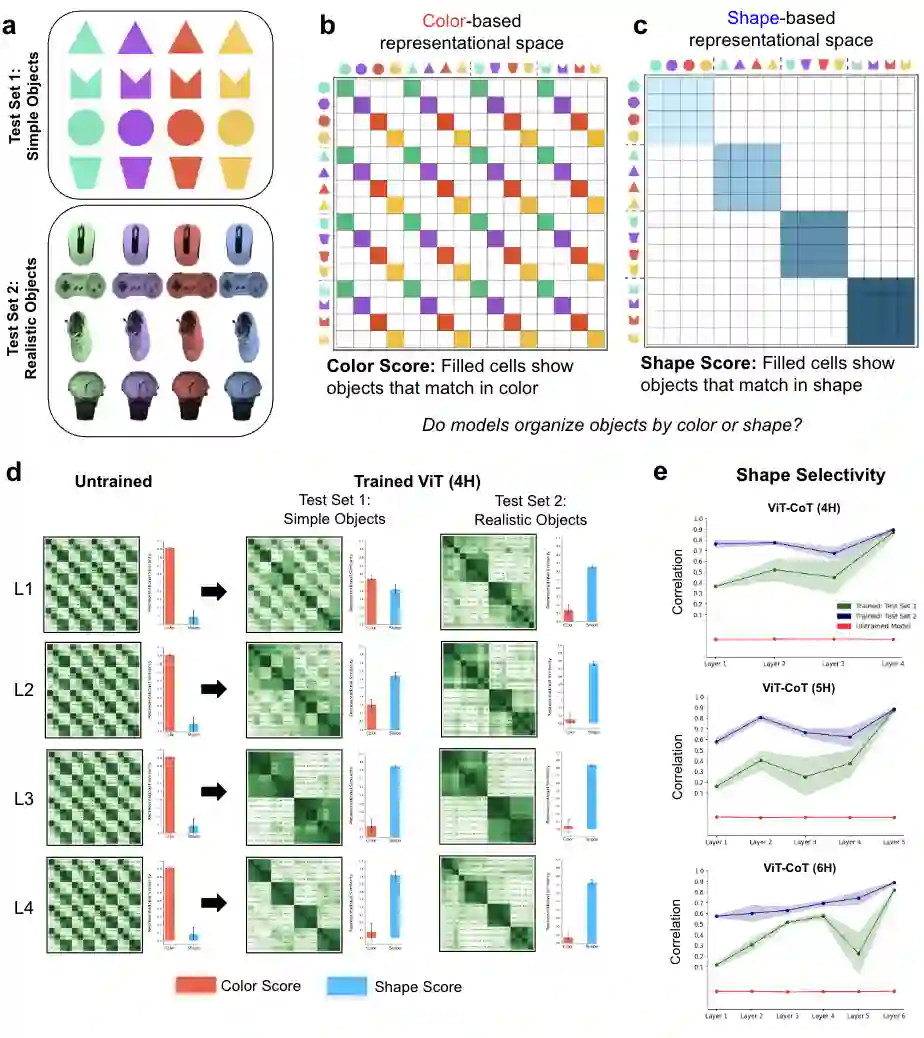

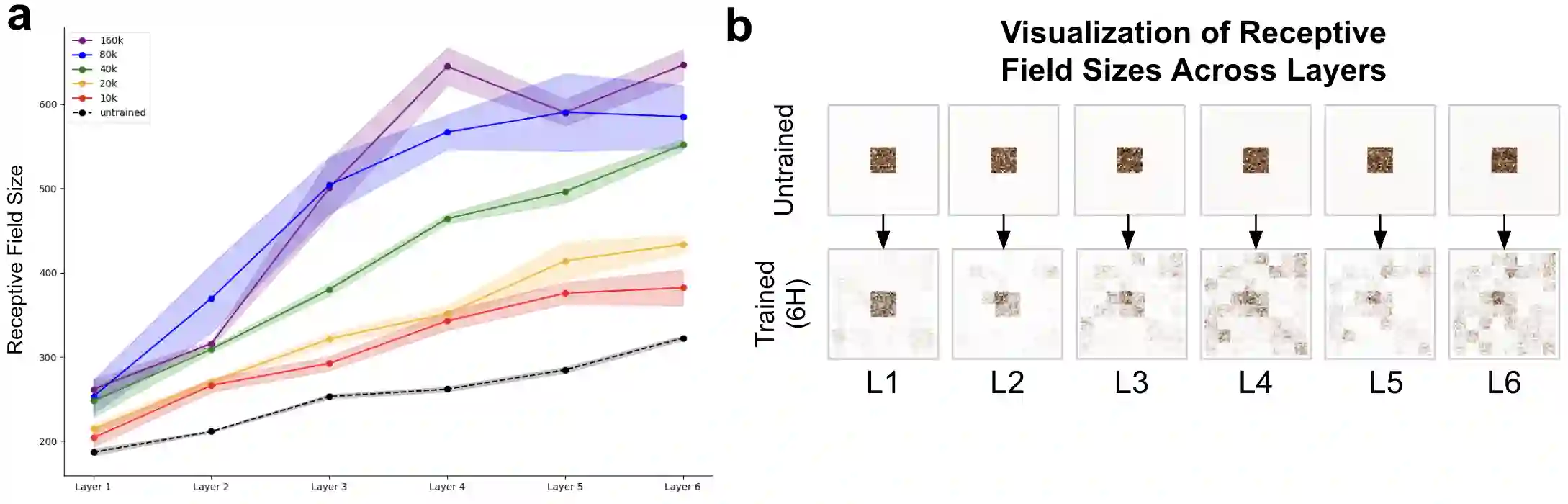

Do transformers learn like brains? A key challenge in addressing this question is that transformers and brains are trained on fundamentally different data. Brains are initially "trained" on prenatal sensory experiences (e.g., retinal waves), whereas transformers are typically trained on large datasets that are not biologically plausible. We reasoned that if transformers learn like brains, then they should develop the same structure as newborn brains when exposed to the same prenatal data. To test this prediction, we simulated prenatal visual input using a retinal wave generator. Then, using self-supervised temporal learning, we trained transformers to adapt to those retinal waves. During training, the transformers spontaneously developed the same structure as newborn visual systems: (1) early layers became sensitive to edges, (2) later layers became sensitive to shapes, and (3) the models developed larger receptive fields across layers. The organization of newborn visual systems emerges spontaneously when transformers adapt to a prenatal visual world. This developmental convergence suggests that brains and transformers learn in common ways and follow the same general fitting principles.
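The abstract names "self-supervised temporal learning" but does not spell out the objective. As a rough illustration only, the sketch below assumes one common form of temporal learning, a time-contrastive (InfoNCE-style) loss in which frames that are close in time are pulled together in embedding space and all other frames are pushed apart. The function name `temporal_info_nce`, the `window` and `temperature` parameters, and the toy circular "embeddings" are all hypothetical and are not taken from the paper.

```python
import numpy as np


def temporal_info_nce(embeddings, window=1, temperature=0.1):
    """Toy time-contrastive loss over a sequence of frame embeddings.

    embeddings: (T, D) array, one row per frame, in temporal order (T >= 2).
    Frames within `window` steps of each other count as positives;
    every other frame in the sequence acts as a negative.
    This is an assumed objective, not the paper's confirmed loss.
    """
    # L2-normalize so the dot product is a cosine similarity.
    norms = np.linalg.norm(embeddings, axis=1, keepdims=True)
    z = embeddings / np.maximum(norms, 1e-12)

    # Pairwise similarities, scaled by the temperature.
    sim = z @ z.T / temperature
    np.fill_diagonal(sim, -np.inf)  # a frame is never its own candidate

    # Numerically stable log-softmax over each row.
    row_max = sim.max(axis=1, keepdims=True)
    shifted = sim - row_max
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

    # Positive pairs: temporal offset between 1 and `window`.
    idx = np.arange(z.shape[0])
    offset = np.abs(idx[:, None] - idx[None, :])
    positives = (offset >= 1) & (offset <= window)

    # Negative mean log-probability assigned to temporal neighbours.
    return -log_prob[positives].mean()


if __name__ == "__main__":
    # Stand-in for encoder outputs on a slowly changing input:
    # points moving smoothly around a circle.
    angles = np.linspace(0.0, 2.0 * np.pi, 32, endpoint=False)
    smooth = np.stack([np.cos(angles), np.sin(angles)], axis=1)

    # The same embeddings with the temporal order destroyed.
    rng = np.random.default_rng(0)
    shuffled = smooth[rng.permutation(len(smooth))]

    print("smooth sequence loss:  ", temporal_info_nce(smooth))
    print("shuffled sequence loss:", temporal_info_nce(shuffled))
```

The loss is lower when neighbouring frames have similar embeddings, which is the pressure that would push an encoder toward features that vary slowly over time. In a real setup the rows of `embeddings` would come from a transformer applied to successive retinal-wave frames rather than from a hand-built circle.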
