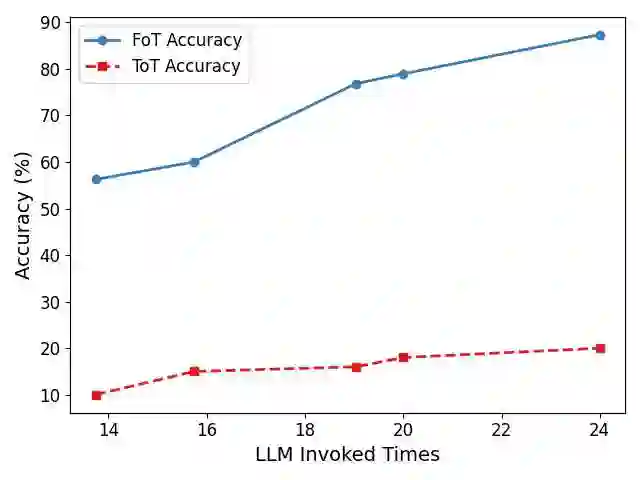

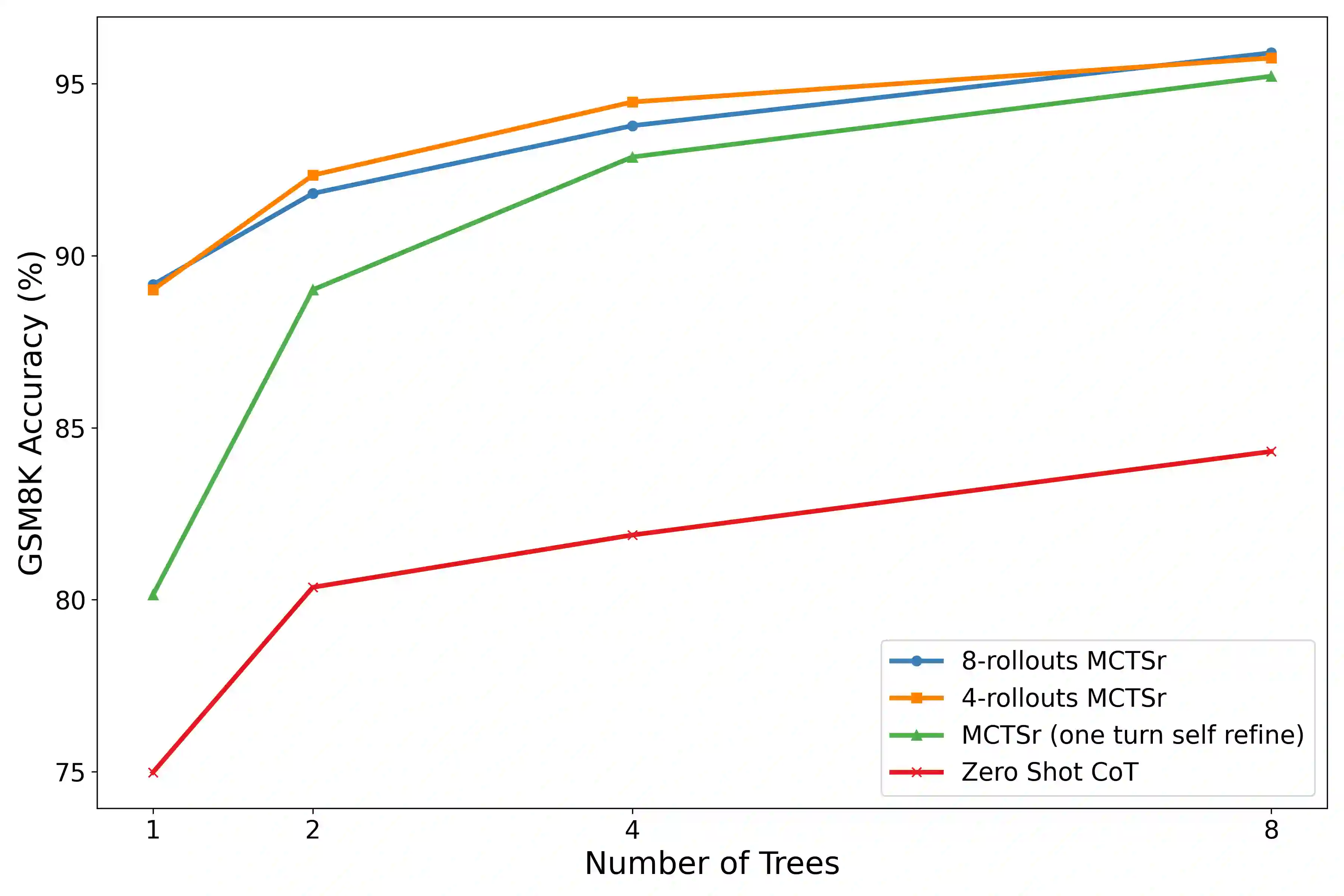

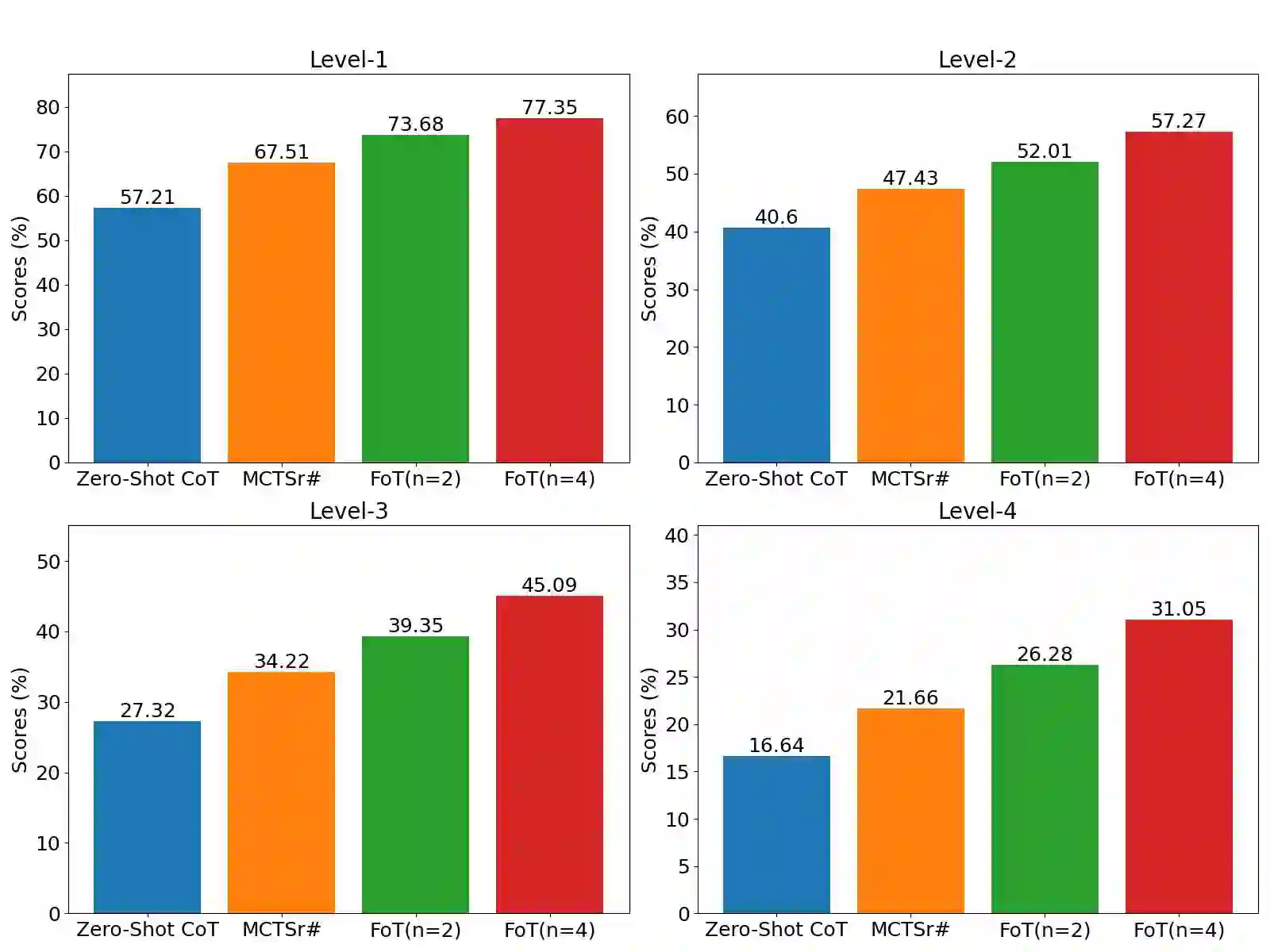

Large Language Models (LLMs) have demonstrated remarkable abilities across various language tasks, but solving complex reasoning problems remains a significant challenge. Existing methods such as Chain-of-Thought (CoT) and Tree-of-Thought (ToT) enhance reasoning by decomposing problems or structuring prompts. However, they typically perform a single pass of reasoning and may fail to revisit flawed paths, which compromises accuracy. To address this limitation, we propose a novel reasoning framework called Forest-of-Thought (FoT), which integrates multiple reasoning trees to leverage collective decision-making for solving complex logical problems. FoT employs sparse activation strategies to select the most relevant reasoning paths, improving both efficiency and accuracy. Additionally, we introduce a dynamic self-correction strategy that enables real-time error correction, along with consensus-guided decision-making strategies that balance correctness against computational cost. Experimental results demonstrate that the FoT framework, combined with these strategies, significantly enhances the reasoning capabilities of LLMs, enabling them to solve complex tasks with greater precision and efficiency. Code will be available at https://github.com/iamhankai/Forest-of-Thought.
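To make the three ingredients (multiple trees, sparse activation, consensus) more concrete, here is a minimal sketch of how answers from several reasoning trees might be combined. It is not the authors' implementation. `TreeResult`, `forest_decide`, the `score` field, `activation_threshold`, and the `correct` hook are all hypothetical names. Sparse activation is approximated by a simple score threshold, self-correction by an optional function applied to each tree's result, and consensus-guided decision-making by a plain majority vote over the activated trees.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class TreeResult:
    """Outcome of one reasoning tree (hypothetical structure)."""
    answer: Optional[str]  # None if the tree did not reach an answer
    score: float           # hypothetical confidence score in [0, 1]


def forest_decide(
    trees: Sequence[Callable[[str], TreeResult]],
    question: str,
    activation_threshold: float = 0.5,
    correct: Callable[[TreeResult], TreeResult] = lambda r: r,
) -> Optional[str]:
    """Run several reasoning trees and combine their answers by majority vote.

    Assumptions (illustrative only):
    - sparse activation: only trees whose score reaches
      `activation_threshold` take part in the vote;
    - self-correction: `correct` may revise a tree's result before
      voting (the default returns it unchanged);
    - consensus: the most frequent answer among activated trees wins,
      with ties going to the answer seen first.
    """
    votes: Counter = Counter()
    for run_tree in trees:
        result = correct(run_tree(question))
        if result.answer is not None and result.score >= activation_threshold:
            votes[result.answer] += 1
    if not votes:
        return None  # no tree was activated, so there is no consensus
    return votes.most_common(1)[0][0]


# Usage with stub "trees" standing in for LLM-driven tree searches.
example_trees = [
    lambda q: TreeResult("24", 0.9),
    lambda q: TreeResult("24", 0.7),
    lambda q: TreeResult("12", 0.8),
    lambda q: TreeResult(None, 0.0),  # failed tree: ignored
    lambda q: TreeResult("12", 0.2),  # below threshold: not activated
]
print(forest_decide(example_trees, "toy question"))  # → 24
```

A majority vote is the simplest possible aggregation rule; a real system could instead weight votes by score or fall back to a further reasoning step when no clear majority emerges.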

翻译:大语言模型(LLMs)在各种语言任务中展现出卓越能力,但解决复杂推理问题仍是重大挑战。现有方法如思维链(CoT)和思维树(ToT)通过分解问题或结构化提示来增强推理,但通常仅执行单次推理且难以修正错误路径,影响准确性。为突破此局限,我们提出名为思维森林(FoT)的新型推理框架,该框架整合多棵推理树以利用集体决策解决复杂逻辑问题。FoT采用稀疏激活策略选择最相关推理路径,提升效率与准确性。此外,我们引入动态自校正策略实现实时纠错,并结合共识引导决策策略以优化正确性与计算资源。实验结果表明,FoT框架结合这些策略能显著增强LLMs的推理能力,使其以更高精度和效率解决复杂任务。代码发布于 https://github.com/iamhankai/Forest-of-Thought。