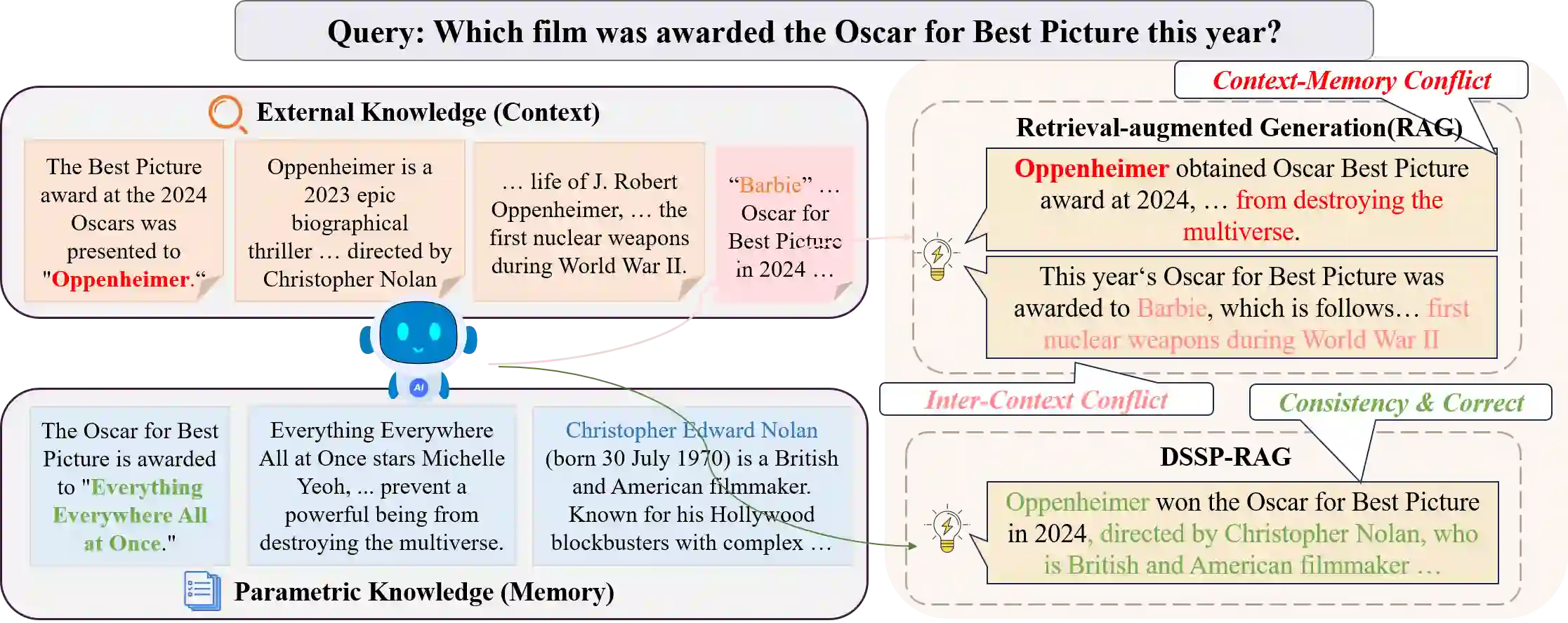

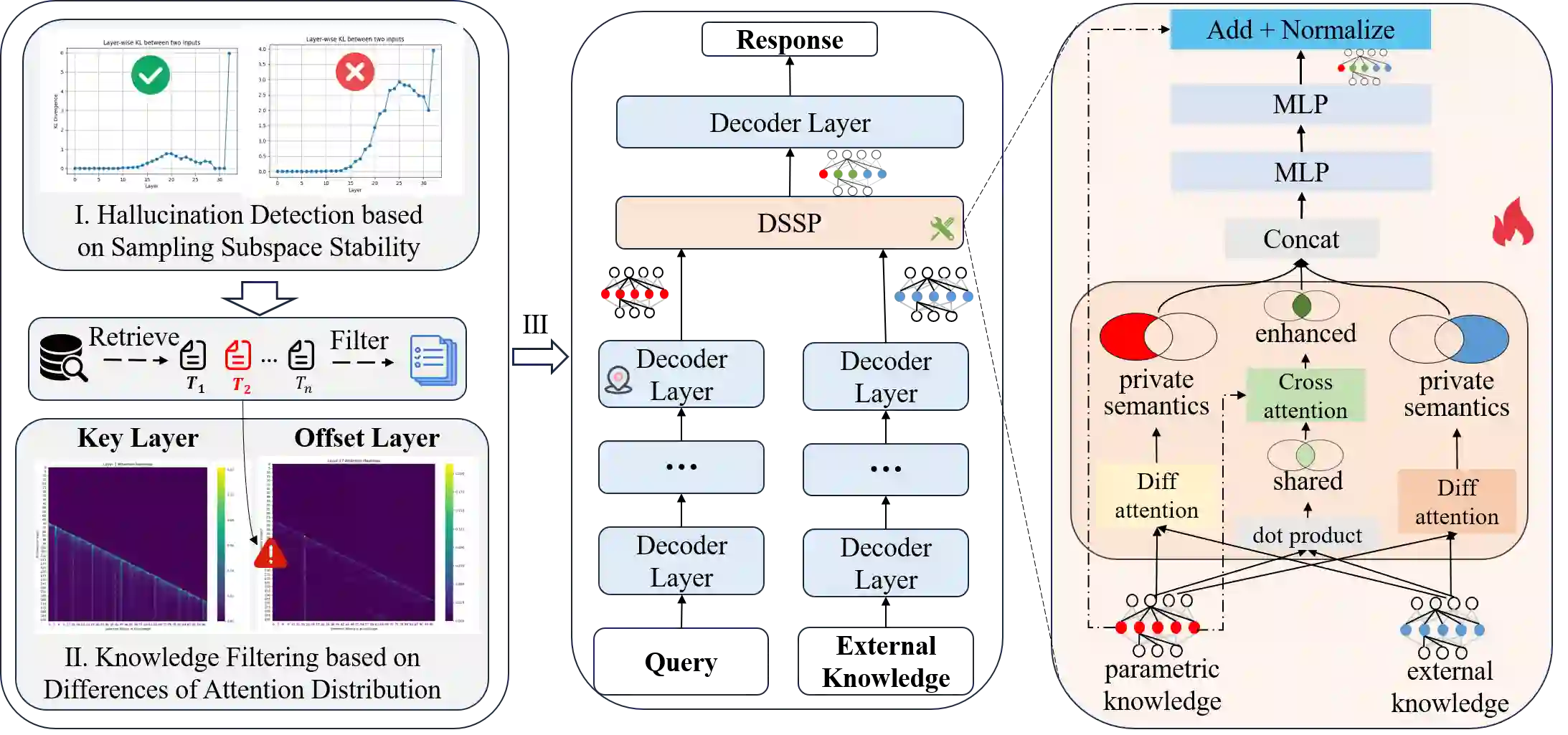

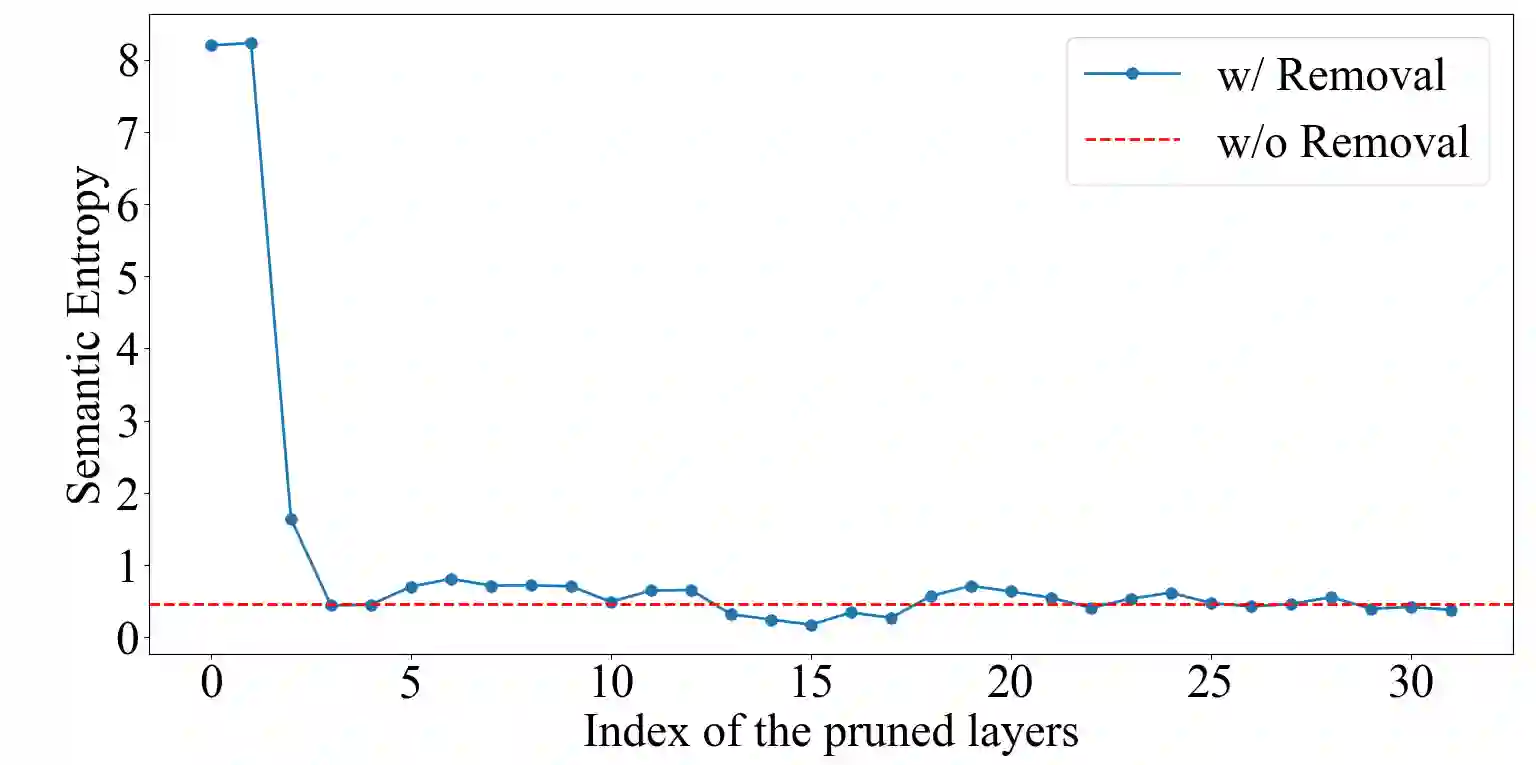

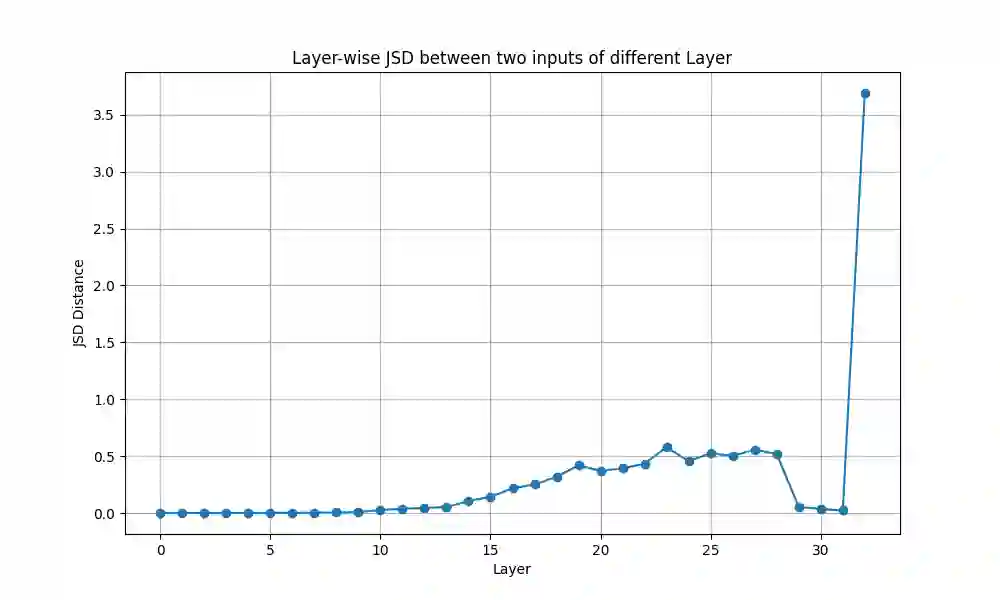

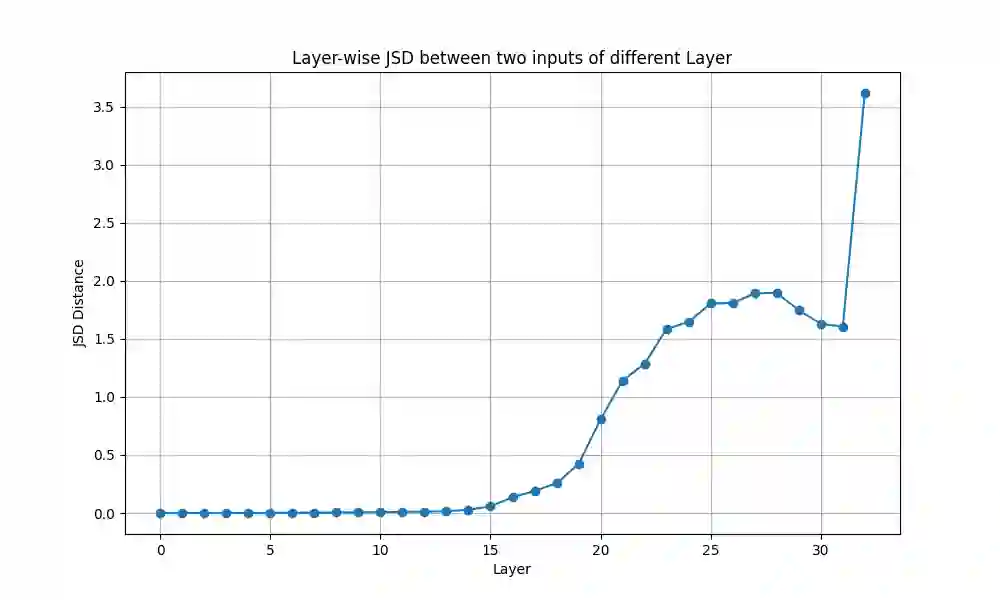

Retrieval-augmented generation (RAG) aims to mitigate hallucination in Large Language Models (LLMs) by retrieving relevant external knowledge and incorporating it into the generation process. However, external knowledge may contain noise and may conflict with the parametric knowledge of LLMs, which degrades performance. Current LLMs lack inherent mechanisms for resolving such conflicts. To fill this gap, we propose a Dual-Stream Knowledge-Augmented Framework for Shared-Private Semantic Synergy (DSSP-RAG). At its core, the framework refines conventional self-attention into a mixed attention that distinguishes shared from private semantics, enabling controlled knowledge integration. An unsupervised hallucination detection method that captures the LLM's intrinsic cognitive uncertainty ensures that external knowledge is introduced only when necessary. To reduce noise in external knowledge, we propose an Energy Quotient (EQ), defined by attention difference matrices between task-aligned and task-misaligned layers. Extensive experiments show that DSSP-RAG achieves superior performance over strong baselines.
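To make the idea of introducing external knowledge "only when necessary" concrete, the following is a minimal sketch of an uncertainty-gated retrieval decision. It is not the detection method of DSSP-RAG, which the abstract does not specify. It assumes a common stand-in, the mean Shannon entropy of the model's next-token distributions, and the function names (`mean_token_entropy`, `should_retrieve`), the toy logits, and the threshold of 1.0 nats are all hypothetical choices made for illustration.

```python
import math


def softmax(logits):
    """Convert raw logits to probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def mean_token_entropy(step_logits):
    """Average next-token entropy over all generation steps."""
    return sum(entropy(softmax(row)) for row in step_logits) / len(step_logits)


def should_retrieve(step_logits, threshold=1.0):
    """Assumed gating rule: retrieve only if the model looks uncertain.

    The threshold is a hypothetical value, not one taken from the paper.
    """
    return mean_token_entropy(step_logits) > threshold


# Toy logits over a 4-token vocabulary, two generation steps each.
confident = [[10.0, 0.0, 0.0, 0.0], [9.0, 0.0, 0.0, 0.0]]
uncertain = [[1.0, 1.0, 1.0, 1.0], [0.5, 0.4, 0.6, 0.5]]

print(should_retrieve(confident))
print(should_retrieve(uncertain))
```

With these toy inputs, the peaked distributions fall below the threshold and the near-uniform ones exceed it, so only the second case would trigger retrieval. In practice the signal and the threshold would both need to be calibrated against the model and task at hand.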
