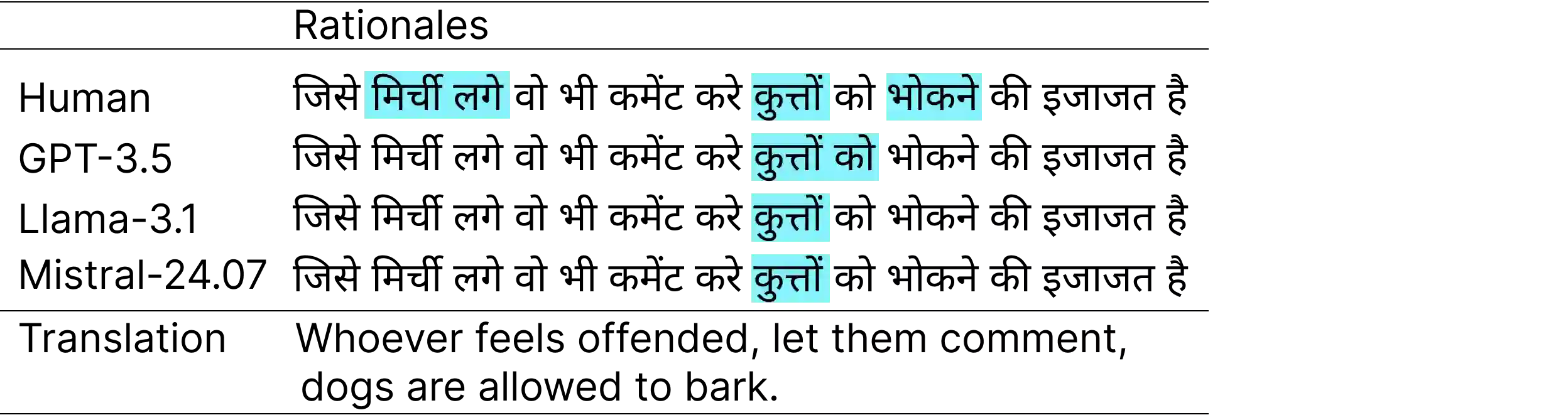

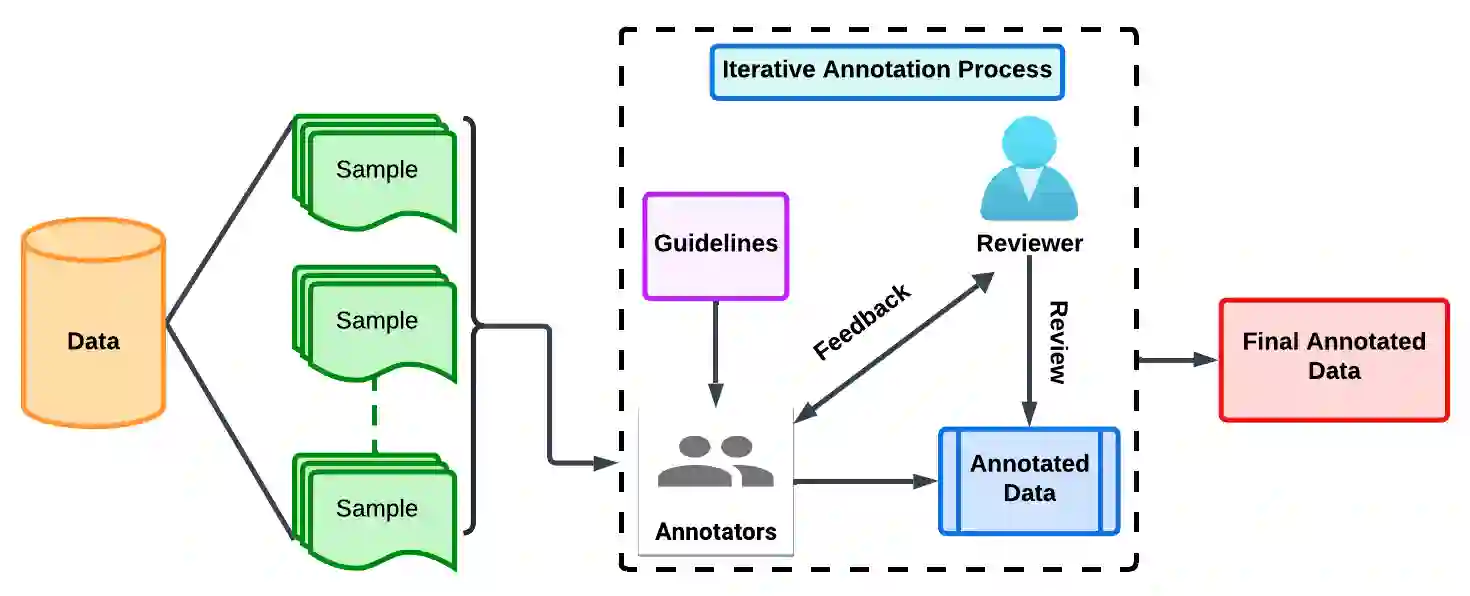

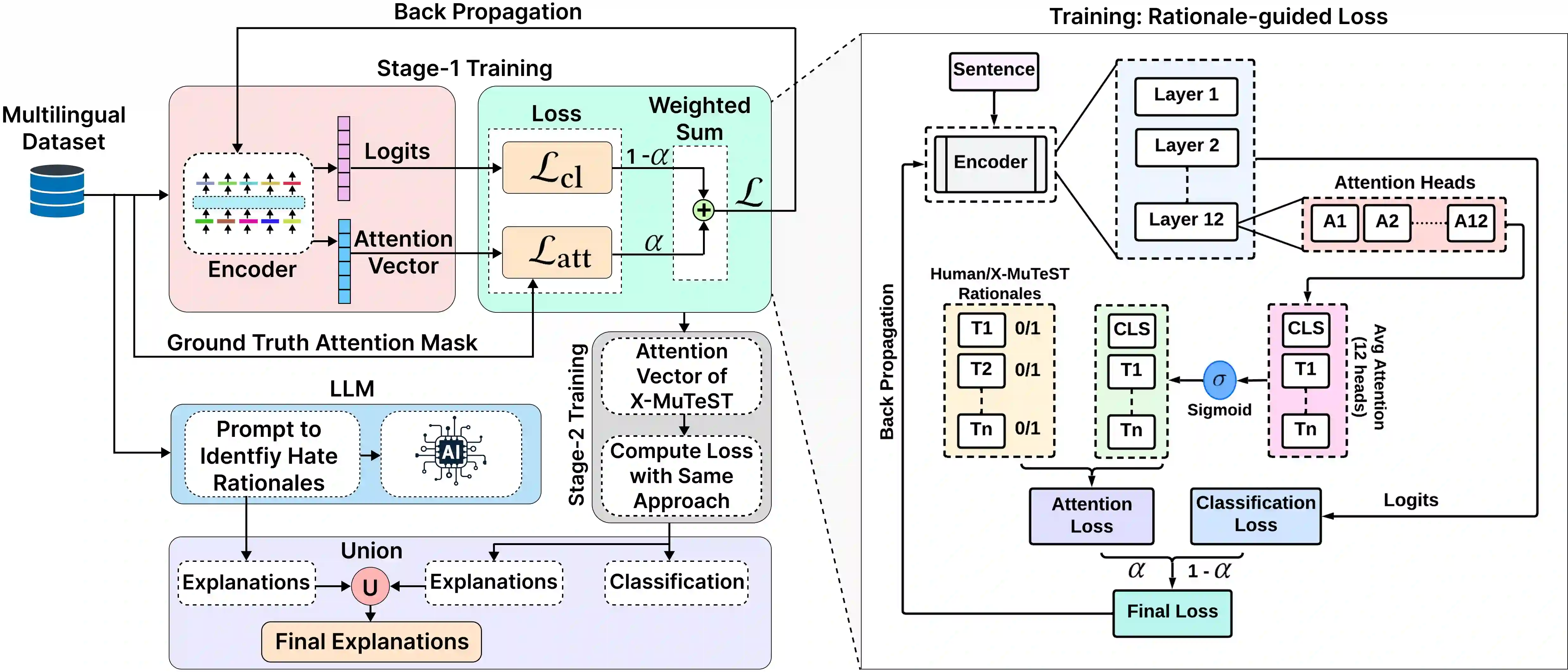

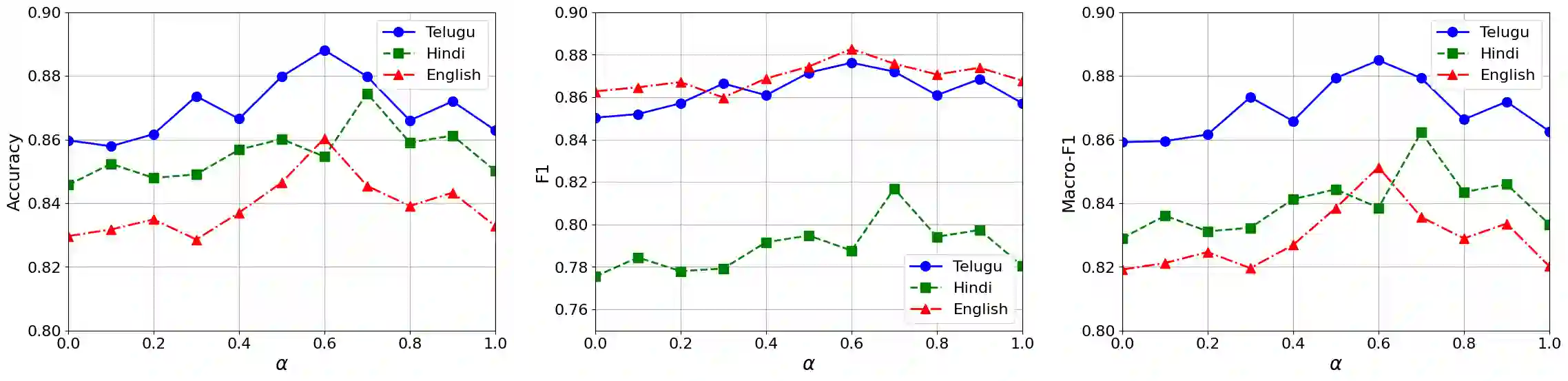

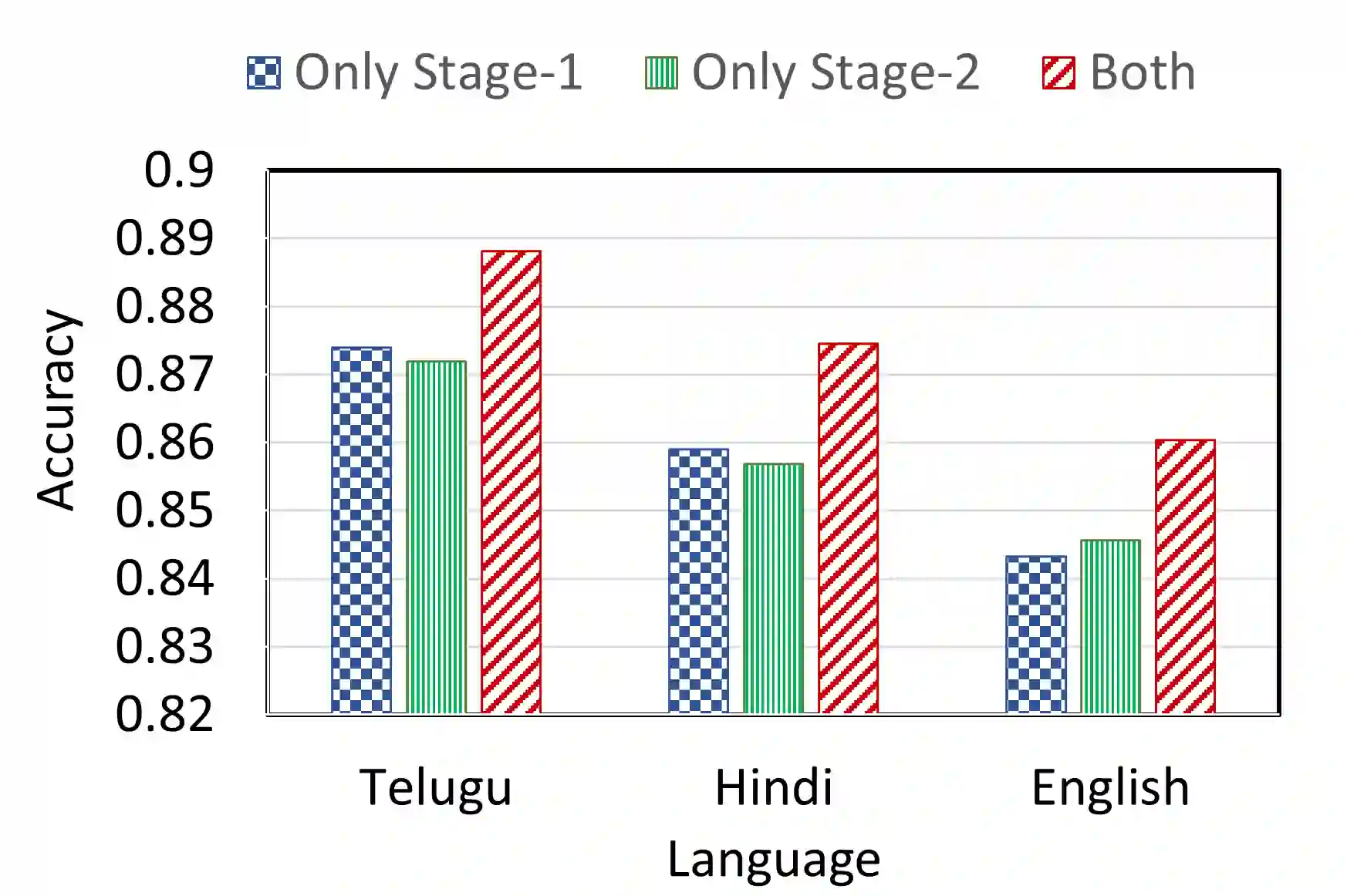

Hate speech detection on social media faces challenges in both accuracy and explainability, especially for underexplored Indic languages. We propose X-MuTeST (eXplainable Multilingual haTe Speech deTection), a novel explainability-guided training framework that combines high-level semantic reasoning from large language models (LLMs) with traditional attention-enhancing techniques. We extend this research to Hindi and Telugu alongside English by providing benchmark human-annotated rationales for each word to justify the assigned class label. The X-MuTeST explainability method computes the difference between the prediction probabilities of the original text and those of its unigrams, bigrams, and trigrams. Final explanations are computed as the union of the LLM explanations and the X-MuTeST explanations. We show that leveraging human rationales during training enhances both classification performance and explainability. Moreover, combining human rationales with our explainability method to refine the model's attention yields further improvements. We evaluate explainability using plausibility metrics (Token-F1 and IOU-F1) and faithfulness metrics (Comprehensiveness and Sufficiency). By focusing on under-resourced languages, our work advances hate speech detection across diverse linguistic contexts. Our dataset includes token-level rationale annotations for 6,004 Hindi, 4,492 Telugu, and 6,334 English samples. Data and code are available at https://github.com/ziarehman30/X-MuTeST.
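The n-gram probability comparison and the union step described above can be illustrated with a minimal sketch. It is not the released implementation: the function names, the keyword-based `toy_predict_proba` classifier, the selection rule (keep tokens covered by an n-gram whose probability gap is at most `threshold`), and the LLM rationale indices are all assumptions made for illustration.

```python
from typing import Callable, List, Set, Tuple


def ngram_prob_gaps(
    tokens: List[str],
    predict_proba: Callable[[str], float],
    max_n: int = 3,
) -> List[Tuple[int, int, float]]:
    """Return (start, end, gap) for every unigram, bigram, and trigram.

    gap = P(hate | full text) - P(hate | n-gram alone).
    """
    p_full = predict_proba(" ".join(tokens))
    gaps = []
    for n in range(1, max_n + 1):
        for start in range(len(tokens) - n + 1):
            p_ngram = predict_proba(" ".join(tokens[start:start + n]))
            gaps.append((start, start + n, p_full - p_ngram))
    return gaps


def select_tokens(gaps: List[Tuple[int, int, float]], threshold: float) -> Set[int]:
    """Assumed selection rule: keep tokens of n-grams with a small gap."""
    selected: Set[int] = set()
    for start, end, gap in gaps:
        if gap <= threshold:
            selected.update(range(start, end))
    return selected


def toy_predict_proba(text: str) -> float:
    """Hypothetical stand-in for a trained classifier (keyword counting)."""
    hits = sum(word in {"idiots", "vermin"} for word in text.split())
    return min(1.0, 0.1 + 0.4 * hits)


tokens = "those people are idiots and vermin".split()
gaps = ngram_prob_gaps(tokens, toy_predict_proba)
ngram_rationale = select_tokens(gaps, threshold=0.2)

# Hypothetical token indices an LLM might flag as its rationale.
llm_rationale = {1, 3}

# Final explanation: union of the LLM and n-gram based rationales.
final_rationale = ngram_rationale | llm_rationale
print(sorted(final_rationale))
```

In practice `predict_proba` would wrap a trained model, and the threshold (or any other selection rule) would need to be tuned; the sketch only shows how the n-gram comparison and the union fit together.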
