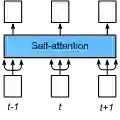

Although recent approaches to face normal estimation have achieved promising results, their effectiveness depends heavily on large-scale paired data for training. This paper aims to relax this requirement by developing a coarse-to-fine normal estimator. Concretely, our method first trains a simple model on a small dataset to produce coarse face normals that serve as guidance (called exemplars) for the subsequent refinement. A self-attention mechanism is employed to capture long-range dependencies, thereby remedying severe local artifacts in the estimated coarse normals. A refinement network is then designed to map input face images, together with their corresponding exemplars, to fine-grained, high-quality facial normals. This split of functions significantly reduces the need for massive paired data and computational resources. Extensive experiments and ablation studies demonstrate the efficacy of our design and show its superiority over state-of-the-art methods in both training cost and estimation quality. Our code and models are open-sourced at: https://github.com/AutoHDR/FNR2R.git.
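The two-stage data flow described above can be illustrated with a minimal NumPy sketch. It is not the released implementation: the function names (`coarse_stage`, `refine_stage`, `init_params`), the random linear maps standing in for trained networks, the residual connections, and the 8×8 toy input are all assumptions made for illustration. Only the overall structure follows the abstract: a coarse estimator with self-attention produces an exemplar, and a refinement step maps the image together with the exemplar to unit-length normals.

```python
import numpy as np

def self_attention(feats, w_q, w_k, w_v):
    """Scaled dot-product self-attention over N spatial positions.

    feats: (N, C) array with one row per pixel, so every position can
    attend to every other one (long-range dependencies).
    """
    q, k, v = feats @ w_q, feats @ w_k, feats @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])          # (N, N) pairwise affinities
    scores -= scores.max(axis=-1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)   # each row sums to 1
    return feats + weights @ v                       # residual link (assumption)

def to_unit_normals(x, eps=1e-12):
    """Scale each 3-vector to unit length so it is a valid surface normal."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def coarse_stage(image, params):
    """Stand-in for the coarse estimator: image (H, W, 3) -> exemplar (H, W, 3)."""
    h, w, _ = image.shape
    feats = image.reshape(h * w, 3) @ params["embed"]            # (N, C)
    feats = self_attention(feats, params["w_q"], params["w_k"], params["w_v"])
    return to_unit_normals((feats @ params["head"]).reshape(h, w, 3))

def refine_stage(image, exemplar, params):
    """Stand-in for the refinement network: (image, exemplar) -> fine normals."""
    x = np.concatenate([image, exemplar], axis=-1)               # (H, W, 6)
    # Predict a correction on top of the exemplar (assumption, not from the paper).
    return to_unit_normals(exemplar + x @ params["refine"])

def init_params(channels=8, seed=0):
    """Random weights in place of trained ones (hypothetical shapes)."""
    rng = np.random.default_rng(seed)
    shapes = {
        "embed": (3, channels),
        "w_q": (channels, channels),
        "w_k": (channels, channels),
        "w_v": (channels, channels),
        "head": (channels, 3),
        "refine": (6, 3),
    }
    return {name: rng.normal(scale=0.1, size=shape) for name, shape in shapes.items()}

image = np.random.default_rng(1).random((8, 8, 3))   # toy stand-in for a face image
params = init_params()
exemplar = coarse_stage(image, params)                # coarse normals used as guidance
normals = refine_stage(image, exemplar, params)       # refined per-pixel normals
```

In this sketch the refinement step receives the exemplar as an extra input rather than predicting normals from the image alone, which mirrors the split of functions the abstract credits with reducing the need for paired training data.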
