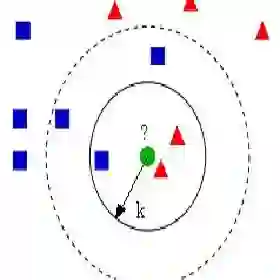

Mixture-of-Experts (MoE) architectures scale large language models efficiently by employing a parametric "router" to dispatch tokens to a sparse subset of experts. Typically, this router is trained once and then frozen, rendering routing decisions brittle under distribution shifts. We address this limitation by introducing kNN-MoE, a retrieval-augmented routing framework that reuses optimal expert assignments from a memory of similar past cases. This memory is constructed offline by directly optimizing token-wise routing logits to maximize the likelihood on a reference set. Crucially, we use the aggregate similarity of retrieved neighbors as a confidence-driven mixing coefficient, thus allowing the method to fall back to the frozen router when no relevant cases are found. Experiments show kNN-MoE outperforms zero-shot baselines and rivals computationally expensive supervised fine-tuning.
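The abstract does not give the exact retrieval or mixing formulas. Below is a minimal pure-Python sketch of the inference-time idea, built on several assumptions that are not in the source. Memory keys are assumed to be token hidden states, and similarity is assumed to be cosine. The confidence λ is assumed to be the mean clipped similarity of the k retrieved neighbours. The memory and router distributions are assumed to be interpolated in probability space, in the style of kNN-LM. `knn_route`, `MemoryEntry` and the toy values are hypothetical. The offline step that optimizes the stored routing logits is not shown.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical memory entry: (key vector for a past token, routing logits
# optimized offline for that token). Keys are assumed to be hidden states.
MemoryEntry = Tuple[List[float], List[float]]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over expert logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def knn_route(
    hidden: Sequence[float],
    router_logits: Sequence[float],
    memory: Sequence[MemoryEntry],
    k: int = 4,
    top_experts: int = 2,
) -> Tuple[List[int], List[float], float]:
    """Return (chosen expert ids, renormalized gate weights, mixing coefficient)."""
    # Retrieve the k stored cases most similar to the current token.
    scored = sorted(
        ((cosine(hidden, key), logits) for key, logits in memory),
        key=lambda pair: pair[0],
        reverse=True,
    )[:k]
    # Negative similarity is treated as no evidence at all.
    sims = [max(0.0, s) for s, _ in scored]
    sim_total = sum(sims)
    # Assumed confidence: mean clipped similarity of the neighbours, in [0, 1].
    lam = sim_total / len(sims) if sims else 0.0

    p_router = softmax(router_logits)
    p_mem = list(p_router)  # placeholder; irrelevant when lam == 0
    if sim_total > 0.0:
        # Similarity-weighted average of the neighbours' routing distributions.
        p_mem = [0.0] * len(p_router)
        for w, (_, logits) in zip(sims, scored):
            for e, p in enumerate(softmax(logits)):
                p_mem[e] += (w / sim_total) * p

    # Interpolate: high confidence trusts memory, low confidence falls back
    # to the frozen router (lam == 0 reproduces it exactly).
    mixed = [lam * pm + (1.0 - lam) * pr for pm, pr in zip(p_mem, p_router)]

    # Usual sparse top-k dispatch with gates renormalized over chosen experts.
    chosen = sorted(range(len(mixed)), key=lambda e: mixed[e], reverse=True)[:top_experts]
    norm = sum(mixed[e] for e in chosen)
    return chosen, [mixed[e] / norm for e in chosen], lam


if __name__ == "__main__":
    router = [2.0, 0.0, 0.0, -1.0]  # frozen router prefers expert 0
    memory = [
        ([1.0, 0.0], [-2.0, 3.0, 0.0, 0.0]),  # stored cases favour expert 1
        ([0.9, 0.1], [-2.0, 3.0, 0.0, 0.0]),
    ]
    experts, gates, lam = knn_route([1.0, 0.0], router, memory, k=2)
    print(experts, [round(g, 3) for g in gates], round(lam, 3))
```

With these toy values, the two close neighbours give λ ≈ 0.997. As a result, expert 1 (favoured by memory) outranks expert 0 (favoured by the frozen router). If the memory's keys are orthogonal to the token, or the memory is empty, λ = 0 and the router's original choice is returned unchanged. This is the fallback behaviour the abstract describes. Interpolating in probability space rather than logit space is a design choice made for this sketch only.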

翻译:混合专家(MoE)架构通过采用参数化“路由器”将令牌分发至稀疏的专家子集,从而高效扩展大语言模型。通常,该路由器经过一次训练后即被冻结,导致在分布偏移下路由决策变得脆弱。为克服此限制,我们提出kNN-MoE——一种检索增强的路由框架,该框架通过复用历史相似案例记忆中的最优专家分配来实现路由。该记忆通过直接优化令牌级路由逻辑值以最大化参考集上的似然度,在离线状态下构建。关键的是,我们利用检索到的近邻聚合相似度作为置信度驱动的混合系数,使得该方法在未找到相关案例时可回退至冻结路由器。实验表明,kNN-MoE在零样本基准上表现优异,且可与计算成本高昂的监督微调方法相媲美。