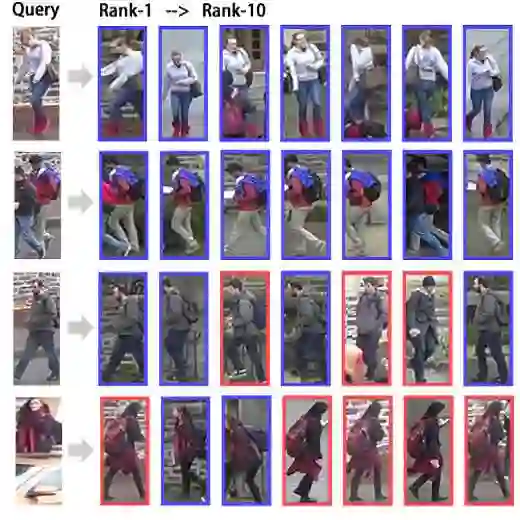

Person re-identification (ReID) is important in both surveillance and mobile applications, which demand high accuracy at minimal computational cost. State-of-the-art methods achieve good accuracy but at a high computational cost. To address this, this paper proposes VisNet, a computationally efficient and effective re-identification model suited to real-world scenarios. It combines several conceptual contributions: feature fusion at multiple scales with automatic attention on each scale, semantic clustering with anatomical body partitioning, a dynamic weight averaging technique to balance classification and semantic regularization, and the FIDI loss function for improved metric learning. The multi-scale fusion combines stages 1 through 4 of ResNet50 without parallel paths, while semantic clustering introduces spatial constraints through rule-based pseudo-labeling. VisNet achieves 87.05% Rank-1 accuracy and 77.65% mAP on the Market-1501 dataset with 32.41M parameters and 4.601 GFLOPs, offering a practical approach for real-time deployment in surveillance and mobile applications where computational resources are limited.
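
The abstract does not spell out how the dynamic weight averaging is computed. As a rough illustration only, the sketch below assumes it follows the commonly used formulation in which each task's weight is a softmax over the ratio of its two most recent losses, scaled so the weights sum to the number of tasks. The function name `dwa_weights`, the temperature of 2.0, and the loss values are hypothetical and are not taken from the paper.

```python
import math


def dwa_weights(prev_losses, prev_prev_losses, temperature=2.0):
    """Return one weight per task; the weights sum to the number of tasks.

    Assumed formulation (not confirmed by the abstract):
        r_k = L_k(t-1) / L_k(t-2)
        w_k = K * exp(r_k / T) / sum_i exp(r_i / T)
    Losses from two steps ago must be non-zero.
    """
    # Ratio close to (or above) 1 means the task's loss has barely decreased.
    ratios = [prev / older for prev, older in zip(prev_losses, prev_prev_losses)]
    # Softmax over the ratios, softened by the temperature.
    exps = [math.exp(r / temperature) for r in ratios]
    total = sum(exps)
    num_tasks = len(ratios)
    return [num_tasks * e / total for e in exps]


# Hypothetical losses for two objectives:
# index 0 = identity classification, index 1 = semantic regularization.
weights = dwa_weights(prev_losses=[0.9, 0.5], prev_prev_losses=[1.0, 1.0])
total_loss_weights = dict(zip(["classification", "semantic"], weights))
```

In this example the classification loss has fallen more slowly (ratio 0.9 versus 0.5), so it receives the larger weight for the next step. A higher temperature pushes the weights toward equality.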
